What is a Flaky Test?

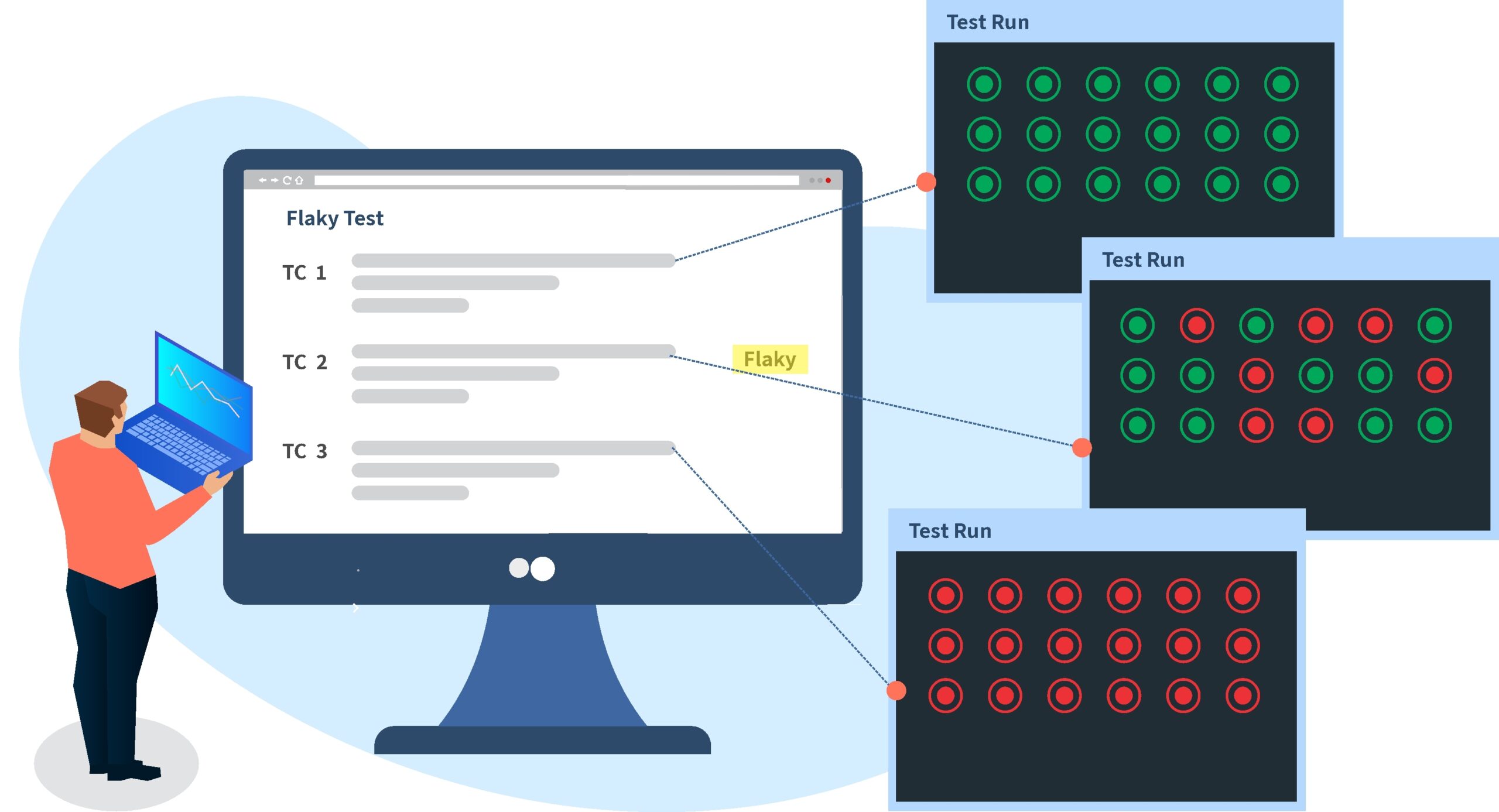

A flaky test is a test that produces inconsistent results, passing or failing unpredictably without any changes to the code under test.

Unlike reliable tests, which yield the same results consistently, flaky tests create uncertainty, posing challenges for software development teams.

Characteristics of Flaky Tests

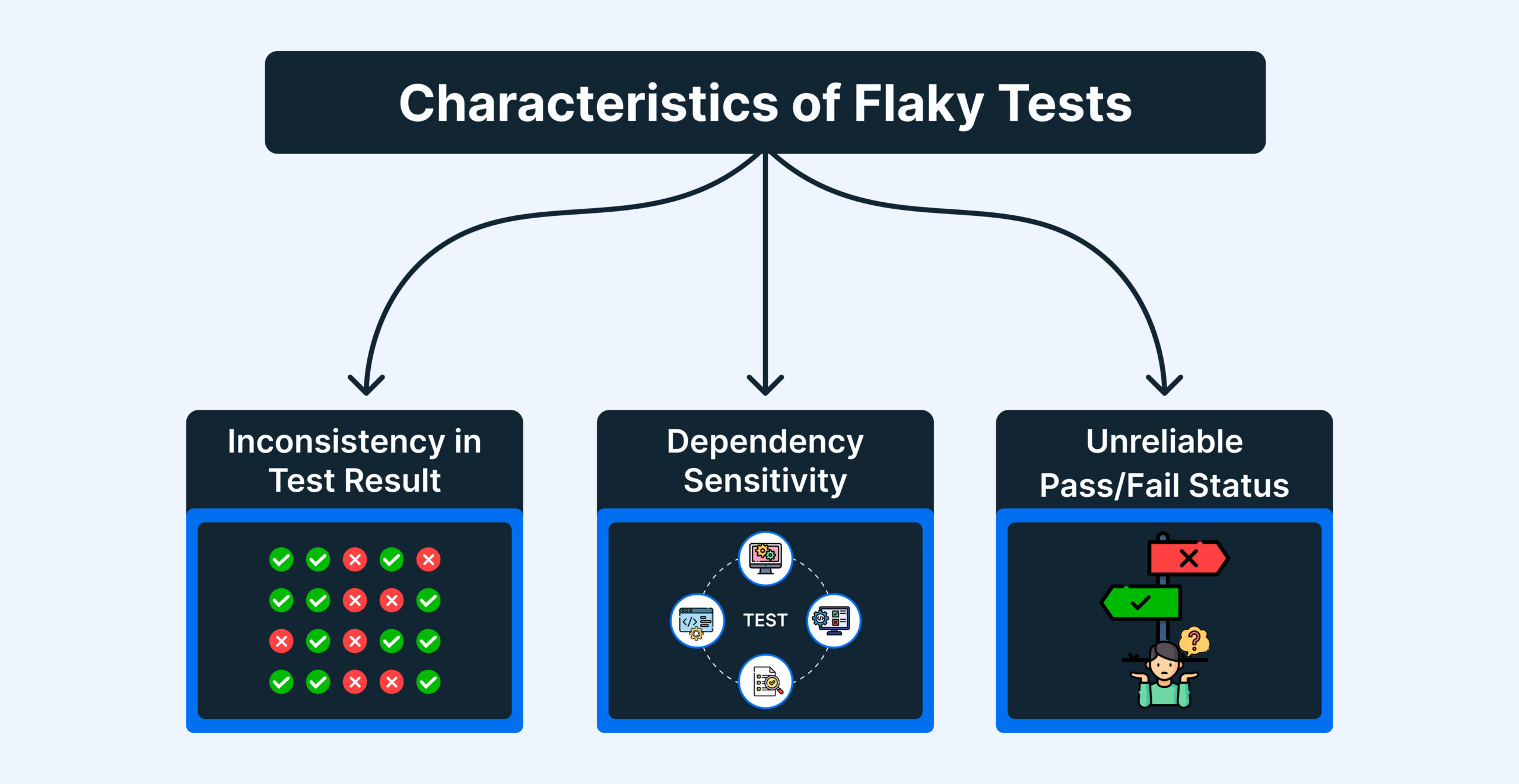

Some of the common characteristics of Flaky Tests are:

- Inconsistency: Flaky tests display variability in their results, making it challenging to ascertain a definitive pass or fail status. The status changes between pass and fail seemingly at random, with no discernible pattern.

- Unreliable Pass/Fail Status: The pass or fail status of a flaky test cannot be trusted on its own, which creates ambiguity when assessing software quality. Each result needs further verification before any conclusion can be drawn.

- Dependency Sensitivity: Flaky tests are often sensitive to external factors, such as shared resources or network conditions, further amplifying their unpredictable nature.

What causes Flaky Tests?

Understanding the root causes of flaky tests is crucial for devising effective strategies to mitigate their impact.

- Concurrency Issues: Flaky tests may arise when multiple tests compete for shared resources concurrently, leading to race conditions.

- External Dependencies: Interaction with external systems, like APIs or databases, introduces flakiness due to factors such as network latency and varying response times.

- Non-deterministic behavior: Flakiness appears when the test relies on random or unpredictable elements, such as dates, times, UUIDs, or user input.

- Unstable environment: A test environment that is not isolated, controlled, or consistent leads to variations in performance, availability, or configuration.

- Insufficient assertions: A test that does not check all the relevant aspects of the expected behavior or outcome leaves room for false positives or negatives.

- Flawed logic: Bugs, typos, or errors in the test code or its logic affect the test's functionality or validity.

Why is Flaky Test detection important?

Flaky tests can have serious consequences for software quality and development.

According to a report from Google, almost 16% of its tests showed some level of flakiness, and about 1.5% of all test runs reported a flaky result.

Another study by Microsoft estimated that flaky tests cost them $1.14 million per year in terms of developer time.

Some of the reasons why flaky test detection is important are:

- Flaky tests undermine confidence in the test results and the software quality. If a test fails intermittently, it is hard to know if it is due to a real defect in the code or a flakiness issue. This can lead to false alarms, missed bugs, or wasted time and resources.

- Flaky tests slow down the development process and increase costs. If a test fails randomly, it may require multiple reruns, manual verification, or debugging to determine the root cause. This can delay the delivery of features, fixes, or releases and consume valuable resources and budget.

- Flaky tests affect team morale and productivity. If a team has to deal with frequent flaky test failures, it can cause frustration, stress, or distrust among developers, testers, and managers. This can lower the team’s motivation, performance, and collaboration.

How to detect Flaky Tests?

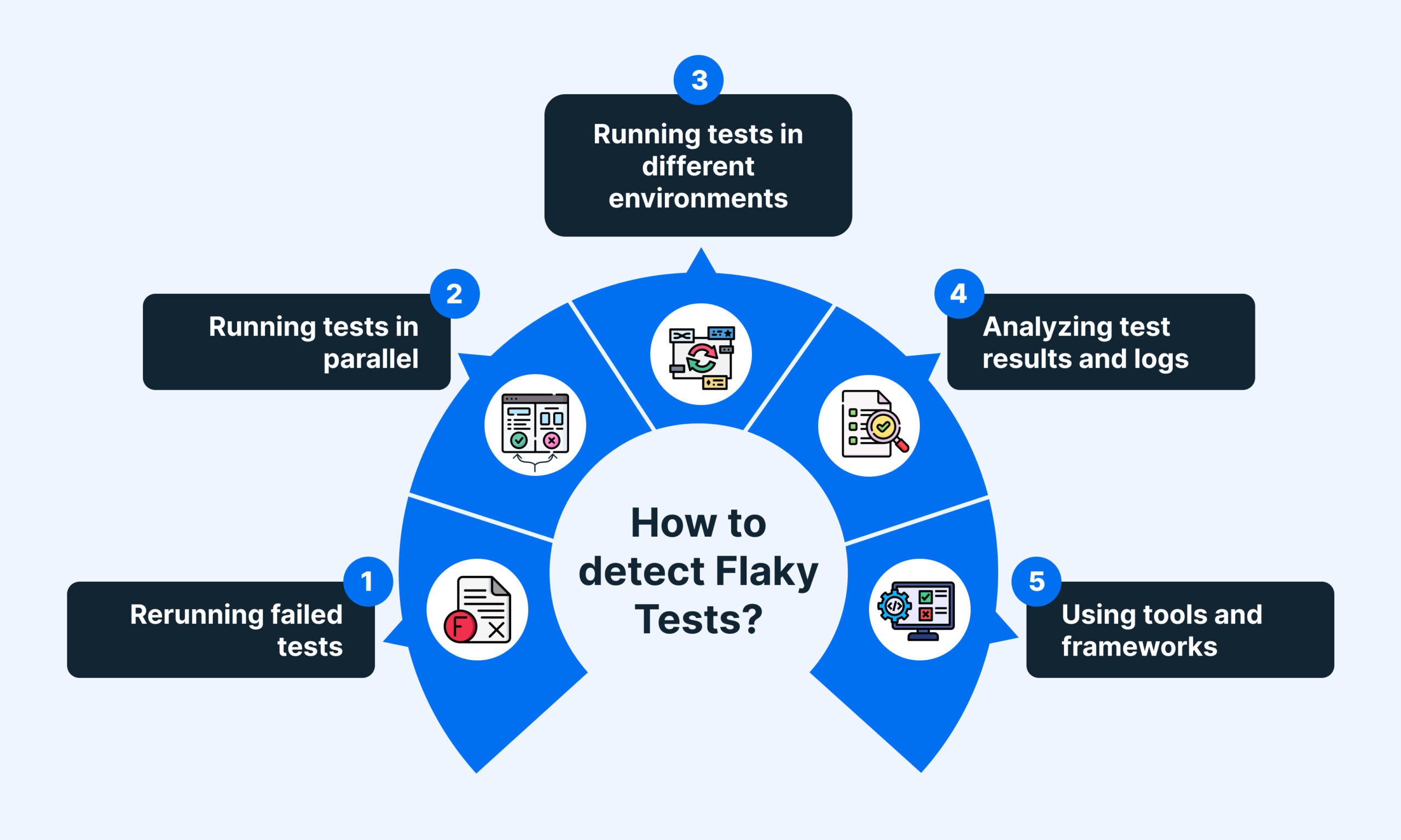

Detecting flaky tests can be challenging, as they may not manifest themselves consistently or frequently. However, some possible ways to detect flaky tests are:

- Rerunning failed tests: If a test fails once but passes on subsequent runs without any changes in the code or the environment, it is likely a flaky test.

- Running tests in parallel: If a test passes when run alone but fails when run with other tests in parallel, it may indicate a race condition or a test order dependency issue.

- Running tests in different environments: If a test passes in one environment but fails in another with different settings, configurations, or resources, it may suggest an unstable environment issue.

- Analyzing test results and logs: If a test produces inconsistent or ambiguous results or logs across different runs, it may imply a non-deterministic behavior or an insufficient assertion issue.

- Using tools and frameworks: There are various frameworks and tools like BrowserStack Test Observability available that can help detect and diagnose flaky tests automatically.
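The rerun approach can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular tool works: `classify_by_rerun` and `make_intermittent_test` are hypothetical helpers, and a "test" here is simply a callable that raises on failure.

```python
from collections import Counter


def classify_by_rerun(test, runs=10):
    """Run the same test repeatedly and label it by its mix of outcomes."""
    outcomes = Counter()
    for _ in range(runs):
        try:
            test()
            outcomes["pass"] += 1
        except Exception:
            outcomes["fail"] += 1
    if outcomes["pass"] and outcomes["fail"]:
        return "flaky"
    return "stable-pass" if outcomes["pass"] else "stable-fail"


def make_intermittent_test(fail_every=3):
    """Build a stand-in test that fails on every Nth call (simulated flakiness)."""
    calls = {"count": 0}

    def test():
        calls["count"] += 1
        assert calls["count"] % fail_every != 0, "simulated intermittent failure"

    return test


def always_fails():
    assert False, "genuine, reproducible failure"


print(classify_by_rerun(make_intermittent_test()))
print(classify_by_rerun(lambda: None))
print(classify_by_rerun(always_fails))
```

A mixed result only shows that the test is unstable. Finding out why still requires looking at logs, ordering, and the environment.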

How to Fix Flaky Tests?

Fixing flaky tests can be difficult, as they may require a deep understanding of the code, the test, and the system under test. However, here are some general methods to fix flaky tests:

1. Isolate the test

- Make sure the test does not depend on or affect any external factors or other tests.

- Use mocks, stubs, or fakes to simulate or replace dependencies. Use dedicated or disposable resources for each test run.

- Reset or clean up the state before and after each test.
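As one possible illustration of isolation, the sketch below uses Python's standard `unittest` and `unittest.mock` modules. The functions `fetch_greeting` and `save_report` are hypothetical code under test, invented for this example. The network call is replaced with a stub, and each test gets its own disposable directory that is cleaned up afterwards.

```python
import tempfile
import unittest
from pathlib import Path
from unittest import mock


def fetch_greeting(http_get):
    """Hypothetical code under test: builds a greeting from a remote name."""
    return f"Hello, {http_get('/name')}!"


def save_report(directory, text):
    """Hypothetical code under test: writes a report file and returns its path."""
    path = Path(directory) / "report.txt"
    path.write_text(text)
    return path


class IsolatedTests(unittest.TestCase):
    def setUp(self):
        # A fresh, private directory per test; removed again on cleanup.
        self._tmp = tempfile.TemporaryDirectory()
        self.addCleanup(self._tmp.cleanup)

    def test_greeting_uses_stubbed_dependency(self):
        # The stub stands in for a real HTTP client, so no network is involved.
        http_get = mock.Mock(return_value="Ada")
        self.assertEqual(fetch_greeting(http_get), "Hello, Ada!")
        http_get.assert_called_once_with("/name")

    def test_report_written_to_private_directory(self):
        path = save_report(self._tmp.name, "ok")
        self.assertEqual(path.read_text(), "ok")
```

Saved as a module, this can be run with `python -m unittest`. Because neither test reads or writes anything outside its own stub and temporary directory, the order in which they run does not matter.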

2. Eliminate the randomness

- Make sure the test does not rely on any random or unpredictable elements.

- Use fixed or predefined values for dates, times, UUIDs, or user input.

- Use deterministic algorithms or methods for generating or processing data.
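One common way to remove randomness is to pass the clock and the random source into the code instead of letting it read them globally. The sketch below assumes a hypothetical `make_order_id` function. The test supplies a fixed timestamp and a seeded `random.Random`, so every run sees identical inputs.

```python
import random
from datetime import datetime, timezone


def make_order_id(now, rng):
    """Hypothetical function under test: date prefix plus a random suffix."""
    return f"{now:%Y%m%d}-{rng.randint(1000, 9999)}"


def test_order_id_is_deterministic():
    # Fixed timestamp instead of datetime.now().
    fixed_now = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
    # Two generators with the same seed produce the same sequence.
    first = make_order_id(fixed_now, random.Random(42))
    second = make_order_id(fixed_now, random.Random(42))
    assert first == second
    assert first.startswith("20240115-")


test_order_id_is_deterministic()
```

Production code can still pass `datetime.now(timezone.utc)` and an unseeded generator. Only the test pins them down.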

3. Increase the robustness of the test

- Make sure the test can handle different scenarios and conditions.

- Use retries, timeouts, or waits to deal with network or performance issues.

- Use assertions that check for ranges, patterns, or approximations instead of exact values.

- Use assertions that verify all the relevant aspects of the expected behavior or outcome.
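The sketch below illustrates two of these ideas under simple assumptions: a hypothetical `wait_until` helper that polls a condition up to a timeout instead of sleeping for a fixed time, and an approximate comparison with the standard library's `math.isclose` instead of exact floating-point equality.

```python
import math
import time


def wait_until(condition, timeout=2.0, interval=0.05):
    """Poll condition() until it is true or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def test_job_eventually_finishes():
    started = time.monotonic()

    def job_done():
        # Stand-in for a slow operation that completes after about 0.1 s.
        return time.monotonic() - started >= 0.1

    assert wait_until(job_done, timeout=2.0)


def test_average_is_close():
    average = sum([0.1, 0.2, 0.3]) / 3
    # Exact equality with 0.2 is fragile because of floating-point rounding.
    assert math.isclose(average, 0.2, rel_tol=1e-9)


test_job_eventually_finishes()
test_average_is_close()
```

Polling returns as soon as the condition holds, so it is usually both faster and more reliable than a fixed sleep. Retries and generous timeouts can also hide real defects, so they are best paired with a report of how often they were needed.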

4. Simplify the logic of the test script

- Make sure the test is clear, concise, and correct.

- Use descriptive names, comments, and logs to explain the purpose and functionality of the test.

- Use modular, reusable, and maintainable code and logic.

- Avoid bugs, typos, or errors in the code or the logic.

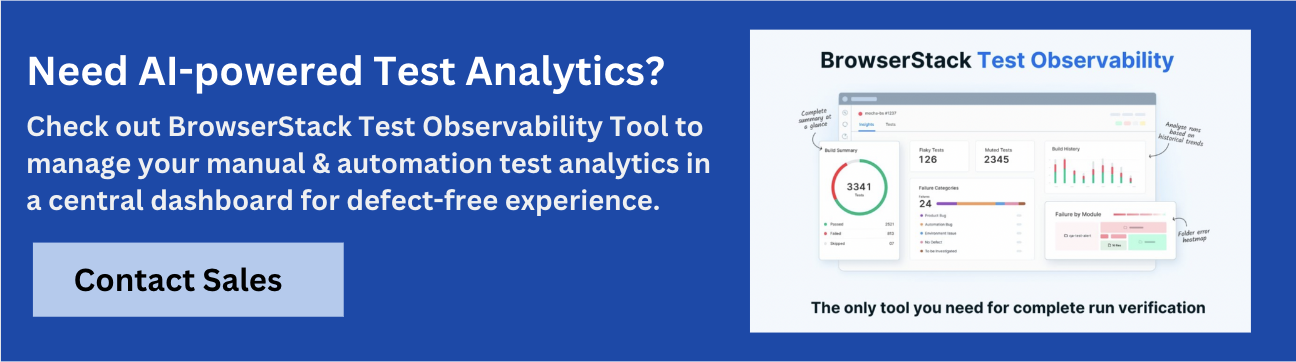

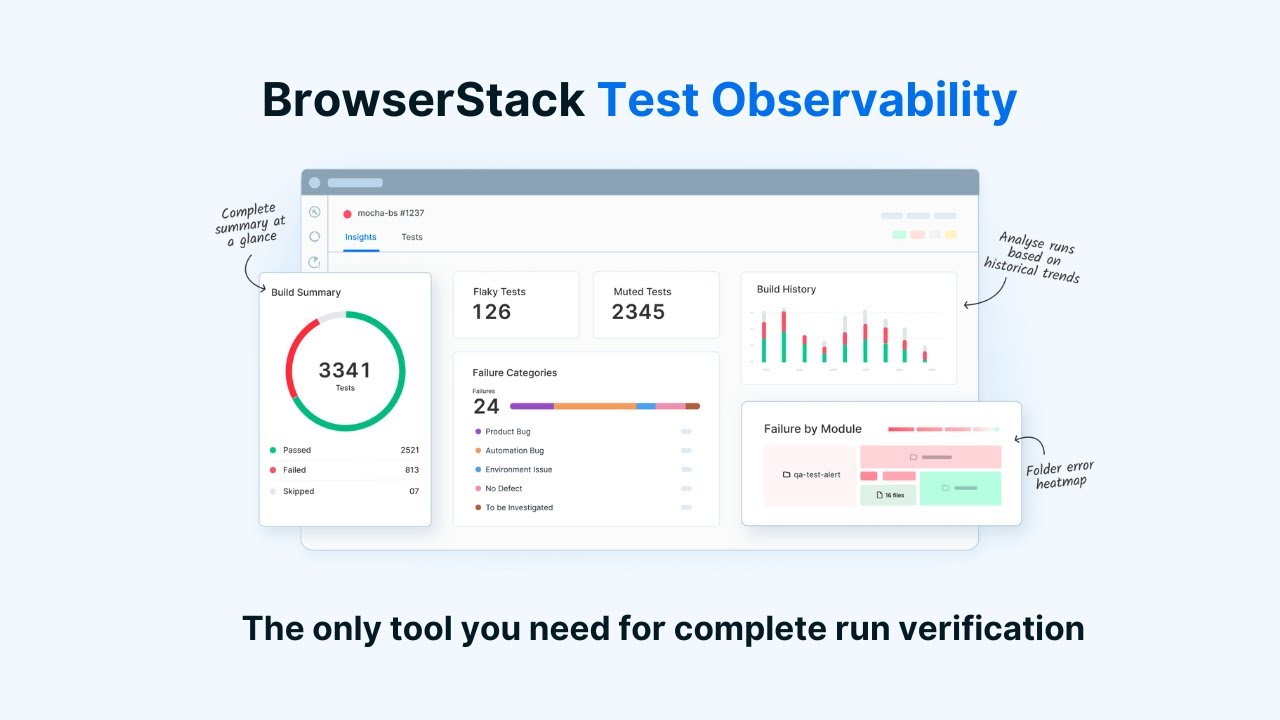

How to manage Flaky Tests using BrowserStack Test Observability

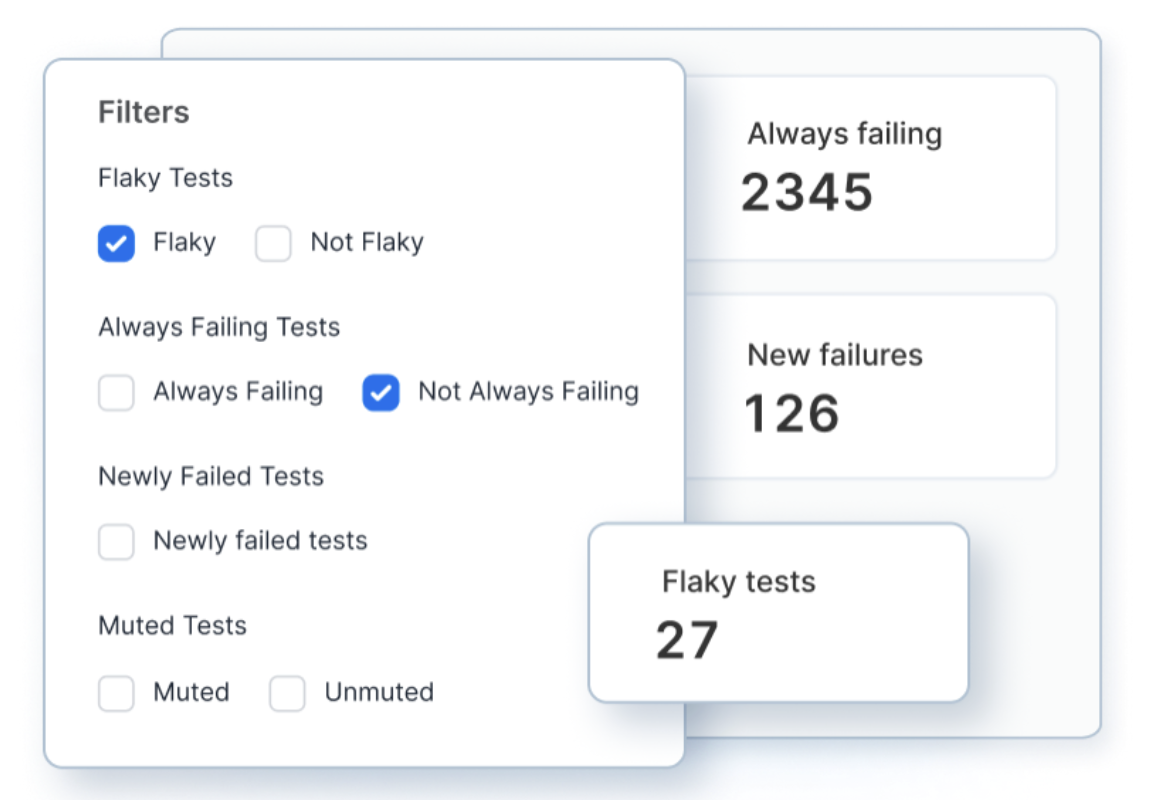

BrowserStack Test Observability is a test reporting and debugging solution that helps separate real test failures from noise by auto-tagging failures as:

- Flaky

- Always Failing

- New Failures

- Performance Anomaly

It supports a wide range of test frameworks out of the box.

To manage Flaky Tests seamlessly, BrowserStack Test Observability provides you with:

- Smart tags that automatically tag failures as flaky, always failing, or new failures

- AI-based Auto Failure Analysis that automatically maps each failure into customizable categories like Product Bug, Environment Issue, Automation Bug, and more

- Timeline Debugging that allows you to debug with every single log in one pane. You can even travel back to any previous test execution to gain more context.

- Out-of-the-box graphs and reports that track the stability and performance of your test suite

- Re-run tests directly on CI from the Test Observability dashboard

- Integration with Jira to file and track bugs

- Customizable dashboards that allow you to slice and dice your test run data across different projects and runs

- Unique Error Analysis that helps you debug faster by identifying common errors causing multiple failures in a build

- Customizable Alerts that notify you in real-time when certain quality rules are compromised in your test suite

With BrowserStack Test Observability, you can save time, reduce effort, and improve the quality of your automation. You can also monitor your test suite metrics, set up custom alerts, and collaborate with your team more effectively.

Don’t let flaky tests slow you down.

Best Practices to Reduce Flaky Tests

Flaky tests are inevitable in software testing, but they can be reduced and prevented by following some best practices. Some of them are:

- Write tests that are clear, concise, correct, and consistent.

- Follow coding standards and conventions. Use tools and frameworks that support quality testing.

- Review tests for correctness, completeness, and coverage.

- Refactor tests to improve readability, maintainability, and performance.

- Remove or replace obsolete, redundant, or duplicate tests.

- Run tests on every code change, commit, or merge.

- Run tests in different environments, configurations, and scenarios.

- Run tests in parallel or distributed mode to increase speed and efficiency.

- Report test results and failures in a timely and transparent manner.

- Use dashboards, charts, or graphs to visualize test data and trends.

- Use notifications, alerts, or tickets to communicate test issues and actions.

- Analyze flaky test failures and root causes.

- Document flaky test cases and solutions.

- Share flaky test learnings and best practices with the team.

Conclusion

Flaky tests are a common and costly problem in software testing that can affect software quality and development. Flaky tests can be detected, fixed, and managed by using various techniques, tools, and best practices. BrowserStack Test Observability is a feature that can help users monitor, debug, and optimize their tests on BrowserStack effectively.

Flaky tests are not easy to deal with, but they are not impossible to overcome either. By following the guidelines and tips in this article, users can reduce flaky tests in their projects and achieve reliable and trustworthy testing outcomes.