Web Scraping using Beautiful Soup

By Sakshi Pandey, Community Contributor - December 12, 2024

The web is packed with valuable data, but manually gathering it is time-consuming. Web scraping automates this process, and Python’s Beautiful Soup makes it easy.

This guide will show you how to extract, parse, and manipulate web data efficiently with Beautiful Soup, which will help you turn online information into actionable insights.

What is Web Scraping and Why is it Important?

Web scraping is the automated extraction of information from a website or web application. Whereas screen scraping captures only the data visible on the rendered page, web scraping goes deeper and retrieves the underlying HTML.

Web scraping can be used to extract all the data from a website or only the specific information the user requires. For example, instead of scraping an article together with all of its reviews and ratings, a user may scrape only the comments in order to gauge the general sentiment towards the article.
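As a rough illustration of scraping only the part of a page you need, the sketch below pulls just the comments out of a small HTML snippet. The markup and the "comment" class name are invented for this example; a real site will have its own structure.

```python
from bs4 import BeautifulSoup

# Hypothetical page fragment: an article, a rating, and two reader comments.
html = """
<article><h1>Sample article</h1><p>Article body text.</p></article>
<div class="rating">4.5</div>
<div class="comment">Great read, very helpful.</div>
<div class="comment">A bit too long for my taste.</div>
"""

soup = BeautifulSoup(html, "html.parser")

# Keep only the elements carrying the (assumed) "comment" class.
comments = [
    div.get_text(strip=True)
    for div in soup.find_all("div", class_="comment")
]
print(comments)
```

The article body and the rating are parsed but never collected, which is the point: only the comment text is kept for later analysis.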

Automated web scraping speeds up data gathering and allows users to collect large amounts of data, which can then be analyzed for insights. The current emphasis on data analysis, sentiment analysis, and machine learning has made web scraping a valuable tool for IT professionals.

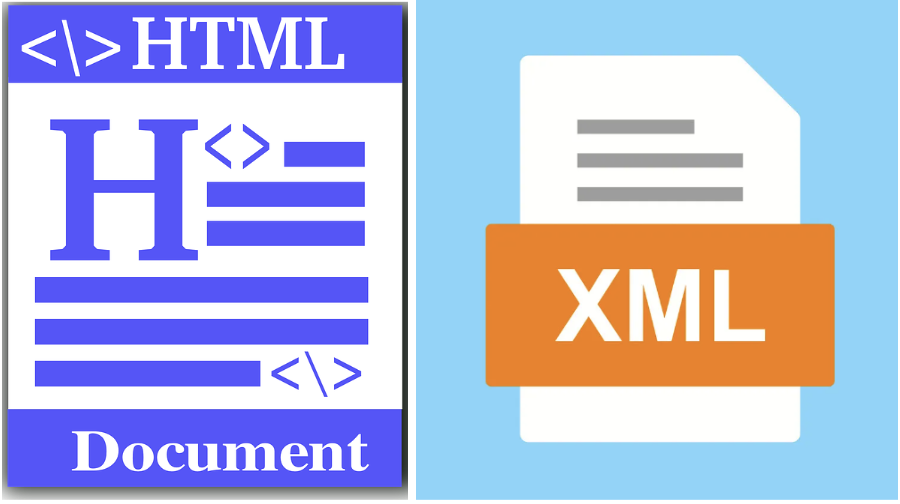

What is BeautifulSoup?

The name BeautifulSoup explains the purpose of this package well. It separates and pulls out the data a user needs from the "soup" of HTML and XML files by building a tree of Python objects. Data can then be retrieved from that tree through objects such as Tag and NavigableString.
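To make the idea of a tree of Python objects more concrete, here is a minimal sketch that parses a tiny, made-up HTML string and inspects the object types BeautifulSoup produces. The HTML content is an assumption chosen only for illustration.

```python
from bs4 import BeautifulSoup
from bs4.element import NavigableString, Tag

html = "<html><body><h1 id='top'>Hello</h1><p>First <b>bold</b> text</p></body></html>"
soup = BeautifulSoup(html, "html.parser")

heading = soup.h1              # a Tag object representing <h1>
heading_text = heading.string  # a NavigableString holding the text inside it

print(type(heading).__name__, heading.name, heading["id"])
print(type(heading_text).__name__, str(heading_text))

# The children of <p> alternate between text nodes and tags.
kinds = [type(child).__name__ for child in soup.p.children]
print(kinds)
```

A Tag exposes the element's name and attributes, while a NavigableString behaves much like an ordinary Python string that also knows its position in the tree.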

How to do Web Scraping with Beautiful Soup?

Before walking through web scraping with Selenium, Python, and Beautiful Soup, make sure all the prerequisites are in place.

Pre-Requisites:

1. Set up a Python Environment. This tutorial uses Python 3.11.4.

2. Install Selenium. The pip package installer is the simplest way to do this and can be run directly from a conda terminal, Linux terminal, or Anaconda Prompt.

    pip install selenium

3. Install BeautifulSoup with the pip package installer as well.

    pip install beautifulsoup4

4. Download the latest WebDriver for the browser you wish to use, or install webdriver_manager to fetch it for you.

    pip install webdriver_manager

The versions of the aforementioned packages used for this tutorial are:

- BeautifulSoup 4.12.2

- Pandas 2.0.2

- Selenium 4.10.0

- Webdriver_Manager 3.8.6

Steps for Web Scraping with Beautiful Soup

Follow the steps below to perform web scraping with Beautiful Soup:

Step 1: Import the packages required for the script.

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC
    from bs4 import BeautifulSoup
    import pandas as pd
    import re
    from webdriver_manager.chrome import ChromeDriverManager

Selenium is required to automate the Chrome browser. Because Selenium drives the browser through the WebDriver protocol, the webdriver_manager package is used to obtain a ChromeDriver compatible with the installed browser version. Selenium is also what loads the webpage and retrieves its HTML.

BeautifulSoup is needed to parse the HTML of the webpage. The re module is imported so that regular expressions can be used to match the keywords entered by the user. Pandas is used to write the keywords, the matches found, and the number of matches to an Excel file (writing .xlsx files with pandas also requires an Excel engine such as openpyxl).

Step 2: Obtain the version of ChromeDriver compatible with the browser being used.

driver=webdriver.Chrome(service=Service(ChromeDriverManager().install()))

Step 3: Take the user’s input for the URL of a webpage to scrape.

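    val = input("Enter a url:")
    wait = WebDriverWait(driver, 10)
    driver.get(val)
    # Wait (up to 10 seconds) until the browser reports the requested URL.
    wait.until(EC.url_to_be(val))
    get_url = driver.current_url
    # Read the HTML only once the browser is on the requested page.
    if get_url == val:
        page_source = driver.page_source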

For this example, the user input is: https://www.browserstack.com/guide/cross-browser-testing-on-wix-websites

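The driver loads this URL, and the script then waits for the browser’s current URL to match the one entered before proceeding, so that the page source is read only after navigation has completed. If the site redirects to a different URL, this wait times out after 10 seconds.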

Step 4: Use BeautifulSoup to parse the HTML scraped from the webpage.

soup = BeautifulSoup(page_source,features="html.parser")

A soup object is created from the HTML scraped from the webpage.
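The sketch below shows what can be done with a soup object once it exists. It uses a small, invented HTML string as a stand-in for driver.page_source so that it runs without a browser.

```python
from bs4 import BeautifulSoup

# Stand-in for driver.page_source; the content is invented for illustration.
page_source = """
<html><head><title>Demo page</title></head>
<body><h1>Cross browser testing</h1>
<p>Testing on real browsers matters.</p>
<p>Automated testing saves time.</p></body></html>
"""

soup = BeautifulSoup(page_source, features="html.parser")

# Navigate the parsed tree: the page title and the text of every paragraph.
title = soup.title.string
paragraphs = [p.get_text() for p in soup.find_all("p")]

print(title)
print(paragraphs)
```

The "html.parser" argument selects the parser that ships with Python, so no extra parsing library needs to be installed.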

Step 5: Parse the soup for User Input Keywords.

    multiple = input("Would you like to enter multiple keywords?(Y/N)")
    if multiple == "Y":
        keywords = []
        matches = []
        len_match = []
        num_keyword = input("How many keywords would you like to enter?")
        count = int(num_keyword)
        while count != 0:
            keyword = input("Enter a keyword to find instances of in the article:")
            keywords.append(keyword)
            # Find every text node in the body that matches the keyword pattern.
            match = soup.body.find_all(string=re.compile(keyword))
            matches.append(match)
            len_match.append(len(match))
            count -= 1
        # One row per keyword: the keyword, its match count, and its matches.
        df = pd.DataFrame({"Keyword": pd.Series(keywords),
                           "Number of Matches": pd.Series(len_match),
                           "Matches": pd.Series(matches)})
    elif multiple == "N":
        keyword = input("Enter a keyword to find instances of in the article:")
        matches = soup.body.find_all(string=re.compile(keyword))
        len_match = len(matches)
        df = pd.DataFrame({"Keyword": pd.Series(keyword),
                           "Number of Matches": pd.Series(len_match),
                           "Matches": pd.Series(matches)})
    else:
        print("Error, invalid character entered.")

The user is first asked whether the webpage should be searched for multiple keywords. If so, each keyword is read in turn, its matches are collected from the soup object, and the number of matches is counted. If not, the same operations are performed for a single keyword. In both cases the results are stored in a dataframe. Any input other than Y or N produces an error message.
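The keyword search can also be sketched as a small reusable function. This is an illustrative variant rather than the script used in this tutorial: the helper name find_keyword_matches is hypothetical, the HTML is invented, and re.escape is used so that each keyword is treated as literal text instead of a regex pattern.

```python
import re
from bs4 import BeautifulSoup

def find_keyword_matches(soup, keywords):
    """Return one result row per keyword (hypothetical helper)."""
    rows = []
    for keyword in keywords:
        # re.escape makes characters such as "." or "+" match literally.
        pattern = re.compile(re.escape(keyword))
        # Each match is a whole text node that contains the keyword.
        matches = [str(text) for text in soup.body.find_all(string=pattern)]
        rows.append({
            "Keyword": keyword,
            "Number of Matches": len(matches),
            "Matches": matches,
        })
    return rows

html = (
    "<html><body><h1>Testing guide</h1>"
    "<p>Manual testing is slow.</p><p>Automation helps.</p></body></html>"
)
soup = BeautifulSoup(html, "html.parser")
rows = find_keyword_matches(soup, ["testing", "Automation"])
print(rows)
```

Note that the search is case-sensitive, so "Testing guide" is not counted for the keyword "testing", and that the count is the number of matching text nodes rather than the number of times the word occurs. The returned list of dictionaries can be passed to pd.DataFrame to get a table like the one built above.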

Step 6: Store the data collected into an Excel file.

    df.to_excel("Keywords.xlsx", index=False)
    driver.quit()

The dataframe is written to an Excel file titled Keywords.xlsx, and the driver is then closed.

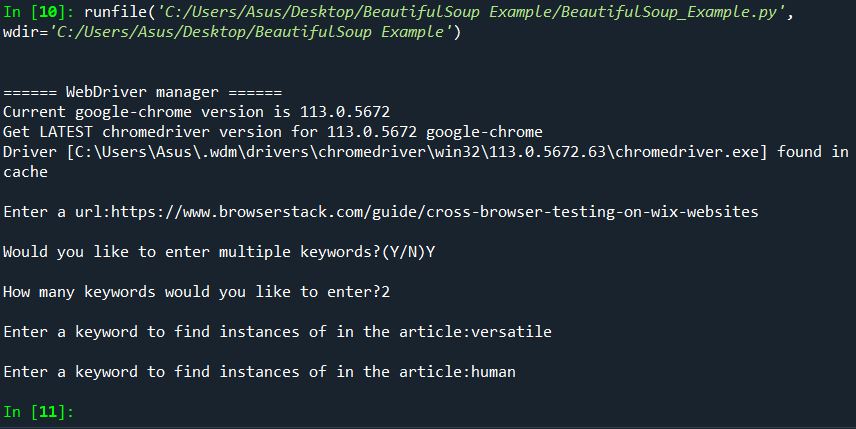

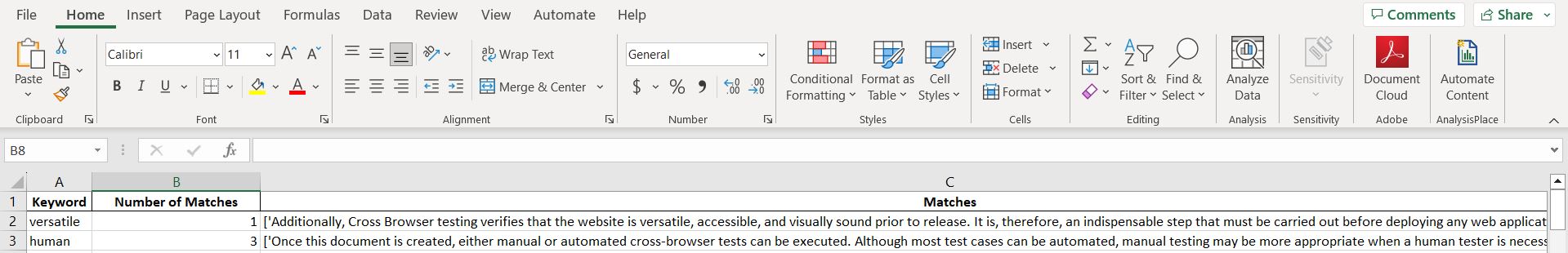

Output:

Excel File Output:

The keywords, the matches found for each keyword, and the number of matches can be viewed in the Excel file.
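If an Excel file is not strictly needed, the same kind of results could be written as CSV using only the standard library. This is an alternative to the pandas approach used in this tutorial, not part of it; the sample rows and the rows_to_csv helper are made up for illustration.

```python
import csv
import io

# Invented sample results in the same shape as the tutorial's columns.
rows = [
    {"Keyword": "testing", "Number of Matches": 2,
     "Matches": ["Manual testing", "Automated testing"]},
    {"Keyword": "browser", "Number of Matches": 1,
     "Matches": ["Cross browser checks"]},
]

def rows_to_csv(rows):
    """Serialize result rows to CSV text (hypothetical helper)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["Keyword", "Number of Matches", "Matches"]
    )
    writer.writeheader()
    for row in rows:
        # Join the list of matches so it fits into a single cell.
        writer.writerow({**row, "Matches": " | ".join(row["Matches"])})
    return buffer.getvalue()

csv_text = rows_to_csv(rows)
print(csv_text)
```

To save the result to disk, the same writer can be pointed at a file opened with open("Keywords.csv", "w", newline="") instead of the in-memory buffer.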

Web Scraping Ethically

Although web scraping is not illegal in itself, it can raise ethical and legal issues. Scraping copyrighted material can amount to copyright infringement, and downloading information that is clearly meant to be private is an ethical violation. Many academic journals and newspapers, for example, require paid subscriptions from users who wish to access their content.

Downloading these articles and journal papers without authorization is a violation and could lead to serious consequences. Web scraping can also cause technical problems, such as overloading a server with requests so that the site slows down or even runs out of resources and crashes.

Therefore, it’s vital to communicate with publishers or website owners to ensure that you’re not violating any policies or rules while scraping their content.
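One common way a site publishes its scraping rules is a robots.txt file. The sketch below checks URLs against such rules with Python's standard urllib.robotparser module. The robots.txt content, the example.com URLs, and the "my-scraper" user agent are all invented for illustration, and checking robots.txt complements rather than replaces asking the site owner.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt rules, invented for illustration.
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 2",
]

parser = RobotFileParser()
parser.parse(robots_lines)

def allowed(url, user_agent="my-scraper"):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)

# Seconds to pause between requests; fall back to 1 if none is specified.
delay = parser.crawl_delay("my-scraper") or 1

print(allowed("https://example.com/guide/page"))
print(allowed("https://example.com/private/data"))
print(delay)
```

In a real scraper, the rules would be loaded from the site itself with set_url and read, and a call such as time.sleep(delay) between requests would help avoid overloading the server.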

Why should you run Python Tests on Real Devices?

Running Python tests on real devices ensures your application functions correctly under real-world conditions, offering several critical advantages like:

- Identify device-specific issues like hardware or OS differences.

- Test apps in real-world conditions, including touch and gestures.

- Measure real performance metrics like battery, CPU, and memory.

- Test under varying network conditions (Wi-Fi, 4G, 5G, etc.).

- Ensure features like push notifications and GPS work correctly.

- Validate app installation, updates, and uninstallation.

- Comply with app store guidelines requiring real-device testing.

- Debug issues using real-time logs and crash reports.

Why choose BrowserStack to run Python Tests?

Running Python tests on BrowserStack Automate offers several advantages:

- Extensive Device and Browser Coverage: Availability of 3500+ real device-OS-browser combinations ensures your application performs consistently across different environments.

- Parallel Test Execution: Accelerate your testing process by running multiple tests simultaneously, reducing overall test duration.

- CI/CD Integration: Seamlessly integrate with popular CI/CD tools like Jenkins and CircleCI to automate testing and catch issues early in development.

- Browser Compatibility: Run tests on multiple browsers simultaneously to ensure consistent functionality and appearance for users in diverse environments.

- Seamless Integration: BrowserStack supports various Python testing frameworks, allowing for easy integration into your existing test suites.

- Advanced Debugging Tools: Utilize features like screenshots, video recordings, and detailed logs to quickly identify and resolve issues.

- Local Testing Capabilities: Test applications hosted on internal or staging environments securely using BrowserStack’s Local Testing feature.

- Scalability: Effortlessly scale your testing infrastructure without the need to maintain physical devices or complex setups.

Conclusion

Web scraping with Python and Beautiful Soup empowers you to extract and process valuable data from the web efficiently. By mastering these tools, you can automate data collection, streamline workflows, and gain actionable insights. Beautiful Soup’s simplicity and versatility make it an essential library for developers looking to unlock the potential of web data.

Take your web scraping projects to the next level with BrowserStack Automate. Test your web scraping Python scripts on real devices and browsers, ensuring compatibility and performance across diverse environments. With features like parallel testing, CI/CD integration, and advanced debugging tools, Automate helps you deliver reliable and efficient web data extraction solutions every time.