Web scraping has become a vital tool for data extraction, helping businesses and researchers gather information efficiently. Selenium, a widely used web automation tool, is one of the go-to solutions for scraping dynamic websites.

However, with the rise of advanced anti-bot detection systems, basic Selenium scripts are often detected and blocked. This is where Selenium Stealth Mode helps, improving the chances of successful scraping in these challenging environments.

Overview

What is Selenium Stealth Mode?

Selenium Stealth Mode modifies browser behavior to mimic real user interactions, helping bypass anti-bot detection systems during web scraping.

Benefits of Selenium Stealth Mode

- Reduces the likelihood of detection and blocking.

- Reduces how often CAPTCHAs and other anti-bot checks are triggered.

- Enables smoother, less interrupted web scraping.

How Does Selenium Stealth Mode Work?

- Tweaks browser attributes like headers and user-agent strings.

- Disables WebDriver flags to mask automation.

- Can be combined with realistic user activity patterns (mouse movements, clicks, pauses) scripted through Selenium's own APIs.

- Reduces traceability by avoiding identifiable browser signatures.

This guide walks you through Selenium stealth mode, its benefits, challenges, and ethical considerations, ensuring you scrape responsibly and effectively.

What is Selenium Stealth Mode?

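Selenium Stealth Mode modifies browser behavior to make automation scripts mimic real user interactions. It is not a built-in Selenium feature. In Python it is usually provided by the third-party selenium-stealth package, which works with Chrome. It helps avoid detection by anti-bot systems, enabling smoother navigation and data extraction from websites with stringent security protocols.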

Anti-bot systems use several techniques to detect bots and automation tools. Examples include detecting headless browsing modes, flagging missing or inconsistent HTTP headers, checking for WebDriver-specific attributes in the browser environment, and monitoring request frequency.

Stealth mode addresses these weaknesses by tweaking browser configurations to appear more human-like.

Challenges with Bot Detection during Web Scraping

First, understand how anti-bot systems detect automation tools. Anti-bot systems are designed to identify and block automated tools by analyzing inconsistencies in browser behavior and network requests.

This makes them a significant challenge for web scraping automation tools like Selenium. Here are the main methods they use, each of which an automation script needs to handle:

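- Headless Browsing Detection: Anti-bot systems look for signs of a headless or automated browser. These include the HeadlessChrome token in the User-Agent string, an empty plugin list, missing visual elements, and the navigator.webdriver flag.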

- Inconsistent HTTP Headers: Missing or generic headers like outdated User-Agent strings raise suspicion.

- Unusual Interaction Patterns: Instant clicks, linear navigation, or lack of mouse movements signal bot activity.

- Browser Fingerprinting: Parameters like WebGL details, screen resolution, and installed plugins help identify bots.

- Request Frequency: High request rates or extended sessions from the same IP address are flagged as suspicious.

- IP and Geolocation Tracking: Repetitive requests from a single IP or mismatched geolocation can indicate bot behavior.

- JavaScript and DOM Manipulation: Bots struggling with dynamic content or interacting with hidden elements may get blocked.

- CAPTCHA Challenges: Bots are thwarted by image or text-based CAPTCHAs requiring human interaction.
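The sketch below shows how a detection script might score a few of these signals. It assumes you collect the values in the page with Selenium's `driver.execute_script()` and pass the returned dictionary to `automation_signals()`. Both the `PROBE_JS` string and `automation_signals()` are hypothetical and illustrative. Real anti-bot systems combine many more signals than this.

```python
# JavaScript evaluated in the page via driver.execute_script(PROBE_JS);
# Selenium converts the returned object into a Python dict.
PROBE_JS = """
return {
    webdriver: navigator.webdriver,
    userAgent: navigator.userAgent,
    pluginCount: navigator.plugins.length,
    languages: navigator.languages
};
"""


def automation_signals(props: dict) -> list[str]:
    """Return the names of simple automation signals found in probe results.

    Hypothetical helper: it checks only a few well-known tells.
    """
    signals = []
    # navigator.webdriver is true in browsers controlled through WebDriver.
    if props.get("webdriver") is True:
        signals.append("navigator.webdriver")
    # Headless Chrome's default User-Agent typically contains "HeadlessChrome".
    if "HeadlessChrome" in (props.get("userAgent") or ""):
        signals.append("headless user agent")
    # Headless browsers have historically reported an empty plugin list.
    if props.get("pluginCount", 0) == 0:
        signals.append("no plugins")
    # An empty language list is unusual for a real user's browser.
    if not props.get("languages"):
        signals.append("no languages")
    return signals
```

For example, `automation_signals(driver.execute_script(PROBE_JS))` on a plain headless session would likely report several signals. The same call made after applying stealth should report fewer.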


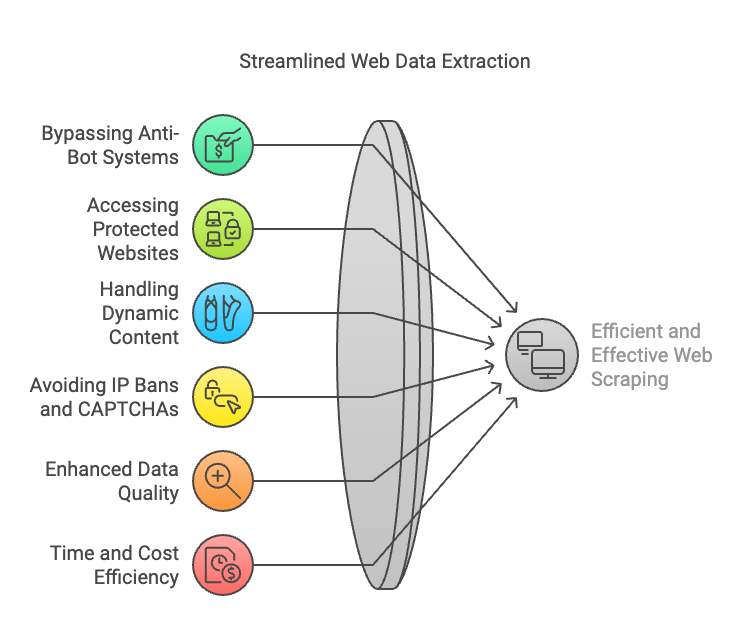

Benefits of using Stealth Mode in Selenium for Web Scraping

Stealth mode in Selenium provides critical advantages when scraping modern websites, especially those with anti-bot protections. Below are a few of the advantages of Stealth Mode in Web Scraping:

- Bypassing Anti-Bot Systems: Stealth mode tweaks browser attributes like headers, user-agent strings, and Selenium WebDriver flags, making automated scripts appear like real user activity. This reduces the likelihood of detection and blocking.

- Accessing Protected Websites: Many websites block headless browsers or Selenium scripts. Stealth mode enables access to such sites by masking detectable signals, such as the navigator.webdriver flag and the WebGL vendor and renderer values used in browser fingerprinting.

- Handling Dynamic Content: Because the session is less likely to be blocked, Selenium can load and interact with AJAX-heavy or dynamically loaded content. Such content is common on e-commerce, travel, and social media platforms.

- Avoiding IP Bans and CAPTCHAs: Concealing automation footprints reduces CAPTCHA triggers and the risk of IP blocks, especially when combined with user-agent and IP rotation.

- Enhanced Data Quality: Ensures that scraping tasks can extract complete and accurate data without disruptions caused by detection mechanisms.

- Time and Cost Efficiency: Reduces manual intervention and troubleshooting, making web scraping workflows faster and more reliable.

Setting up Selenium Stealth Mode

Setting up Selenium Stealth Mode is essential for bypassing detection mechanisms during web scraping, ensuring smoother and uninterrupted data extraction. Here is how you can set it up:

Prerequisites

Ensure the following are installed:

- Python

- Selenium

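- Google Chrome and ChromeDriver (Selenium 4.6 and later can download a matching ChromeDriver automatically through Selenium Manager)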

Step-by-Step Setup Guide

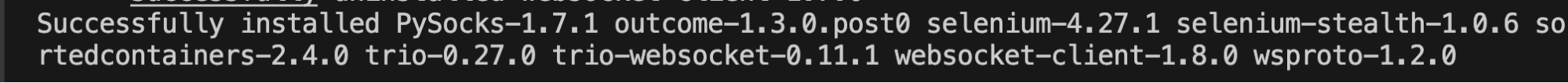

1. Install the Selenium Stealth Library

```bash
pip install selenium-stealth
```

A successful installation should produce output like the following:

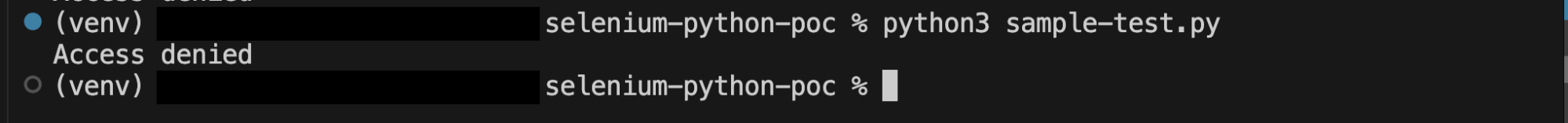

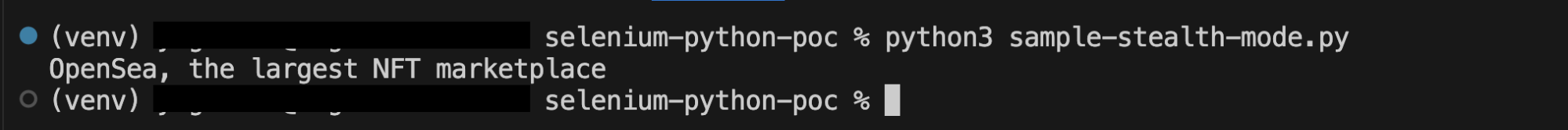

2. Create and run a simple Selenium script to open a site with anti-bot detection

```python
from selenium import webdriver
from selenium_stealth import stealth

# Create a ChromeOptions object
options = webdriver.ChromeOptions()
options.add_argument('--headless')

# Set up WebDriver
driver = webdriver.Chrome(options=options)

# Open a webpage
driver.get("https://opensea.io/")
print(driver.title)
driver.quit()
```

Access will be denied because the site detects headless browsing.

3. Integrate Stealth Mode with Selenium

Now, add stealth to bypass this check.

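```python
# Apply stealth mode right after creating the driver and before driver.get(),
# so the patches are in place for the first page load
stealth(
    driver,
    languages=["en-US", "en"],
    vendor="Google Inc.",
    platform="Win32",
    webgl_vendor="Intel Inc.",
    renderer="Intel Iris OpenGL Engine",
    fix_hairline=True,
)
```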

With stealth applied, automation signals such as navigator.webdriver and the headless User-Agent are masked. The script can now access the site in headless mode as well.

How does Selenium Stealth Mode work?

Selenium Stealth Mode modifies browser behavior to make automated interactions harder to distinguish from real user activity. By tailoring browser attributes, headers, and network configurations, it helps bypass anti-bot systems that look for automation tools.

These adjustments allow smoother navigation and scraping on websites with stringent anti-bot mechanisms.

Here are some of the Stealth mode techniques to avoid detection:

1. Modifying Browser Headers

Anti-bot systems often flag requests with missing or inconsistent HTTP headers. Stealth mode overrides values such as the User-Agent string, the Accept-Language header, and the reported platform so that they match a typical browser.

Code Example:

```python
stealth(
    driver,
    languages=["en-US", "en"],
    vendor="Google Inc.",
    platform="Win32",
    webgl_vendor="Intel Inc.",
    renderer="Intel Iris OpenGL Engine",
    fix_hairline=True,
)
```

Explanation:

The languages, vendor, and platform parameters mimic real browser behaviors, ensuring headers align with typical user profiles.

2. Avoiding WebDriver Detection

Modern websites use JavaScript to detect the presence of WebDriver attributes, such as navigator.webdriver. Stealth mode disables these flags to avoid detection.

Code Example:

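```python
from selenium import webdriver
from selenium_stealth import stealth

driver = webdriver.Chrome()
# stealth() hides the navigator.webdriver flag as part of its default patches
stealth(driver)
driver.get("https://opensea.io/")
print(driver.execute_script("return navigator.webdriver"))  # Should print None
driver.quit()
```

Explanation:

Calling stealth() before the first page load injects a script that hides navigator.webdriver, the flag that typically indicates automation. The check therefore returns None instead of True, and the browser appears to be operated manually.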


3. Enabling User-Agent Rotation

Using a static User-Agent can make automation scripts predictable and easy to detect. Rotating User-Agent strings, for example with the fake_useragent library, lets each session emulate a different device or browser.

Code Example:

```python
# Requires: pip install fake-useragent
from fake_useragent import UserAgent
from selenium import webdriver

# Generate a random User-Agent
user_agent = UserAgent().random

options = webdriver.ChromeOptions()
options.add_argument(f"user-agent={user_agent}")
driver = webdriver.Chrome(options=options)
driver.get("https://www.whatismybrowser.com/")
print(f"Using User-Agent: {user_agent}")
driver.quit()
```

Explanation:

This script dynamically assigns a new User-Agent string to each browser session, making the scraping process more random and harder to detect.
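There is one caveat when combining rotation with stealth. `stealth()` applies its own User-Agent override (it accepts a `user_agent` argument), so the rotated string should be passed to it as well. The `platform` value should also match the operating system in that string. The sketch below keeps each User-Agent paired with a matching platform. The profile strings are illustrative examples, not a maintained list, and `pick_profile()` and `stealth_kwargs()` are hypothetical helpers.

```python
import random
from typing import Optional

# Illustrative profiles: each User-Agent is paired with a navigator.platform
# value that matches its operating system, so the two do not contradict.
PROFILES = [
    {
        "user_agent": (
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
            "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
        ),
        "platform": "Win32",
    },
    {
        "user_agent": (
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
            "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
        ),
        "platform": "MacIntel",
    },
]


def pick_profile(rng: Optional[random.Random] = None) -> dict:
    """Choose one profile at random for a new browser session."""
    chooser = rng if rng is not None else random
    return chooser.choice(PROFILES)


def stealth_kwargs(profile: dict) -> dict:
    """Build keyword arguments for selenium_stealth.stealth() from a profile."""
    return {
        "user_agent": profile["user_agent"],
        "languages": ["en-US", "en"],
        "vendor": "Google Inc.",
        "platform": profile["platform"],
        "webgl_vendor": "Intel Inc.",
        "renderer": "Intel Iris OpenGL Engine",
        "fix_hairline": True,
    }
```

To use it in a session, follow these steps:

1. Call `profile = pick_profile()`.
2. Add `f"--user-agent={profile['user_agent']}"` to the Chrome options and create the driver.
3. Call `stealth(driver, **stealth_kwargs(profile))` before the first `driver.get()`.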

4. Disabling WebRTC and Fingerprinting

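WebRTC leaks and browser fingerprinting allow websites to gather unique identifiers about a device or network. Stealth mode masks some fingerprinted values, such as the WebGL vendor and renderer. Its parameters do not switch off WebRTC, which needs separate browser configuration.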

Code Example:

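```python
from selenium import webdriver
from selenium_stealth import stealth

driver = webdriver.Chrome()
stealth(
    driver,
    fix_hairline=True,  # Prevents hairline feature detection
    renderer="Intel Iris OpenGL Engine",  # Masks WebGL rendering details
    webgl_vendor="Google Inc.",  # Masks WebGL vendor details
)
# Open a page that reports the WebGL vendor and renderer the browser exposes
driver.get("https://browserleaks.com/webgl")
```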

Explanation:

Parameters like webgl_vendor and renderer mask sensitive details that can reveal automation tools, protecting against browser fingerprinting.
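Limiting WebRTC is usually done through Chrome preferences rather than selenium-stealth. The minimal sketch below assumes Chrome honors the `webrtc.*` preference names shown when they are passed through ChromeOptions. Verify the result on a leak-test page such as browserleaks.com/webrtc. `apply_webrtc_prefs()` is a hypothetical helper.

```python
# Chrome preferences that restrict which network paths WebRTC may use.
# Assumption: these preference names are honored when set via ChromeOptions.
WEBRTC_PREFS = {
    # Allow only proxied WebRTC connections; non-proxied UDP is disabled.
    "webrtc.ip_handling_policy": "disable_non_proxied_udp",
    "webrtc.multiple_routes_enabled": False,
    "webrtc.nonproxied_udp_enabled": False,
}


def apply_webrtc_prefs(options) -> None:
    """Add the WebRTC preferences to a selenium ChromeOptions object."""
    # Merge with any prefs already set, since add_experimental_option
    # replaces the whole "prefs" dictionary.
    prefs = dict(options.experimental_options.get("prefs", {}))
    prefs.update(WEBRTC_PREFS)
    options.add_experimental_option("prefs", prefs)
```

Create `options = webdriver.ChromeOptions()` and call `apply_webrtc_prefs(options)`. Then pass `options` to `webdriver.Chrome()` and apply `stealth()` as before.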

Code Examples for Web Scraping with Selenium Stealth Mode

Here are some of the code examples for web scraping with Selenium Stealth Mode:

1. Basic Stealth Mode Setup

This example shows how to configure Selenium with stealth mode to scrape a simple website:

```python
from selenium import webdriver
from selenium_stealth import stealth

# Initialize WebDriver
driver = webdriver.Chrome()

# Apply Stealth Mode
stealth(
    driver,
    languages=["en-US", "en"],
    vendor="Google Inc.",
    platform="Win32",
    webgl_vendor="Intel Inc.",
    renderer="Intel Iris OpenGL Engine",
    fix_hairline=True,
)

# Open Target Website
driver.get("https://opensea.io/")
print(f"Page Title: {driver.title}")
driver.quit()
```

Explanation:

- languages: Sets the browser’s language preferences.

- vendor and platform: Simulate real-world browser attributes.

- webgl_vendor and renderer: Mask GPU details to prevent browser fingerprinting.

- Output: This script loads the page and prints the title, demonstrating stealth mode’s successful integration.

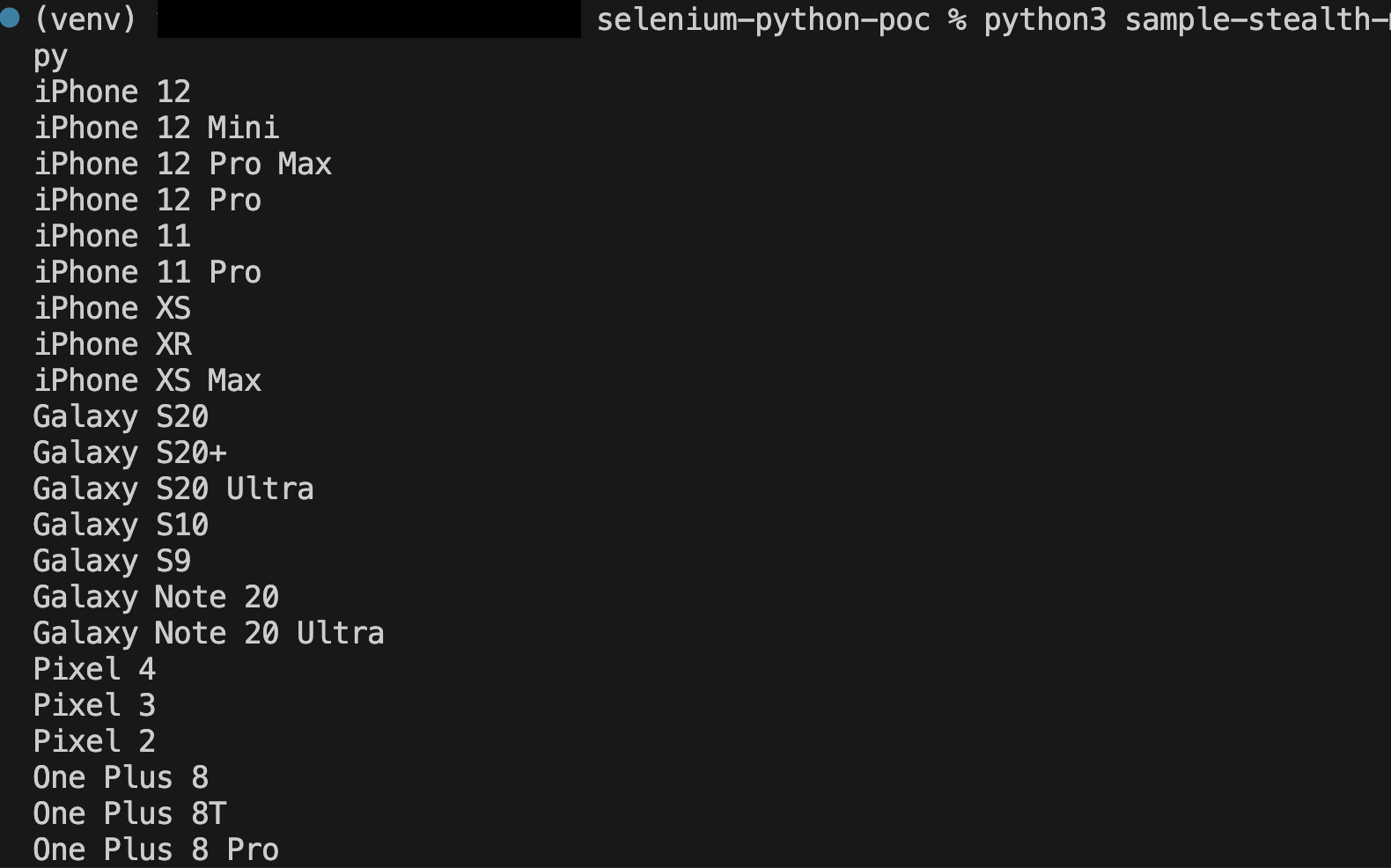
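If anything between `webdriver.Chrome()` and `driver.quit()` raises an exception, the basic script never quits the browser and leaves a Chrome process running. A small context manager guarantees cleanup. `stealth_chrome()` is a hypothetical helper that reuses the same stealth settings. Selenium and selenium-stealth are imported inside the function so the helper can be defined without them installed.

```python
from contextlib import contextmanager


@contextmanager
def stealth_chrome(headless: bool = False):
    """Yield a Chrome driver with stealth applied, and always quit it."""
    # Imported lazily so this helper can be defined without Selenium installed.
    from selenium import webdriver
    from selenium_stealth import stealth

    options = webdriver.ChromeOptions()
    if headless:
        options.add_argument("--headless")
    driver = webdriver.Chrome(options=options)
    try:
        # Apply stealth before the first navigation so the patches take effect.
        stealth(
            driver,
            languages=["en-US", "en"],
            vendor="Google Inc.",
            platform="Win32",
            webgl_vendor="Intel Inc.",
            renderer="Intel Iris OpenGL Engine",
            fix_hairline=True,
        )
        yield driver
    finally:
        # Runs even if the caller's code raises.
        driver.quit()
```

Usage: `with stealth_chrome() as driver:` followed by `driver.get("https://opensea.io/")` and `print(driver.title)` inside the block. The browser closes when the block exits, even on error.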

2. Scraping a Website with Anti-Bot Detection

Websites often employ anti-bot systems to detect automation. This example shows how stealth mode can be combined with additional Selenium features to extract content:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium_stealth import stealth

# Create a ChromeOptions object
options = webdriver.ChromeOptions()
options.add_argument('--headless')

# Set up WebDriver
driver = webdriver.Chrome(options=options)

# Apply stealth mode (this also hides navigator.webdriver)
stealth(
    driver,
    languages=["en-US", "en"],
    vendor="Google Inc.",
    platform="Win32",
    webgl_vendor="Intel Inc.",
    renderer="Intel Iris OpenGL Engine",
    fix_hairline=True,
)

# Open a webpage
driver.get("https://www.bstackdemo.com/")

# Extract product data
products = driver.find_elements(By.CLASS_NAME, "shelf-item__title")
for product in products:
    print(product.text)

driver.quit()
```

Explanation:

- Finding Elements: The find_elements method locates all product titles with a specific CSS class.

- Real-World Scenario: Useful for scraping e-commerce sites with a lower risk of triggering detection systems.
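If the product list on bstackdemo.com is rendered by JavaScript after the initial page load, `find_elements` can run before any titles exist and return an empty list. The sketch below performs the same extraction with an explicit wait, using Selenium's `WebDriverWait` with `expected_conditions.presence_of_all_elements_located`. `scrape_product_titles()` is a hypothetical helper, and the 10-second timeout is an assumption.

```python
def scrape_product_titles(driver, timeout: float = 10.0) -> list[str]:
    """Wait for product titles to appear, then return their text."""
    # Imported lazily so this helper can be defined without Selenium installed.
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    locator = (By.CLASS_NAME, "shelf-item__title")
    # Wait until at least one matching element is present; raises
    # selenium.common.exceptions.TimeoutException after `timeout` seconds.
    elements = WebDriverWait(driver, timeout).until(
        EC.presence_of_all_elements_located(locator)
    )
    return [element.text for element in elements]
```

After `driver.get("https://www.bstackdemo.com/")`, call `titles = scrape_product_titles(driver)` in place of the `find_elements` loop.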


Ethical Considerations for Web Scraping

Web scraping is a powerful technique, but it must be done responsibly to avoid legal and ethical issues. Here are the key points to consider:

1. Respect Website Terms of Service: Always review and adhere to the website’s terms of service before scraping. Violating these terms can result in legal action or bans.

2. Follow robots.txt Rules: Check the website’s robots.txt file to understand which pages are off-limits for bots, and configure your scraper accordingly.

3. Avoid Server Overload: Scraping too frequently can strain the website’s servers, affecting its performance. Use rate limiting and introduce delays between requests to mimic human behavior.

4. Handle Data Responsibly: Avoid collecting sensitive or personal information, and ensure compliance with data protection laws like GDPR or CCPA if applicable.

5. Respond to Restrictions Ethically: If faced with CAPTCHAs or IP bans, reconsider your scraping approach rather than aggressively bypassing these barriers.

6. Respect Content Ownership: Content on websites is often copyrighted. Using scraped content for purposes beyond fair use can lead to intellectual property disputes.
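Points 2 and 3 can be enforced in code. The sketch below uses two pieces:

- Python's standard `urllib.robotparser` tests a URL against robots.txt rules.
- A hypothetical `PoliteThrottle` class enforces a minimum, slightly randomized delay between requests.

The bot name, the sample rules, and the delay values are assumptions. In practice you would load the site's real robots.txt with `RobotFileParser.set_url()` and `read()`.

```python
import random
import time
import urllib.robotparser


def allowed_by_robots(robots_lines: list[str], user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


class PoliteThrottle:
    """Enforce a minimum delay, plus random jitter, between requests."""

    def __init__(self, min_interval: float = 2.0, jitter: float = 1.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.jitter = jitter
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self) -> float:
        """Sleep as needed before the next request; return the time slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            target = self.min_interval + random.uniform(0, self.jitter)
            delay = max(0.0, target - (now - self._last))
            if delay:
                self._sleep(delay)
        self._last = self._clock()
        return delay
```

A scraper would skip any URL for which `allowed_by_robots(...)` returns False and call `throttle.wait()` before each `driver.get()`.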


Advantages of using Selenium Stealth Mode

Using Selenium Stealth Mode provides significant advantages for web scraping by bypassing anti-bot detection mechanisms and ensuring smoother data extraction.

1. Improved Success Rate in Scraping Protected Websites

Websites with strict security measures, such as those employing anti-bot systems, can block standard Selenium scripts. Stealth mode effectively modifies browser behavior to mimic real user activity, bypassing these defenses.

2. Reduced Likelihood of Detection and Blocking

Stealth mode conceals telltale automation markers, such as navigator.webdriver, and adjusts headers and user-agent strings to avoid suspicion. By doing so, it minimizes the risk of being flagged or blocked during a scraping session.

3. Enhanced Ability to Scrape Complex Web Pages

Modern websites often feature dynamic elements powered by JavaScript, AJAX, or infinite scrolling. Stealth mode keeps these pages from blocking the session, so Selenium's own tools, such as explicit waits and scripted scrolling, can handle the dynamically loaded content.

By improving success rates, reducing detection risks, and enhancing the ability to handle complex websites, Selenium Stealth Mode ensures reliable and efficient web scraping, even in challenging environments.

Common Challenges of using Selenium Stealth Mode and Solutions

Even with stealth applied, several challenges can still arise when scraping with Selenium. Here are some of them:

Selenium Stealth Mode Challenges:

- CAPTCHA Challenges

- IP Bans

- Dynamic Content Issues

- Session Persistence

1. CAPTCHA Challenges

Websites may display CAPTCHAs to verify the user is human, blocking automated scripts.

Solutions:

- Use CAPTCHA-solving services like 2Captcha or Anti-Captcha.

- Add manual CAPTCHA-solving steps for occasional cases.
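For the manual option, a script can pause when a CAPTCHA appears and resume once a person has solved it in a visible (non-headless) browser window. In this minimal sketch, `captcha_present` is a hypothetical predicate you supply, and the timeout and polling interval are assumptions.

```python
import time
from typing import Callable


def wait_for_manual_captcha(
    driver,
    captcha_present: Callable[[object], bool],
    timeout: float = 300.0,
    poll: float = 2.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Pause until a human clears the CAPTCHA; return False on timeout."""
    if not captcha_present(driver):
        return True  # Nothing to solve.
    print("CAPTCHA detected: please solve it in the browser window.")
    deadline = clock() + timeout
    while clock() < deadline:
        sleep(poll)
        if not captcha_present(driver):
            return True
    return False
```

One possible predicate is `lambda d: bool(d.find_elements(By.CSS_SELECTOR, "iframe[src*='recaptcha']"))`, which checks for an iframe whose `src` contains "recaptcha". Whether that selector matches depends on the CAPTCHA provider a site uses.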

2. IP Bans

Repeated scraping requests from the same IP can result in bans or blocks.

Solutions:

- Rotate IPs using proxies or VPNs.

- Leverage services like BrightData or ScraperAPI for managing IP pools.
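A minimal sketch of proxy rotation for Chrome gives each new session the next proxy from a pool through Chrome's `--proxy-server` switch. The addresses are placeholders from the documentation range 203.0.113.0/24, and `proxy_arguments()` is a hypothetical helper. `--proxy-server` does not accept a username and password, so authenticated proxies typically need another approach, such as an IP allowlist offered by the provider.

```python
from itertools import cycle
from typing import Iterable, Iterator

# Placeholder proxies (RFC 5737 documentation addresses); replace with your own.
PROXIES = [
    "203.0.113.10:8080",
    "203.0.113.11:8080",
    "203.0.113.12:8080",
]


def proxy_arguments(proxies: Iterable[str]) -> Iterator[str]:
    """Yield Chrome --proxy-server switches, cycling through the pool forever."""
    for proxy in cycle(proxies):
        yield f"--proxy-server=http://{proxy}"
```

Create the generator once with `proxy_args = proxy_arguments(PROXIES)`. Then, for each session, call `options.add_argument(next(proxy_args))` on a fresh `webdriver.ChromeOptions()` before creating the driver and applying stealth.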

3. Dynamic Content Issues

Websites using AJAX or JavaScript to load content dynamically can delay or hide necessary elements.

Solutions:

- Implement explicit waits to ensure all elements are fully loaded.

- Use browser DevTools to inspect network activity and directly access AJAX endpoints.
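For pages that load more items as you scroll, a common pattern is to scroll to the bottom repeatedly until the page height stops growing. The sketch below uses Selenium's `execute_script`. `scroll_until_stable()` is a hypothetical helper, and the pause length and round limit are assumptions that depend on how quickly the target site loads content.

```python
import time


def scroll_until_stable(driver, pause: float = 1.5, max_rounds: int = 20,
                        sleep=time.sleep) -> int:
    """Scroll to the bottom until the page height stops changing.

    Returns the number of scroll rounds performed.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    for rounds in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        # Give the page time to fetch and render the next batch of items.
        sleep(pause)
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return rounds
        last_height = new_height
    return max_rounds
```

Call it after `driver.get()` and before collecting elements, so the items loaded by scrolling are present in the DOM.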

4. Session Persistence

Losing session data or cookies between runs can disrupt authenticated scraping or personalized data collection.

Solutions:

- Use browser profiles to retain cookies and session data across runs.

- Save and reload cookies to maintain authenticated sessions.
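Saving and reloading cookies can be sketched with Selenium's `get_cookies()` and `add_cookie()`. The browser only accepts cookies for the domain currently loaded, so the driver must open the site before `load_cookies()` runs. For browser profiles, Chrome's `--user-data-dir=<path>` switch points the browser at a persistent profile directory. The file path in the usage note is a placeholder.

```python
import json
from pathlib import Path


def save_cookies(driver, path: str) -> None:
    """Write the current session's cookies to a JSON file."""
    Path(path).write_text(json.dumps(driver.get_cookies(), indent=2))


def load_cookies(driver, path: str) -> int:
    """Add cookies from a JSON file to the current session.

    The driver must already be on the cookies' domain, otherwise the
    browser rejects them. Returns the number of cookies added.
    """
    cookies = json.loads(Path(path).read_text())
    for cookie in cookies:
        driver.add_cookie(cookie)
    return len(cookies)
```

Typical use: call `save_cookies(driver, "cookies.json")` at the end of an authenticated run. In the next run, call `driver.get(site_url)`, then `load_cookies(driver, "cookies.json")`, then `driver.refresh()` so the page picks up the restored session.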

By addressing these challenges with the solutions above, you can significantly enhance the efficiency and reliability of web scraping with Selenium Stealth Mode.

Alternatives to Selenium Stealth Mode

While Selenium Stealth Mode is effective, it’s not the only solution for bypassing anti-bot measures. Several alternatives offer similar or advanced capabilities depending on your requirements.

Alternatives to Selenium Stealth Mode:

- Puppeteer with Stealth Plugins

- Dedicated Web Scraping Tools (for example, Scrapy)

- Specialized Proxy Services with Built-in Anti-Bot Features

- Selenium’s Built-in Capabilities

1. Puppeteer with Stealth Plugins

Puppeteer, a headless browser automation tool for Node.js, is widely recognized for its simplicity and power. Adding stealth plugins, like puppeteer-extra-plugin-stealth, enhances Puppeteer’s ability to bypass detection.

- Benefit: Offers robust anti-detection mechanisms tailored for JavaScript-based scraping.

- When to Choose: Ideal for projects requiring advanced JavaScript execution or extensive DOM manipulation.

2. Dedicated Web Scraping Tools (for example, Scrapy)

Scrapy is a Python-based web scraping framework designed for scalable and efficient data extraction. While not inherently stealthy, it integrates well with proxy services and user-agent rotation libraries.

- Benefit: Excellent for large-scale scraping projects requiring speed and scalability.

- When to Choose: Suitable for structured websites and high-volume scraping needs.

3. Specialized Proxy Services with Built-in Anti-Bot Features

Proxy services like BrightData, Oxylabs, and ScraperAPI provide advanced anti-detection capabilities, including IP rotation, geotargeting, and CAPTCHA bypass.

- Benefit: Eliminates the need for complex scripting by handling detection and blocking automatically.

- When to Choose: Ideal for non-technical users or projects with stringent time constraints.


4. Selenium’s Built-in Capabilities

For basic anti-detection requirements, Selenium itself can be customized to include user-agent rotation, proxy configurations, and header modifications.

- Benefit: Reduces dependency on external tools while leveraging existing Selenium setups.

- When to Choose: Suitable for simpler websites with minimal bot defenses.
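Without selenium-stealth, a few commonly used Chrome-specific settings reduce the most obvious automation tells. The sketch below relies on two real mechanisms:

- Standard ChromeOptions methods.
- The Chrome DevTools Protocol command `Page.addScriptToEvaluateOnNewDocument`, sent through `driver.execute_cdp_cmd()`.

How much these settings help against a given site is an assumption to verify. `configure_chrome_options()` and `hide_webdriver_flag()` are hypothetical helpers.

```python
from typing import Optional

# Script injected into every new document before the page's own scripts run.
HIDE_WEBDRIVER_JS = (
    "Object.defineProperty(navigator, 'webdriver', {get: () => undefined});"
)


def configure_chrome_options(options, user_agent: Optional[str] = None) -> None:
    """Apply common anti-detection switches to a selenium ChromeOptions object."""
    # Commonly used to stop Blink from reporting navigator.webdriver as true.
    options.add_argument("--disable-blink-features=AutomationControlled")
    # Hides the "Chrome is being controlled by automated test software" infobar.
    options.add_experimental_option("excludeSwitches", ["enable-automation"])
    if user_agent:
        options.add_argument(f"--user-agent={user_agent}")


def hide_webdriver_flag(driver) -> None:
    """Register a script that hides navigator.webdriver on every page load."""
    # Must run before driver.get() so it applies to the first navigation.
    driver.execute_cdp_cmd(
        "Page.addScriptToEvaluateOnNewDocument", {"source": HIDE_WEBDRIVER_JS}
    )
```

Call `configure_chrome_options(options)` before `webdriver.Chrome(options=options)`, then call `hide_webdriver_flag(driver)` before the first `driver.get()`.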

Choosing Between Selenium and Puppeteer

Choosing between Selenium and Puppeteer depends on your testing and automation needs, as both tools offer unique features tailored for different scenarios. Here are the differences between them:

| Feature | Selenium | Puppeteer |
|---|---|---|
| Programming Language | Multi-language support (for example, Python, Java, C#) | JavaScript (Node.js) |
| Anti-Detection Plugins | Requires external libraries like selenium-stealth | Third-party stealth plugins such as puppeteer-extra-plugin-stealth |
| Ease of Use | Moderate setup complexity | Easier to configure with plugins |
| Use Case | Legacy applications and multi-language projects | Modern JavaScript-heavy websites |

Why run Selenium Tests on BrowserStack?

BrowserStack Automate is a cloud-based platform that significantly enhances Selenium testing by offering a scalable, reliable, and comprehensive environment for executing automated tests.

Here’s why it’s a preferred choice for Selenium users:

1. Access to Real Devices and Browsers: BrowserStack provides instant access to over 3,500 real devices and browser combinations, enabling comprehensive cross-browser and cross-platform testing.

2. Eliminate Local Setup Complexities: Running Selenium tests locally often involves maintaining multiple browser versions and operating systems, which can be time-consuming and error-prone. BrowserStack hosts these environments, so you don't have to maintain them yourself.

3. Support for Parallel Testing: It enables parallel test execution, allowing you to run multiple test suites concurrently.

4. Seamless CI/CD Integration: Integrate with popular CI/CD tools like Jenkins, GitHub Actions, and CircleCI to automate and streamline your testing pipeline for continuous delivery.

Conclusion

Selenium stealth mode is a powerful technique for enhancing web scraping capabilities. By masking the signals that reveal automation, it helps scripts get past many anti-bot systems and extract data from well-protected websites.

However, ethical considerations are paramount, so always respect website rules and avoid overloading servers.

To ensure seamless and scalable automation, consider leveraging tools like BrowserStack Automate, which enhance Selenium’s capabilities across real devices and browsers.