Software reliability is essential for user trust and satisfaction. It ensures that software functions consistently under expected and unexpected conditions.

Overview

What is Reliability Software Testing?

Reliability testing evaluates a software application’s stability and performance under varying conditions to ensure consistent functionality. It focuses on the application’s overall dependability, especially for critical systems, rather than just individual components.

Benefits of Reliability Testing:

- Provides better user experiences and strengthens brand perception.

- Saves money by resolving issues early rather than post-release.

- Ensures adherence to industry standards and avoids quality lapses.

- Builds trust by confirming software reliability in tough scenarios.

- Fosters customer retention, leading to higher sales potential.

- Frees up resources for innovation by reducing time spent on troubleshooting.

Types of Reliability Testing:

- Stress/Load Testing: Tests performance under normal and peak user loads.

- Regression Testing: Verifies new changes don’t disrupt existing functionality.

- Fault Injection Testing: Assesses the system’s ability to recover from unexpected failures.

- Endurance Testing: Evaluates long-term stability under sustained use.

This article covers what reliability testing is, its benefits, scenarios, types, and steps to perform it.

What is Reliability Testing?

In quality assurance (QA), reliability testing evaluates and improves an application’s dependability, focusing on its consistent performance within a broader system, especially for critical operations.

Why perform Reliability Testing?

Reliability testing supports software stability by surfacing load, stress, and fault tolerance issues before they reach users, so that the software performs consistently under varying conditions.

Key benefits include:

- Enhanced Customer Satisfaction: Reliable software leads to better user experiences and improved brand perception.

- Reduced Development Costs: Early identification and resolution of issues saves costs compared to fixing post-release.

- Improved Compliance: Ensures software meets industry standards and regulations by preventing quality compromises.

- Increased Confidence: Builds user and stakeholder confidence by confirming software performs as expected, even in challenging scenarios.

- Increased Revenue: Reliable software fosters trust, leading to customer retention and potential for higher sales.

- Enhanced Productivity: Minimizes troubleshooting, allowing teams to focus on innovation and development.

- Competitive Advantage: Offering highly reliable software sets your product or service apart in the market.

- Long-term Cost Savings: Reduces overall costs by minimizing defects, downtime, and ongoing support needs.

Reliability Testing Examples

Reliability testing examples illustrate how software maintains stability and performance under various conditions. These examples help ensure that applications can handle failures, load stress, and unexpected scenarios, providing a consistent user experience.

- Unit testing is code-based testing, such as JUnit tests for Java. It helps identify and isolate bugs at the level of individual software modules or units. It is most useful when mid- to large-sized teams work on products that adhere strongly to syntactic and packaging guidelines.

- Visual regression testing checks that the user experience stays consistent over time and across versions. For example, visual regression testing with Percy helps ensure layout consistency. It can be implemented manually or with automation as a front-end reliability test.

- Performance testing analyzes the impact of your app’s runtime on the device, network, power consumption, and so on. For example, BrowserStack Automate captures performance metrics for mobile device behavior using automated test scripts written in your preferred programming language and test framework.

- Accessibility testing improves support for automated agents and assistive technologies. For example, screen reader testing and voice assistant testing verify that agents can scan and parse content properly for distribution, translation, and contextualization. These tests help maintain accessibility standards reliably.

- Security testing covers the most mission-critical flows, such as authentication and payments, through manual or automated efforts.

Benefits of Reliability Testing

The advantages of incorporating reliability testing extend beyond technical aspects:

- Increased Revenue: Reliable software builds trust, leading to greater customer retention and potential for increased sales.

- Enhanced Productivity: Reduce the time and effort spent troubleshooting and fixing issues, allowing teams to focus on development and innovation.

- Competitive Advantage: Delivering highly reliable software can differentiate your product or service in the market.

- Long-term Cost Savings: Reduce development, maintenance, and support costs by minimizing defects and downtime.

What are the Types of Reliability Testing?

Reliability testing generally can be broken into these categories:

- Stress/Load Testing: Evaluating performance under expected and peak user loads, then pushing the system beyond its normal operating limits to find its breaking point.

- Regression Testing: Ensuring new code changes or updates don’t negatively impact existing functionality.

- Fault Injection Testing (also known as Fault Tolerance Testing): Evaluating the system’s ability to handle unexpected failures gracefully and recover.

- Endurance Testing (also known as Soak Testing): Assessing the system’s long-term stability and performance under sustained load.

Key Considerations for Effective Reliability Testing

Reliability testing plays a vital role in ensuring long-term software performance. By strategically considering key factors, even when balancing costs and resources, you can develop an efficient and targeted testing plan.

Key Factors to Consider:

- Product Ownership: Who is developing the product and what are their goals?

- Objective Alignment: How do these goals align with overall project objectives?

- Budget Constraints: What financial resources are available for reliability testing?

- Failure Detection: What tools and capabilities exist to detect failures?

- Data Insights: What actionable insights can be drawn from existing data?

- Risk Coverage: Where are the potential reliability blind spots, and how are they addressed?

For example, an online banking app prioritizes reliable transactions and security over raw speed, while a mobile game focuses on smooth gameplay and crash prevention. Reliability priorities vary with the product’s purpose.

This approach will vary based on the product’s goals, nature, and technical environment.

Reliability Testing Scenarios

Here are some realistic reliability testing types and corresponding test cases:

1. Load/Stress Testing

Goal: Identify breaking points, assess system resilience, and evaluate recovery mechanisms after failure.

Sample Test Cases:

Websites

- Flood the website with traffic far exceeding expected capacity.

- Simulate a denial-of-service (DoS) attack.

- Deprive the website of critical resources (for example, database connections and memory) and observe its behavior.

Web Services

- Subject the API to an extremely high request volume over a short period.

- Send malformed or invalid requests to test error handling.

- Evaluate the API’s ability to gracefully degrade under extreme stress.

Mobile Apps

- Push the app to its limits by performing resource-intensive tasks (for example, large file processing or complex calculations) while simultaneously using other features.

- Simulate low memory and storage conditions to observe the app’s response.

- Test the app’s behavior when network connectivity is interrupted or unstable.
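The high-volume request cases above can be sketched in a few lines of Python. This is a minimal illustration, not a replacement for a dedicated load testing tool: `handle_request` is a hypothetical stand-in for the system under test (it fails on every tenth call by design), and the request count and concurrency values are arbitrary assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(i: int) -> str:
    """Hypothetical stand-in for the system under test; fails on every 10th call."""
    time.sleep(0.001)  # simulate a small amount of work
    if i % 10 == 0:
        raise RuntimeError("simulated overload")
    return "ok"


def timed_call(target, i):
    """Call target(i) and return (succeeded, elapsed_seconds)."""
    start = time.perf_counter()
    try:
        target(i)
        return True, time.perf_counter() - start
    except Exception:
        return False, time.perf_counter() - start


def run_load(target, total_requests: int, concurrency: int) -> dict:
    """Fire total_requests calls at target using a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda i: timed_call(target, i), range(total_requests)))
    latencies = sorted(elapsed for _, elapsed in results)
    failures = sum(1 for ok, _ in results if not ok)
    return {
        "requests": total_requests,
        "error_rate": failures / total_requests,
        # Simple nearest-rank estimate of the 95th percentile latency.
        "p95_latency_s": latencies[int(0.95 * (len(latencies) - 1))],
    }


report = run_load(handle_request, total_requests=200, concurrency=20)
print(report)
```

In a real test, `handle_request` would wrap an HTTP call to your own endpoint, and you would raise the request volume step by step until the error rate or latency crosses the limit you consider acceptable.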

2. Regression Testing

Goal: Maintain software stability and prevent the introduction of new bugs or regressions during development.

Sample Test Cases:

Websites

- Re-run existing functional test suites after every code deployment.

- Test all critical user flows (for example, registration, login, and checkout) to ensure they function correctly after changes.

- Pay close attention to areas of the website that are frequently updated.

Web Services

- Re-execute API tests for all existing endpoints after code changes.

- Validate data integrity and consistency across different API versions.

- Ensure backward compatibility with older clients or integrations.

Mobile Apps

- Run automated UI tests that cover the app’s core functionality after each release.

- Test backward compatibility with older versions of the app and operating systems.

- Verify app data migration after updates.
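One simple way to picture a regression check is a comparison against outputs recorded from a known-good release. The sketch below assumes a hypothetical `price_with_tax` function and illustrative baseline values; `price_with_tax_v2` simulates a later change that accidentally alters rounding.

```python
def price_with_tax(amount: float, rate: float = 0.2) -> float:
    """Hypothetical function under test (known-good version)."""
    return round(amount * (1 + rate), 2)


def price_with_tax_v2(amount: float, rate: float = 0.2) -> float:
    """Hypothetical later version with an accidental rounding change."""
    return round(amount * (1 + rate), 1)


# Baseline recorded from the known-good release (illustrative values).
BASELINE = {
    (100.0,): 120.0,
    (19.99,): 23.99,
    (0.0,): 0.0,
}


def find_regressions(func, baseline: dict) -> list:
    """Return (args, expected, actual) for each input whose output changed."""
    regressions = []
    for args, expected in baseline.items():
        actual = func(*args)
        if actual != expected:
            regressions.append((args, expected, actual))
    return regressions


print(find_regressions(price_with_tax, BASELINE))     # no differences expected
print(find_regressions(price_with_tax_v2, BASELINE))  # reports the changed case
```

In practice the same idea is usually expressed through a test framework and run automatically after every change, but the principle is the same: stored expectations catch unintended differences.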

3. Fault Injection Testing

Goal: Ensure the system can gracefully handle various types of failures and resume normal operation quickly.

Sample Test Cases:

Websites

- Simulate database connection failures and observe how the website handles them.

- Introduce errors in third-party API integrations to check for appropriate error messages or fallback mechanisms.

- Test the website’s recovery process after a server crash or power outage.

Web Services

- Inject network latency or timeouts into API requests to test error handling and retry mechanisms.

- Simulate server crashes or database unavailability to assess fault tolerance.

- Evaluate the API’s ability to handle unexpected data inputs or format errors.

Mobile Apps

- Simulate a sudden loss of network connectivity and observe how the app handles offline scenarios.

- Inject errors during data synchronization or background tasks to test error handling and data recovery.

- Test the app’s ability to gracefully handle device crashes or unexpected system interruptions.
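The timeout and retry cases above can be illustrated with a small sketch. `FlakyService` is a hypothetical dependency that fails a configurable number of times, standing in for an injected network fault, and `call_with_retry` is an assumed retry helper with exponential backoff, not part of any particular library.

```python
import time


class FlakyService:
    """Hypothetical dependency that fails a fixed number of times before succeeding."""

    def __init__(self, failures_before_success: int):
        self.remaining_failures = failures_before_success
        self.calls = 0

    def fetch(self) -> str:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("injected fault")
        return "payload"


def call_with_retry(operation, max_attempts: int = 3, base_delay_s: float = 0.01):
    """Retry operation on ConnectionError, doubling the delay after each failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up and surface the failure to the caller
            time.sleep(base_delay_s * 2 ** (attempt - 1))


recovering = FlakyService(failures_before_success=2)
print(call_with_retry(recovering.fetch), recovering.calls)  # prints "payload 3"
```

A fault injection test would assert both outcomes: that the caller recovers when the fault clears within the retry budget, and that it fails cleanly (here, by re-raising `ConnectionError`) when it does not.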

4. Endurance Testing

Goal: Detect issues like memory leaks, resource exhaustion, or performance degradation that might only emerge over time.

Sample Test Cases:

Websites

- Run the website under a moderate load for an extended duration (for example, 24 hours or 7 days).

- Monitor server logs and system resource usage during the test.

Web Services

- Continuously send requests to the API at a moderate rate for a prolonged period and track its performance metrics (response time, error rate).

Mobile Apps

- Run the app continuously for an extended period, simulating various user interactions and background tasks.
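Memory leaks are the classic endurance finding, and the idea behind detecting them can be shown at small scale with Python’s standard `tracemalloc` module. In this sketch, `leaky_operation` and `clean_operation` are hypothetical operations, and the iteration count is an arbitrary assumption; a real soak test would run for hours and watch process-level metrics as well.

```python
import tracemalloc

_cache = []


def leaky_operation():
    """Hypothetical operation that keeps a reference to every buffer it creates."""
    _cache.append(bytearray(10_000))


def clean_operation():
    """Hypothetical operation whose buffer is released as soon as it returns."""
    bytearray(10_000)


def memory_growth_bytes(operation, iterations: int = 1_000) -> int:
    """Return how much traced memory grew across repeated calls to operation."""
    tracemalloc.start()
    try:
        operation()  # warm-up call so one-time allocations are not counted
        before, _ = tracemalloc.get_traced_memory()
        for _ in range(iterations):
            operation()
        after, _ = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return after - before


print("leaky growth:", memory_growth_bytes(leaky_operation))
print("clean growth:", memory_growth_bytes(clean_operation))
```

Growth that scales with the number of iterations, as in the leaky case, is the signal to investigate; growth that stays near zero suggests the operation releases what it allocates.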

These are just some illustrative examples. The specific test cases you choose will depend on your application’s features, target audience, and business requirements. Reliability testing is iterative. Continuously refine your tests based on results and user feedback.

Automation is crucial for efficient and comprehensive reliability testing. Tools like BrowserStack can help you automate tests across a wide range of devices and platforms.

What are the common Reliability Testing Metrics?

Several metrics are used to measure and track software reliability:

- Mean Time Between Failures (MTBF): The average time elapsed between system failures.

- Failure Rate: The number of failures per unit of time (for example, failures per hour).

- Availability: The percentage of time the software is operational and accessible.

- Mean Time To Repair (MTTR): The average time required to fix a failure and restore the system.

- Defect Density: The number of defects found relative to code size, typically expressed per thousand lines of code (KLOC).

- Customer-reported Issues: The number of bug reports or complaints received from users.
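To make the time-based metrics above concrete, here is a minimal sketch that derives them from a list of incidents. It follows one common convention (MTBF as operating time divided by the number of failures, and failure rate measured against operating time); the incident data and the 30-day observation window are made up for illustration.

```python
def reliability_metrics(incidents, observation_hours: float) -> dict:
    """Compute basic reliability metrics.

    incidents: list of (failure_start_hour, restored_hour) pairs within the window.
    observation_hours: total length of the observation window in hours.
    """
    if not incidents:
        raise ValueError("at least one incident is needed to compute MTBF and MTTR")
    downtime = sum(restored - failed for failed, restored in incidents)
    uptime = observation_hours - downtime
    failures = len(incidents)
    return {
        "mtbf_hours": uptime / failures,           # mean operating time per failure
        "mttr_hours": downtime / failures,         # mean time to restore service
        "availability": uptime / observation_hours,
        "failure_rate_per_hour": failures / uptime,
    }


# Illustrative data: three outages during a 30-day (720-hour) window.
incidents = [(100.0, 102.0), (400.0, 401.0), (650.0, 653.0)]
metrics = reliability_metrics(incidents, observation_hours=720.0)
print(metrics)
```

With these numbers, six hours of downtime across three failures gives an MTBF of 238 hours, an MTTR of 2 hours, and an availability of roughly 99.17%.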

Steps to perform Reliability Testing

Here’s how to perform reliability testing to ensure software resilience and stability across different conditions. Follow these key steps to effectively test and validate reliability for critical software functions.

Step 1: Modeling

Define the system’s operational profile, identifying expected usage patterns, environmental factors, and potential failure modes.

Example: eShop could map out customer activity patterns, such as peak traffic on weekends, and assess device and network loads. By understanding how customers interact with the app, they can simulate accurate usage scenarios.
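An operational profile can be represented as a weighted mix of user actions, which a test driver then samples to build a realistic workload. The action names and weights below are assumptions made for illustration; a real profile would come from analytics data.

```python
import random
from collections import Counter

# Hypothetical operational profile: share of user actions by type.
OPERATIONAL_PROFILE = {
    "browse_catalog": 0.55,
    "search": 0.25,
    "add_to_cart": 0.15,
    "checkout": 0.05,
}


def sample_actions(profile: dict, count: int, seed: int = 0) -> list:
    """Draw count actions at random, weighted by the profile."""
    rng = random.Random(seed)  # fixed seed keeps the workload reproducible
    actions = list(profile)
    weights = list(profile.values())
    return rng.choices(actions, weights=weights, k=count)


workload = sample_actions(OPERATIONAL_PROFILE, count=10_000)
mix = Counter(workload)
for action, n in mix.most_common():
    print(f"{action}: {n / len(workload):.1%}")
```

Seeding the generator keeps runs comparable, so a change in results between two test rounds reflects the software rather than a different mix of actions.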

Step 2: Measurement

Execute tests based on the defined model, collect data on failures, and measure relevant metrics (MTBF, availability, etc.).

Example: eShop could run tests that mimic user behavior during high-traffic periods and track the app’s response, noting any failures or slowdowns. Automated scripts can help monitor and log these metrics in real-time.

Step 3: Improvement

Analyze the collected data, identify the root causes of failures, implement improvements to enhance reliability, and repeat the testing process.

Example: After testing, eShop’s team might compare actual performance against the expected metrics. If the app crashes more frequently than anticipated, they can investigate root causes—like server strain—and make targeted fixes before running another round of tests to confirm improvements.
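Comparing measured results against expected metrics can be automated with a small check like the one below. The target names and thresholds are hypothetical; they stand in for whatever reliability goals your team has agreed on.

```python
# Hypothetical reliability targets: metric name -> (comparison, threshold).
TARGETS = {
    "availability": (">=", 0.999),
    "mtbf_hours": (">=", 200.0),
    "error_rate": ("<=", 0.01),
}


def unmet_targets(measured: dict, targets: dict) -> list:
    """Return (metric, measured_value, threshold) for every target that is missed."""
    misses = []
    for name, (comparison, threshold) in targets.items():
        value = measured[name]
        met = value >= threshold if comparison == ">=" else value <= threshold
        if not met:
            misses.append((name, value, threshold))
    return misses


# Illustrative results from one test round.
measured = {"availability": 0.9917, "mtbf_hours": 238.0, "error_rate": 0.004}
print(unmet_targets(measured, TARGETS))  # prints [('availability', 0.9917, 0.999)]
```

Each missed target becomes a candidate for root cause analysis, and rerunning the same check after a fix shows whether the improvement held.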

Approaches used for Reliability Testing

Various testing approaches are employed to assess different aspects of reliability:

- Regression Testing: Identifying unintended side effects of changes through repeated testing.

- Test Environment Variation: Evaluating the software’s behavior in different environments (operating systems, browsers, hardware configurations).

- Load Testing: Simulating high user loads to identify performance bottlenecks and ensure system stability.

- Stress Testing: Pushing the system beyond its normal operating limits to determine its breaking point.

- Fault Injection Testing: Deliberately introducing failures to observe the system’s recovery mechanisms.

What are the Factors influencing Software Reliability?

Many factors can influence the reliability of software:

- Software Architecture: A well-designed architecture with clear modules and components enhances reliability.

- Technology Stack: The choice of programming languages, libraries, and frameworks can impact reliability.

- Feature Complexity: More complex features tend to have a higher probability of containing defects.

- Device and Platform Diversity: Ensuring compatibility and consistent performance across several devices and platforms is crucial for reliability.

- Communication Protocols: Robust and reliable communication protocols are essential for systems that interact with external services or devices.

- External Factors: Environmental conditions, user behavior, and unexpected events can also affect software reliability.

Best Practices for Reliability Testing

To maximize the effectiveness of reliability testing:

- Automate Testing: Automate repetitive test cases to improve efficiency and coverage.

- Vary Test Environments: Test across a wide range of operating systems, browsers, devices, and network conditions.

- Collect and Analyze Data: Track reliability metrics diligently and utilize data analysis to identify trends and prioritize improvements.

- Leverage AI and Machine Learning: Explore using AI-powered tools for test case generation, anomaly detection, and root cause analysis.

- Incorporate Feedback Loops: Gather feedback from users and testers to identify and address real-world reliability issues.

- Continuous Improvement Cycle: Embrace a continuous improvement mindset by regularly reviewing your testing strategy and incorporating learnings into future development cycles.

- Value Collaboration: Encourage open communication and knowledge sharing to promote a culture of quality and reliability within your team.

Why Perform Reliability Software Testing on Real Devices

Virtualization undeniably provides significant advantages, especially for startups and smaller teams. However, virtual environments are not without their shortcomings:

- Incomplete Replication: Emulators and simulators can’t fully mimic the intricacies of real-world devices, including hardware quirks, network conditions, and sensor interactions.

- Limited Accuracy: Testing solely on virtual environments can lead to inaccurate results, as performance and behavior may differ significantly on actual devices.

- Missed Edge Cases: Virtualization might overlook critical issues related to device-specific hardware or software interactions, potentially resulting in undetected bugs.

The Role of Real Devices

A real device cloud provides access to a large collection of physical devices for testing, bridging the gap left by virtualization:

- Real-World Accuracy: Testing on real devices ensures that your application is tested under authentic conditions, leading to more reliable results.

- Uncovering Edge Cases: Real devices expose device-specific issues that might be missed in virtual environments, ensuring higher quality and user satisfaction.

- Critical for Specific Tests: Checking performance under different network conditions or measuring battery consumption requires real devices to provide accurate results.

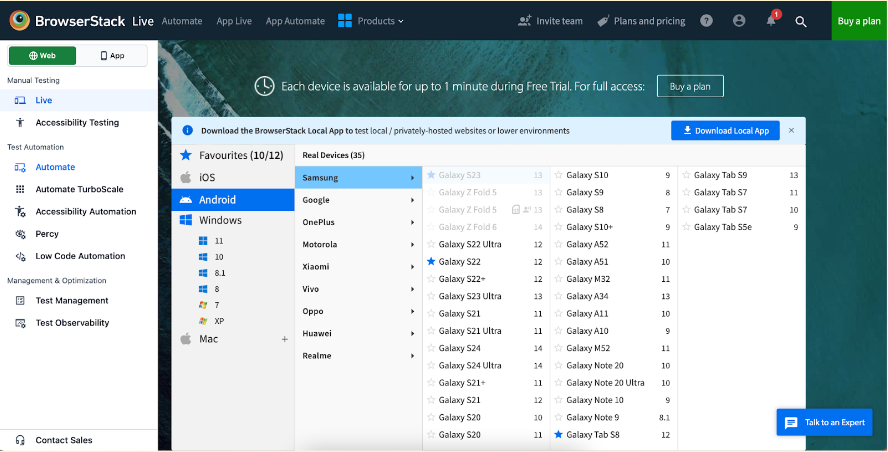

With BrowserStack Live and BrowserStack Automate, you can easily conduct real device testing across various mobile devices and browsers without needing a physical device lab. Key benefits include:

- Access to 3,500+ Real Devices: Test on a wide range of devices, from the latest models to older ones, ensuring maximum coverage for your target audience.

- Hassle-Free Testing: No setup or maintenance required—start testing on real devices with just a few clicks.

- Automated Testing at Scale: Leverage BrowserStack Automate to run automated test scripts on real devices, enabling you to test reliability on a large scale quickly and efficiently.

- Comprehensive Debugging Tools: Real-time debugging with integrated tools allows you to quickly identify and fix issues that only appear on specific devices.

Conclusion

Reliability software testing is essential for delivering high-quality, user-friendly applications. By implementing a robust testing strategy, incorporating best practices, and leveraging tools like BrowserStack, you can significantly enhance the reliability of your software, build user trust, and achieve lasting success in the market.