What is Model-Based Testing in Software Testing

By TechnoGeek, Community Contributor - November 6, 2024

Model-based testing fundamentally changes how software quality is ensured by using abstract models to design, automate, and execute test cases. From these models, it systematically derives a complete set of test cases that can capture complex scenarios and detect potential defects early.

This improves testing efficiency and coverage, and reduces the chance of crucial issues slipping through. This approach reshapes test automation and quality assurance.

This guide explores model-based testing in detail, covering its methods, benefits, challenges, and best practices.

- What is Model-Based Testing?

- Importance of Model-Based Testing

- When to Choose Model-Based Testing

- How does Model-Based Testing work?

- Types of Model-Based Testing

- Popular Model-Based Testing Tools in the Market

- Best Practices for Model-Based Testing

- Advantages of Model-Based Testing

- Challenges of Model-Based Testing

- Common Misconceptions About Model-Based Testing

- Frequently Asked Questions (FAQs)

What is Model-Based Testing?

Model-based testing is a testing approach that generates test cases from abstract models of system behavior, focusing on requirements rather than on the details of the code. In Model-Based Testing, a “model” is a representation of the system under test (SUT) that captures its behaviors, workflows, inputs, outputs, and key states.

Models can be expressed in different notations, such as state-transition diagrams, dependency graphs, and decision tables. This model-driven approach enables automated test generation: when the model changes, the test cases are regenerated to match.

The model guides the testing process by defining the expected system behavior under different conditions, so that every test case is checked against that expectation.

With Model-Based Testing, testers can define high-level actions and outcomes, such as “Add Contact” or “Save File,” without getting bogged down by the specifics of each interaction.

This gives rise to a standardized process for test generation and keeps test maintenance manageable when system changes occur.

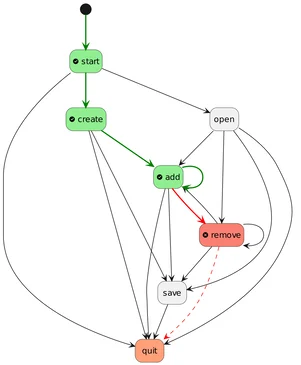

Example of Model-Based Testing

Consider a simple address book application. The main actions can include:

- Start the application

- Add or remove contacts

- Save and open files

- Quit the application

Source: QT

Instead of writing separate tests for each action, Model-Based Testing uses a state-transition model that represents the system's states (e.g., “File Opened,” “Contact Added”) and the actions that move the application between those states.

Model-based testing tools then generate test cases automatically to validate these transitions. For example, one test case could verify that adding a contact allows the file to be saved, while another confirms that quitting the application exits to the main menu.


This automated process improves coverage of real-world usage and speeds up testing, particularly when requirements change.
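To make the address book example concrete, here is a minimal Python sketch of a state-transition model and a generator that produces one test case per transition. The state names, action names, and helper functions are hypothetical; real tools use their own modeling notations, and the transitions below are assumptions chosen for illustration rather than the exact model from the Qt example.

```python
from collections import deque

# Hypothetical state-transition model of the address book.
# Each key is (current_state, action); each value is the expected next state.
MODEL = {
    ("Stopped", "start"): "Idle",
    ("Idle", "open_file"): "FileOpened",
    ("FileOpened", "add_contact"): "ContactAdded",
    ("ContactAdded", "remove_contact"): "FileOpened",
    ("ContactAdded", "save_file"): "FileSaved",
    ("FileSaved", "add_contact"): "ContactAdded",
    ("FileSaved", "quit"): "Stopped",
    ("Idle", "quit"): "Stopped",
}


def shortest_path(model, start, goal):
    """Breadth-first search: the shortest action sequence from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, actions = queue.popleft()
        if state == goal:
            return actions
        for (src, action), dst in model.items():
            if src == state and dst not in seen:
                seen.add(dst)
                queue.append((dst, actions + [action]))
    return None  # goal is unreachable from start


def expected_trace(model, initial, actions):
    """Replay actions through the model, pairing each with its expected state."""
    state, trace = initial, []
    for action in actions:
        state = model[(state, action)]
        trace.append((action, state))
    return trace


def generate_tests(model, initial):
    """Generate one test case per transition (all-transitions coverage)."""
    tests = []
    for (src, action) in model:
        prefix = shortest_path(model, initial, src)
        if prefix is None:
            continue  # unreachable transition: worth flagging during model review
        tests.append(expected_trace(model, initial, prefix + [action]))
    return tests


for test in generate_tests(MODEL, "Stopped"):
    print(" -> ".join(f"{action} [{state}]" for action, state in test))
```

Each generated test reaches the source state of a transition by the shortest path the model allows and then fires that transition, so every modeled transition is exercised at least once. Adding or changing an entry in `MODEL` is enough to regenerate the suite.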

Importance of Model-Based Testing

Here’s why model-based testing is significant in software testing:

- Early Detection of Bugs: By covering diverse scenarios, Model-Based Testing detects bugs early, improving product quality and reliability. This makes it well suited to teams working on complex applications with frequent updates, because critical workflows are tested consistently.

- Automated Test Generation: Because system models capture the logic and expected outcomes, they can be used to generate a wide range of test cases automatically. This is especially useful for regression testing, since the full suite can be regenerated and rerun whenever the system changes.

- Maximized Test Coverage: Model-based testing systematically explores different scenarios by generating test cases from a comprehensive model. This covers a wider range of inputs, actions, and states, many of which could be overlooked when tests are written by hand.

- Improved Accuracy and Consistency: Test cases generated from a model are derived directly from the specification, so they tend to be more accurate and consistent and are less prone to human error.
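Coverage from a model can be measured rather than assumed. The sketch below, using a hypothetical `transition_coverage` helper and an illustrative three-state editor model, computes what fraction of the model's transitions a hand-written suite exercises and lists the ones it misses.

```python
# Hypothetical model of a simple editor: (state, action) -> next state.
EDITOR_MODEL = {
    ("NoFile", "open"): "Open",
    ("Open", "edit"): "Modified",
    ("Modified", "save"): "Open",
    ("Open", "close"): "NoFile",
    ("Modified", "close"): "NoFile",  # close without saving
}


def transition_coverage(model, initial, suite):
    """Return (fraction of model transitions exercised, set of uncovered ones)."""
    covered = set()
    for actions in suite:
        state = initial
        for action in actions:
            key = (state, action)
            if key not in model:
                break  # the model does not allow this action in this state
            covered.add(key)
            state = model[key]
    return len(covered) / len(model), set(model) - covered


hand_written = [["open", "edit", "save"], ["open", "close"]]
ratio, missing = transition_coverage(EDITOR_MODEL, "NoFile", hand_written)
print(f"{ratio:.0%} of transitions covered; missing: {missing}")
```

Here the two hand-written tests cover four of the five transitions: closing a modified file without saving is never tested. A generator driven by the model would include that case automatically.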

When to Choose Model-Based Testing

Model-Based Testing is most effective in specific situations, such as:

- Complex Systems: Applications that exhibit complex behavior and a large number of states.

- Evolving Requirements: Projects whose requirements change often, since updating the model is enough to regenerate the affected test cases.

- Comprehensive Coverage Needed: Mission-critical applications that must be tested across many user scenarios.

- Interdisciplinary Team Collaboration: Teams that combine the expertise of domain experts, developers, and testers.

- Automation Opportunities: Projects that benefit from generating and executing test cases automatically, saving person-hours.

- Budget Constraints: Projects that can afford an initial investment in exchange for long-term savings from more efficient test design and maintenance.

Avoid Model-Based Testing in these cases:

- Simple Applications: The process is overkill for applications of low complexity.

- Limited Resources or Expertise: It may not work well in practice if the team lacks modeling expertise or resources.

How does Model-Based Testing work?

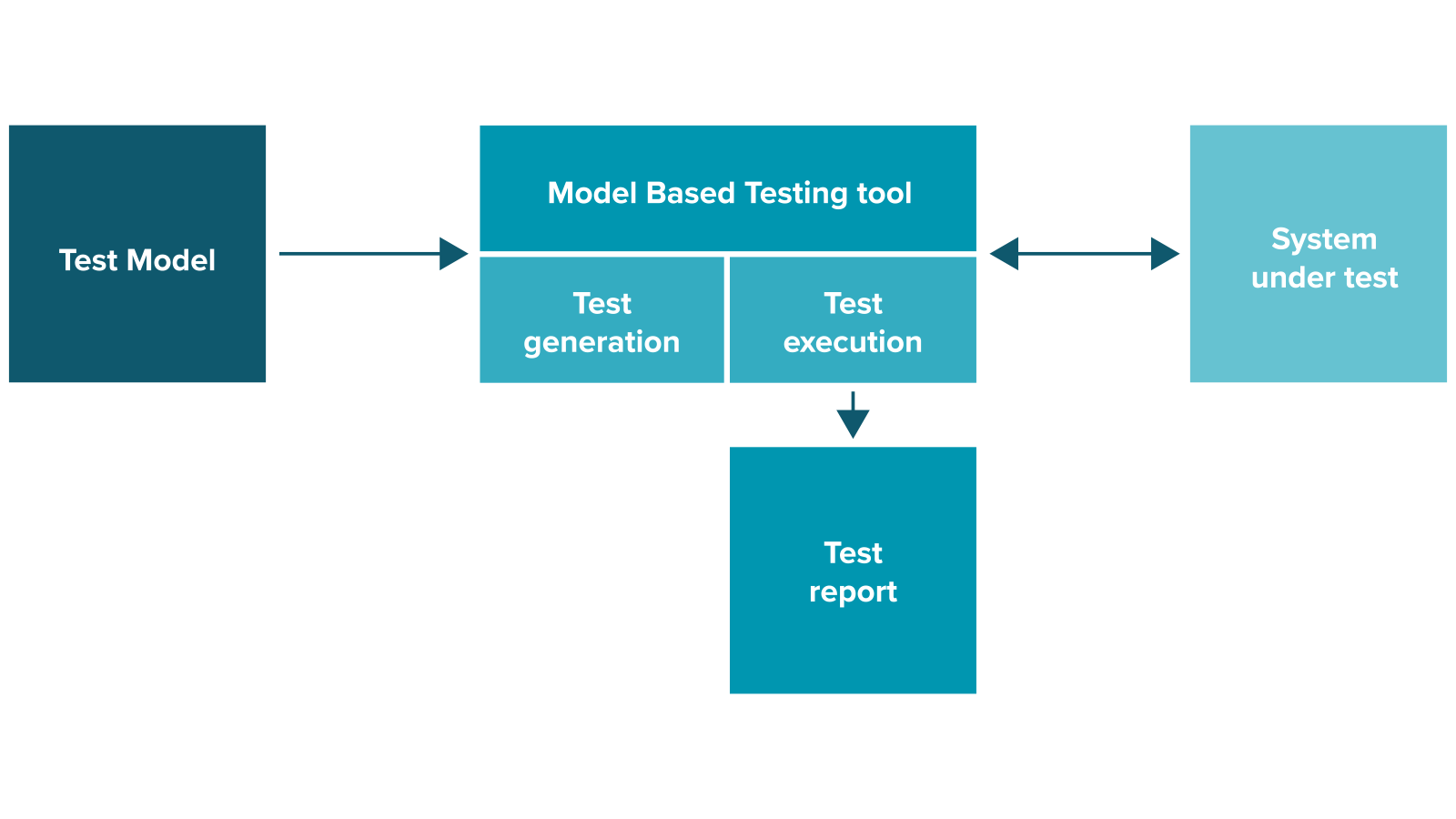

Source: ICT

Model-Based Testing uses an abstract model of the system under test (SUT) to help automate the generation of test cases, with a focus on capturing its behavior and expected outcomes.

Here is a summary of how Model Based Testing works:

1. Model Creation: An abstract model is developed to represent the expected behavior of the system under test, typically as a state machine, flowchart, or decision table. Domain experts, developers, and testers collaborate to ensure the model accounts for every relevant detail of the requirements.

2. Validation of the Model: The model is validated through reviews and simulations to confirm that it captures the desired system behavior and to identify any parts that need refinement.

3. Generate Test Cases: Test cases are generated automatically from the states, transitions, and inputs in the model, aiming for maximum coverage of the scenarios it describes.

4. Execute the Tests: Testers execute the generated test cases against the system under test, providing inputs and observing system responses, either manually or using automated tools.

5. Compare Results: The observed behavior is compared with the outcomes predicted by the model. Discrepancies point to potential defects in the software.

6. Report Defects: Any identified defects are documented in a report that details the issue and its context. Model Based Testing’s early detection of defects allows for timely resolution.

7. Maintain and Iterate the Model: As the system under test evolves, the model is updated to reflect changes. New test cases are generated to ensure ongoing alignment with the current system state, facilitating continuous improvement.
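Steps 3 to 6 can be sketched in Python as an on-the-fly loop: the model chooses the next action, the same action runs against the system, and the two results are compared after every step. This variant interleaves generation and execution (often called online testing) instead of generating the full suite first. `ContactModel`, `AddressBook`, and the seeded bug are all hypothetical.

```python
import random


class ContactModel:
    """Abstract model: tracks only how many contacts the book should hold."""

    def __init__(self):
        self.count = 0

    def enabled_actions(self):
        # Removing a contact is only allowed when at least one exists.
        return ["add", "remove"] if self.count > 0 else ["add"]

    def apply(self, action):
        self.count += 1 if action == "add" else -1
        return self.count  # expected number of contacts after the action


class AddressBook:
    """Hypothetical system under test, with a deliberately seeded bug."""

    def __init__(self):
        self.contacts = []

    def add(self, name):
        self.contacts.append(name)

    def remove(self):
        # Seeded bug: removes two contacts once more than two are stored.
        start = -2 if len(self.contacts) > 2 else -1
        del self.contacts[start:]


def run_random_walk(steps, seed):
    """Generate, execute, and compare step by step; return the first defect found."""
    rng = random.Random(seed)
    model, sut = ContactModel(), AddressBook()
    for i in range(steps):
        action = rng.choice(model.enabled_actions())  # 3. generate from the model
        if action == "add":                           # 4. execute against the SUT
            sut.add(f"contact-{i}")
        else:
            sut.remove()
        expected = model.apply(action)
        observed = len(sut.contacts)                  # 5. compare results
        if observed != expected:
            # 6. report the step, action, and both values; later states are unreliable.
            return {"step": i, "action": action, "expected": expected, "observed": observed}
    return None


print(run_random_walk(steps=300, seed=42))
```

Because the walk is seeded, a failing run can be reproduced exactly. With a few hundred steps the walk is very likely to reach three contacts and then remove one, which exposes the seeded bug.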

Example: Working of Model based Testing

For a login function, the model could have states like “Enter Username” and “Click Login,” with outcomes such as “Success” or “Failure.”

Test cases evaluate scenarios like incorrect passwords or blank fields, to verify the system’s behavior against expectations.
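A minimal sketch of the login example, assuming hypothetical credentials and a hypothetical `login` function as the system under test: the model reduces each field to input classes (valid, wrong or unknown, blank), predicts “Success” or “Failure” for every combination, and the harness compares each prediction with the system's actual response.

```python
from itertools import product

# Hypothetical valid credentials known to the system under test.
VALID_USER, VALID_PASS = "alice", "s3cret"

# Input classes for each field, with one representative value per class.
USERNAMES = {"valid": VALID_USER, "unknown": "mallory", "blank": ""}
PASSWORDS = {"correct": VALID_PASS, "wrong": "guess", "blank": ""}


def model_outcome(user_class, pass_class):
    """The model: login succeeds only for a valid user with the correct password."""
    if user_class == "valid" and pass_class == "correct":
        return "Success"
    return "Failure"


def login(username, password):
    """Hypothetical system under test."""
    if not username or not password:
        return "Failure"
    return "Success" if (username, password) == (VALID_USER, VALID_PASS) else "Failure"


def buggy_login(username, password):
    """Hypothetical faulty version that never checks the password."""
    return "Success" if username == VALID_USER else "Failure"


def run_login_tests(sut):
    """Generate all 3 x 3 class combinations, run each, and collect mismatches."""
    mismatches = []
    for user_class, pass_class in product(USERNAMES, PASSWORDS):
        expected = model_outcome(user_class, pass_class)
        observed = sut(USERNAMES[user_class], PASSWORDS[pass_class])
        if observed != expected:
            mismatches.append((user_class, pass_class, expected, observed))
    return mismatches


print(run_login_tests(login))  # prints []
print(run_login_tests(buggy_login))
```

For `buggy_login`, the harness reports the two cases where a valid username is paired with a wrong or blank password, which is exactly the class of defect the login scenarios are meant to catch.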

By streamlining test case generation and execution, Model-Based Testing makes software testing more efficient and effective for quality assurance teams.


Types of Model-Based Testing

Here are the main types of model-based testing:

- Finite State Machine Testing: Well suited to systems with clearly identifiable states and transitions, such as embedded systems. It verifies the state transitions and the behavior in each state, confirming that the system reacts correctly in any given state.

- Statecharts: Statecharts extend FSMs with hierarchy, parallelism, and more complex transitions. They are particularly helpful for reactive systems, such as industrial machinery, where complex state behavior needs to be verified.

- Decision Table Testing: Decision tables simplify complex business logic by defining conditions and the actions that follow from them. This ensures full coverage of input combinations, which is essential for rule-based systems like financial applications.

- Unified Modeling Language (UML) Testing: UML diagrams model system interactions, enabling test case generation that checks if a system meets expected behaviors. It is useful for applications requiring detailed interaction tracking, such as CRM systems.

- Markov Model Testing: Markov Model Testing is used for systems with probabilistic state transitions. It tests reliability under varied probabilities, making it ideal for applications where probabilistic outcomes affect functionality (for example, network protocols).

- Data Flow Testing: This technique tracks data from input through processing to output, verifying data accuracy, which makes it suited to data-heavy systems like databases.

- Scenario-Based Testing: Based on real-world scenarios or user stories, this technique ensures web or mobile applications meet user expectations and provide a seamless experience.
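To illustrate Markov Model Testing, the sketch below uses a hypothetical usage model of a simple connection protocol. Each state lists its possible actions with an assumed probability, and test sequences are generated by a weighted random walk from `Idle` until the connection is `Closed`. The states, actions, and probabilities are illustrative assumptions, not measured data.

```python
import random

# Hypothetical usage model of a connection protocol: for each state, the
# possible (action, next_state, probability) triples. Probabilities are assumed.
PROTOCOL_MODEL = {
    "Idle": [("connect", "Connecting", 1.0)],
    "Connecting": [("ack", "Connected", 0.9), ("timeout", "Idle", 0.1)],
    "Connected": [("send", "Connected", 0.7), ("close", "Closed", 0.3)],
}


def generate_usage_test(model, start, end, rng, max_steps=50):
    """Weighted random walk through the Markov model from start to end."""
    state, actions = start, []
    while state != end and len(actions) < max_steps:
        transitions = model[state]
        weights = [p for _, _, p in transitions]
        action, state, _ = rng.choices(transitions, weights=weights)[0]
        actions.append(action)
    return actions


rng = random.Random(7)  # fixed seed so the generated tests are reproducible
for _ in range(3):
    print(generate_usage_test(PROTOCOL_MODEL, "Idle", "Closed", rng))
```

Likely paths (connect, ack, a few sends, close) dominate the generated tests, while rarer ones such as a timeout still appear occasionally, which is how this style of testing mirrors expected field usage.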

Popular Model-Based Testing Tools in the Market

Here’s an overview of some popular Model-Based Testing tools in use today:

- Spec Explorer: Microsoft’s tool for modeling system behavior as model programs written in C#, which it explores into state graphs to generate test cases automatically.

- Parasoft SOAtest: Helps test SOA, web services, and APIs, with extensive functionality for automated functional, performance, and security testing.

- IBM Rational Test Workbench: Supports functional, performance, and security testing with the ability to create tests from UML models.


- UModel: Generates test cases, supports recording/replaying scripts, and enhances model-based test case creation.

- Robot Framework: Being open-source and keyword-driven, it can create tests from UML models, natural language specs, and spreadsheets.

- GraphWalker: Focuses on graph-based test modeling for functional testing, regression testing, and integration testing through visual test case generation.

- Conformiq: Provides automated test generation from graphical models, suited to complex scenarios and extensive coverage needs.

- Modbat: Uses state-based modeling to generate tests that cover various scenarios and edge cases efficiently.

- Worksoft Certify: A no-code test automation tool that supports cross-application testing for business-critical processes.

- Parasoft CTP: Manages test environments and test data, and integrates with CI/CD, making it suitable for distributed and microservices architectures.

- BPM-X: Helps align business processes with IT systems by generating test cases from BPM models and ensuring process validation and compliance.


Best Practices for Model-Based Testing

To maximize Model Based Testing’s effectiveness, consider these best practices:

| Best Practice | Approach | Benefits |
|---|---|---|
| Start with Simple Models | Begin by modeling basic functionalities to introduce the team to Model-Based Testing gradually. | Builds confidence and establishes a foundation for handling more complex models. |
| Engage Stakeholders at an early stage | Involve developers, testers, and business analysts at the onset. | Ensures the model accurately reflects requirements, reducing potential issues during the testing process. |
| Regular Model Validation | Continuously validate and update the model throughout the development lifecycle. | Maintains model relevance and accuracy, ensuring test cases remain effective as requirements evolve. |
| Prioritize Tool Selection and Integration | Choose Model-Based Testing tools that integrate well with current development and testing environments. | Streamlines the Model-Based Testing process, making adoption easier and more effective within existing workflows. |
| Focus on Model Maintainability | Design models and test cases to be easily maintainable as the system evolves. | Ensures long-term efficiency and adaptability, allowing the Model-Based Testing process to evolve with system changes. |
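Part of Regular Model Validation can be automated. The following sketch uses a hypothetical `validate_model` helper and an illustrative model with deliberate mistakes; it flags transitions that reference undeclared states, states that cannot be reached from the initial state, and non-final states with no way out.

```python
from collections import deque

# Illustrative model with deliberate mistakes (all names are hypothetical).
STATES = {"Stopped", "Idle", "FileOpened", "Archived"}
TRANSITIONS = {
    ("Stopped", "start"): "Idle",
    ("Idle", "open_file"): "FileOpened",
    ("FileOpened", "close_file"): "Idle",
    ("Idle", "quit"): "Stopped",
    ("FileOpened", "export"): "Exported",  # target state was never declared
}


def validate_model(states, transitions, initial, final_states):
    """Return a list of human-readable problems found in the model."""
    problems = []
    # 1. Every transition must start and end in a declared state.
    for (src, action), dst in transitions.items():
        for s in (src, dst):
            if s not in states:
                problems.append(f"transition {src} --{action}--> {dst} uses undeclared state {s}")
    # 2. Every declared state should be reachable from the initial state (BFS).
    reached, queue = {initial}, deque([initial])
    while queue:
        current = queue.popleft()
        for (src, _), dst in transitions.items():
            if src == current and dst not in reached:
                reached.add(dst)
                queue.append(dst)
    for s in sorted(states - reached):
        problems.append(f"state {s} is unreachable from {initial}")
    # 3. Non-final states need at least one outgoing transition.
    sources = {src for src, _ in transitions}
    for s in sorted(states - final_states - sources):
        problems.append(f"state {s} has no outgoing transitions")
    return problems


for problem in validate_model(STATES, TRANSITIONS, "Stopped", {"Stopped"}):
    print(problem)
```

For this model the check reports three problems: the undeclared target `Exported`, and the `Archived` state, which is both unreachable and a dead end. Checks like these complement, rather than replace, model reviews with stakeholders.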

Advantages of Model-Based Testing

Here are six key advantages of Model-Based Testing that make it a powerful testing strategy for quality assurance teams:

- Efficiency and Automation: Model-based testing improves test automation and saves much of the time and effort spent on manual testing. It generates test scenarios automatically from abstract models, letting teams focus on higher-level tasks.

- Comprehensive Test Coverage: Model-based testing systematically tests a system’s various states, transitions, and potential edge cases, enhancing test coverage across scenarios that manual testing might miss. The model-driven approach ensures that both positive and negative scenarios are included, allowing teams to capture a broad range of potential issues in the testing phase.

- Early Defect Detection: Model-Based Testing enables shift-left testing, meaning testing can start as early as the requirements phase. Discrepancies are exposed as early as possible, which saves cost and effort by catching defects before they surface late in the development cycle. The result is better-quality software and a smoother development process.

- Reusability and Maintenance: Once a model is created, it can be reused for various testing phases, such as integration and unit testing, and even across different projects. This reusability saves valuable time and effort. Additionally, the modular nature of Model Based Testing allows testers to make updates to the model when system requirements change instead of reworking individual test cases. This makes maintaining test cases much easier and less time-consuming.

- Consistency and Reproducibility: Tests generated from models are consistent and reproducible, which is essential for effective regression testing. Model-Based Testing ensures that test cases remain aligned with the system’s specifications and any defects detected are reliably documented and tracked over subsequent runs. Consistency helps teams avoid gaps in testing and enhances confidence in software releases.

- Enhanced Communication and Collaboration: Models represent the system graphically, which helps teams communicate clearly. This is especially useful for complex systems, where teams need to align on requirements as well as testing objectives. Model-Based Testing gives developers, testers, and stakeholders a common understanding, reducing miscommunication and helping resolve issues quickly.

Challenges of Model-Based Testing

Model-based testing also comes with a few challenges:

- High Initial Investment: Implementing Model Based Testing requires upfront investment in specialized tools, training, and time for model creation. For smaller teams or projects with limited budgets, this initial cost can be a significant barrier.

- Complex Model Maintenance: As systems change, the models must be updated to match, which can add to the time and cost of the testing process.

- Skill and Expertise Requirements: Model-based testing depends on abstract thinking and system modeling skills, which not all testers may possess. Additional training is often needed, making it difficult for teams without expertise in modeling to adopt Model-Based Testing effectively.

- Limited Scope for Non-Functional Testing: Model Based Testing mainly targets functional testing and may not address non-functional requirements like performance, security, or usability. This can lead to gaps in test coverage if additional testing strategies aren’t implemented.

- Risk of Over-Dependence on the Model: Over-reliance on Model-Based Testing can lead teams to neglect other test approaches, such as exploratory testing or real-world scenario testing. This may result in missed edge cases and reduce the overall effectiveness of the testing effort.

Common Misconceptions About Model-Based Testing

Here are a few common misconceptions about model-based testing:

- Model-Based Testing Replaces All Other Testing Methods: Many people believe that Model-Based Testing is the ultimate substitute for other testing methodologies. However, Model-Based Testing is most effective when used together with other methods, such as exploratory or performance testing, to achieve comprehensive test coverage.

- Model-Based Testing is a Quick Fix for All Testing Challenges: While Model-Based Testing can automate test case generation, developing and maintaining accurate models takes time and expertise. It may not be the best fit for simpler projects due to the initial setup investment required.

- The Model Guarantees Perfect Testing Coverage: The effectiveness of Model-Based Testing depends on the accuracy of the model. If the model is flawed, it can lead to missed defects. Continuous review and updating of the model are essential for effective testing.

- Model Based Testing is Only for Complex Systems: Although Model Based Testing is particularly beneficial for complex systems, it can also add value to simpler projects by providing consistent coverage and automating test case generation.

- Anyone Can Use Model Based Testing Tools Effectively: Success with Model Based Testing requires an understanding of modeling techniques, the system under test, and the Model Based Testing tool itself. Adequate training and expertise are necessary to fully leverage Model Based Testing’s capabilities.

- Model-Based Testing Only Tests User Interfaces: While Model-Based Testing primarily focuses on functional behavior, it is not limited to UI testing. Some tools also handle backend or API testing, which broadens where Model-Based Testing can be applied.

- Model Based Testing Eliminates Human Involvement in Testing: Although Model Based Testing automates aspects of testing, human expertise remains vital. Testers still need to analyze results, identify issues, and update models as necessary, requiring collaboration among team members.

- Model Based Testing is Only Suitable for Traditional Software Development: Model Based Testing can be adapted to Agile methodologies, allowing for incremental model updates and test generation. It is applicable in diverse contexts, including web applications and embedded systems.

- Model Based Testing is Too Complex to Implement: While the setup process can be intimidating at first, many modern Model-Based Testing tools simplify it, making it easier for teams to adopt Model-Based Testing and integrate it into their workflows.

- Model-Based Testing is Outdated: Some may view Model Based Testing as an old-fashioned approach tied to waterfall methodologies. However, Model-Based Testing has evolved and can effectively support modern, iterative development practices and collaborative testing environments.

Conclusion

Model-based testing is a structured approach to software testing, allowing teams to make significant improvements in testing efficiency and coverage.

Abstract models are used to generate test cases automatically, so that a wide range of scenarios can be tested thoroughly. This is especially beneficial for complex, dynamic software systems with changing requirements, where comprehensive testing provides substantial quality assurance.

Model-Based Testing is not a silver bullet; it delivers the most value in collaborative environments that can draw on input from domain experts, developers, and testers. For relatively simple applications, the modeling overhead can add unwarranted complexity.

Combine model-based testing with real-device testing for more comprehensive results. While Model-Based Testing ensures thorough coverage by generating test cases from the system’s modeled behavior, real-device testing verifies those cases in authentic environments that reflect real user conditions.

BrowserStack can be an ideal tool for this, as it offers a vast real-device cloud with access to 3500+ real device, browser, and OS combinations.

Frequently Asked Questions (FAQs)

1. What is a model-based technique?

A model-based technique involves creating abstract representations of a system to guide testing, allowing automated test generation based on predicted system behavior.