A Pytest coverage report is a code coverage report produced by the pytest-cov plugin and the coverage.py library. It shows the percentage of code executed when a test suite runs under Pytest.

Code coverage describes the extent to which a test suite has tested a codebase. It often reveals the lines of code that have been tested and those that have been skipped during testing.

A codebase with high code coverage scores inspires developers’ confidence, helps identify redundant code, and improves code maintainability.

This tutorial covers what code coverage is, how to generate a Pytest coverage report, and the tools available for Pytest code coverage.

What is Code Coverage?

Code Coverage measures the percentage of code in a given codebase that the executed test suite has tested. It calculates the ratio of code tested over the total source code and presents it in percent. Developers can thus identify parts of their application that have not been sufficiently tested.
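As a rough illustration of that ratio, the sketch below computes a percentage from two counts. The function name coverage_percent and the figures are hypothetical, chosen only to show the arithmetic; real tools derive the counts by tracing the test run.

```python
def coverage_percent(executed: int, total: int) -> float:
    """Return the share of executable statements that ran, as a percentage."""
    if total <= 0:
        raise ValueError("total must be a positive number of statements")
    if not 0 <= executed <= total:
        raise ValueError("executed must be between 0 and total")
    return executed * 100 / total


# Hypothetical figures: 45 of 50 executable statements ran during the tests.
print(f"{coverage_percent(45, 50):.0f}%")  # prints 90%
```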

Importance of Code Coverage Reports

Here are the main reasons to rely on code coverage reports:

- Code Refactoring: Code coverage reports reveal parts of the source code that need refactoring to improve readability and efficiency.

- Identify Untested Code: Reports highlight code that the tests never execute, including redundant code that can be identified and removed.

- Faster Debugging Process: Bugs or faulty lines of code can be detected early and fixed before the application goes live.

- Quality Assurance: Code coverage reports with high scores indicate that thorough testing was performed on a piece of software.

- Benchmark for Development Standard: High code coverage scores help set and maintain code quality across team members. They can also serve as a reference point for improvement.

What is Pytest Coverage Report?

This is a code coverage report generated by the pytest-cov plugin and coverage.py library that details the percentage of code executed by the test suite using the Python testing framework Pytest.

Why you should use Pytest to generate Code Coverage Report

Here’s why you should use Pytest to generate a code coverage report:

- It has a simple command line syntax for executing code coverage.

- It can be used both for local and remote testing.

- It differentiates between tested and untested lines of code within a codebase.

- A report of the code coverage score is generated in the command line, HTML, and other formats.

- It indicates areas of your code that need more testing.

- It is easy to use.

How to Create a Pytest Coverage Report

This section shows how to create a pytest code coverage report, which can be output to the command-line terminal or as HTML.

Prerequisites for creating Pytest Coverage Report

Before you create a Pytest coverage report, you need to meet the following requirements.

Step 1: Ensure you have both Python and Python package installer (pip) installed on your computer. Verify if Python has been installed by running this command in your terminal:

python3 --version

Verify if pip has been installed:

pip3 --version

Step 2: Create a virtual environment for your project.

python3 -m venv env

Step 3: Activate the virtual environment (the command below is for macOS and Linux):

source env/bin/activate

Step 4: Install Pytest within your project’s virtual environment.

pip install -U pytest

Step 5: Install the pytest-cov plugin.

pip install pytest-cov

Step 6: Ensure you have a project codebase and its test suite available.

Any project codebase with a test suite will do. In this section, a simple calculator class and its test suite are used to show how a pytest coverage report can be created.

Filename: calc.py

""" This is a calculator module"""


class Calculator:
    """The Calculator class """

    def validate_input(self, x, y):
        if isinstance(x, int) and isinstance(y, int):
            return [x, y]

        raise TypeError('enter an integer value not string')

    def add(self, x, y):
        x, y = self.validate_input(x, y)
        return x + y

    def minus(self, x, y):
        x, y = self.validate_input(x, y)
        return x - y

    def multiply(self, x, y):
        x, y = self.validate_input(x, y)
        return x * y

    def divide(self, x, y):
        x, y = self.validate_input(x, y)
        if y == 0:
            raise ZeroDivisionError('Enter a non-zero integer')
        return x/y

The above file calc.py contains a Calculator class with five instance methods, which will be tested and measured for coverage. The validate_input() method validates arguments before any arithmetic operation.

Other methods, add(), minus(), multiply(), and divide(), are used to carry out addition, subtraction, multiplication, and division respectively on validated arguments.

Filename: conftest.py

import pytest

from calc import Calculator


@pytest.fixture(scope='module')
def calculator_object():
    calculator = Calculator()
    yield calculator

The conftest.py file contains a fixture function, calculator_object(). The fixture yields an instance of the Calculator class, and scope='module' means one instance is shared by all tests in a module.

Filename: tests/test_calculator.py

from calc import Calculator


def test_add(calculator_object):
    assert calculator_object.add(4, 3) == 7, 'Expected result should be 7'


def test_minus(calculator_object):
    assert calculator_object.minus(10, 5) == 5, 'Expected result should be 5'


def test_multiply(calculator_object):
    assert calculator_object.multiply(4, 6) == 24, 'Expected result should be 24'


def test_divide(calculator_object):
    assert calculator_object.divide(12, 2) == 6, 'Expected result should be 6'

The test_calculator.py file holds functions that test the functionality of the Calculator class in the calc.py file.

Note: calc.py and conftest.py are placed in the project’s root directory, and test_calculator.py is placed in the tests directory inside it.

Create Pytest Code Coverage Report

There are two ways to create a pytest coverage report:

- Using the coverage.py library

- Using pytest-cov plugin

Method 1: Using the coverage.py library

Here’s how you can use the coverage.py library:

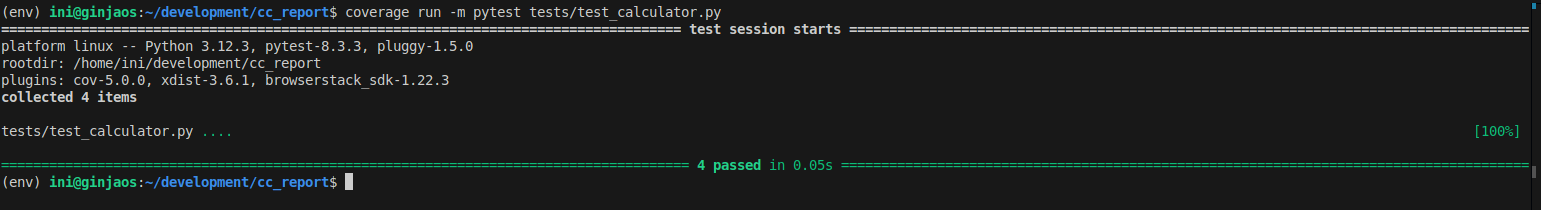

1. Run the test suite with the following command:

coverage run -m pytest tests/test_calculator.py

2. To display the coverage report in the terminal, run the command:

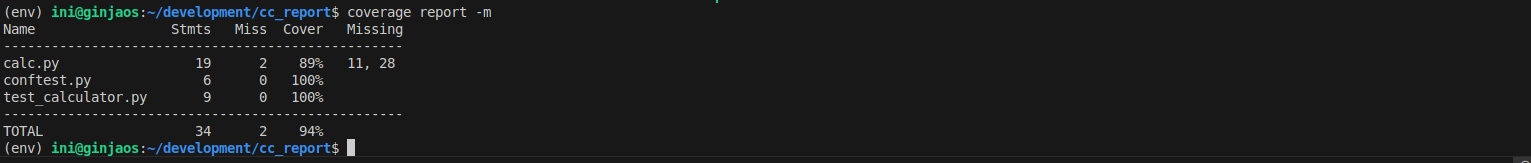

coverage report -m

3. To create a coverage report in HTML, do this:

coverage html

4. The HTML report is written to htmlcov/index.html.

Method 2: Using the pytest-cov plugin

Another way to generate a coverage report in pytest is by using the pytest-cov plugin. The process is quite similar to how it’s done with coverage.py.

1. Run the command below to create a report in the terminal:

pytest --cov

2. To generate a report in HTML, use this command:

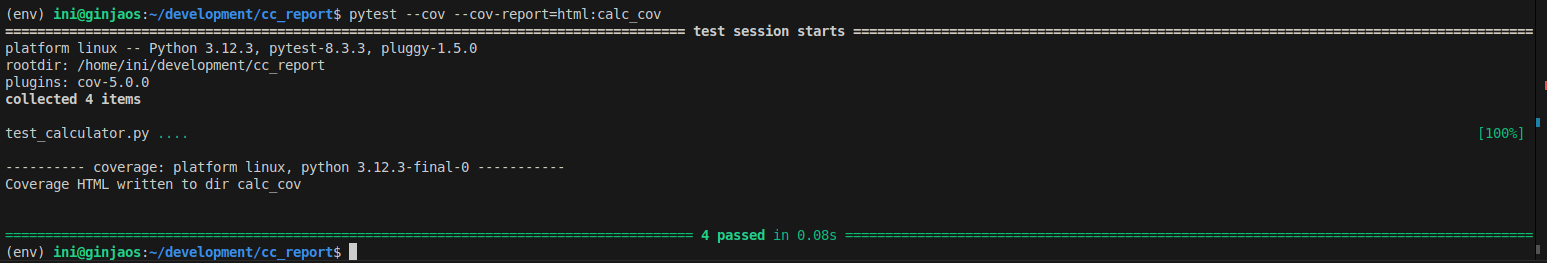

pytest --cov --cov-report=html:calc_cov

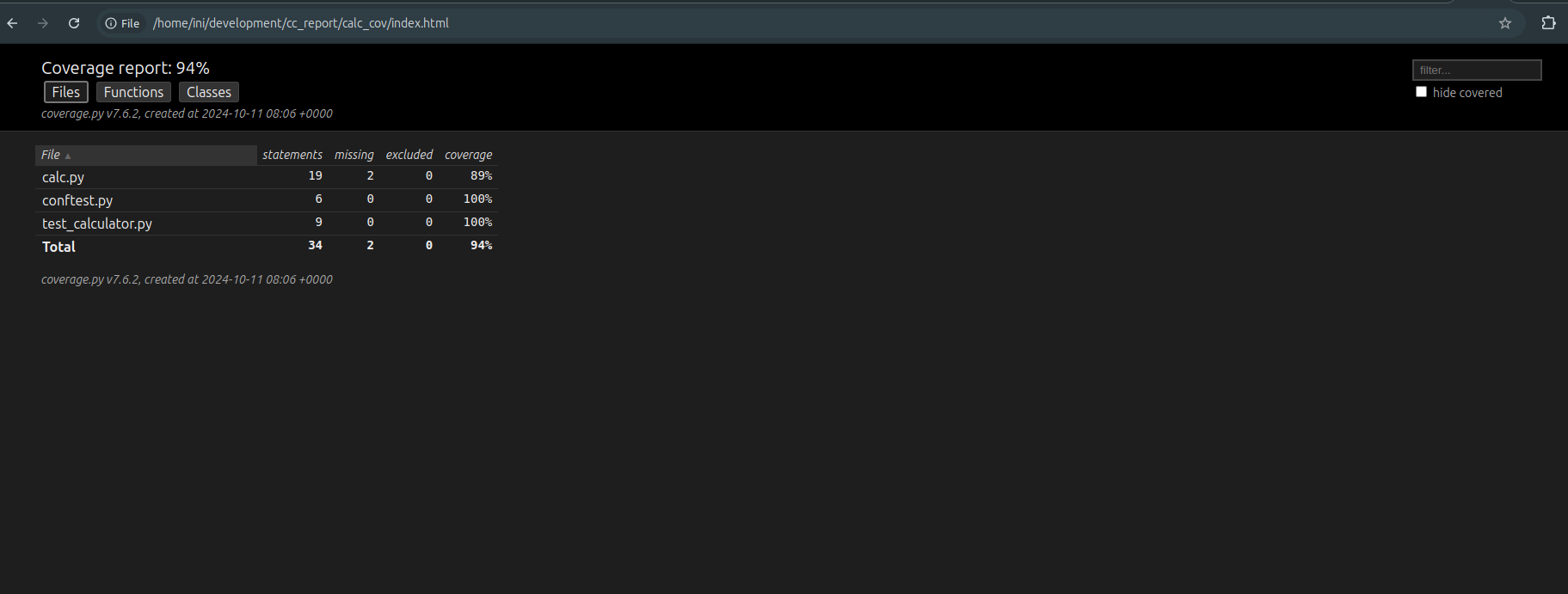

3. The additional option --cov-report=html:calc_cov specifies that the coverage report should be in HTML format and saved in a directory named calc_cov.

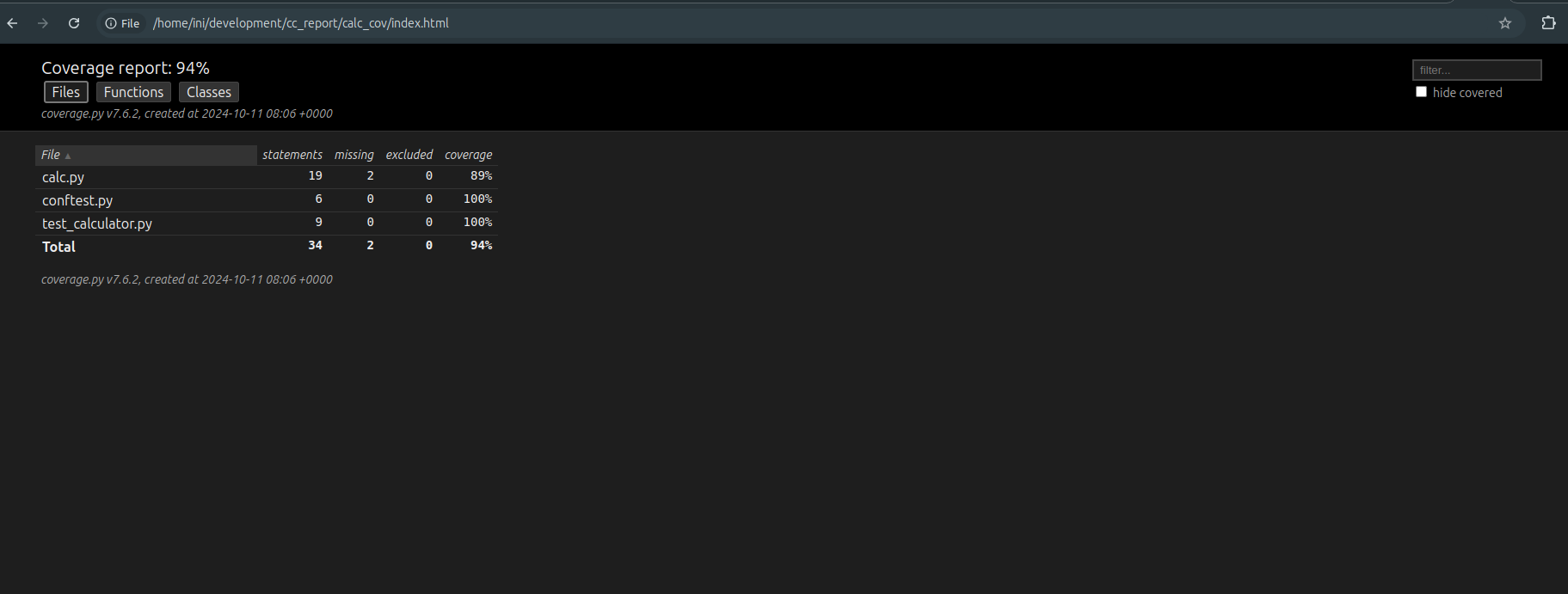

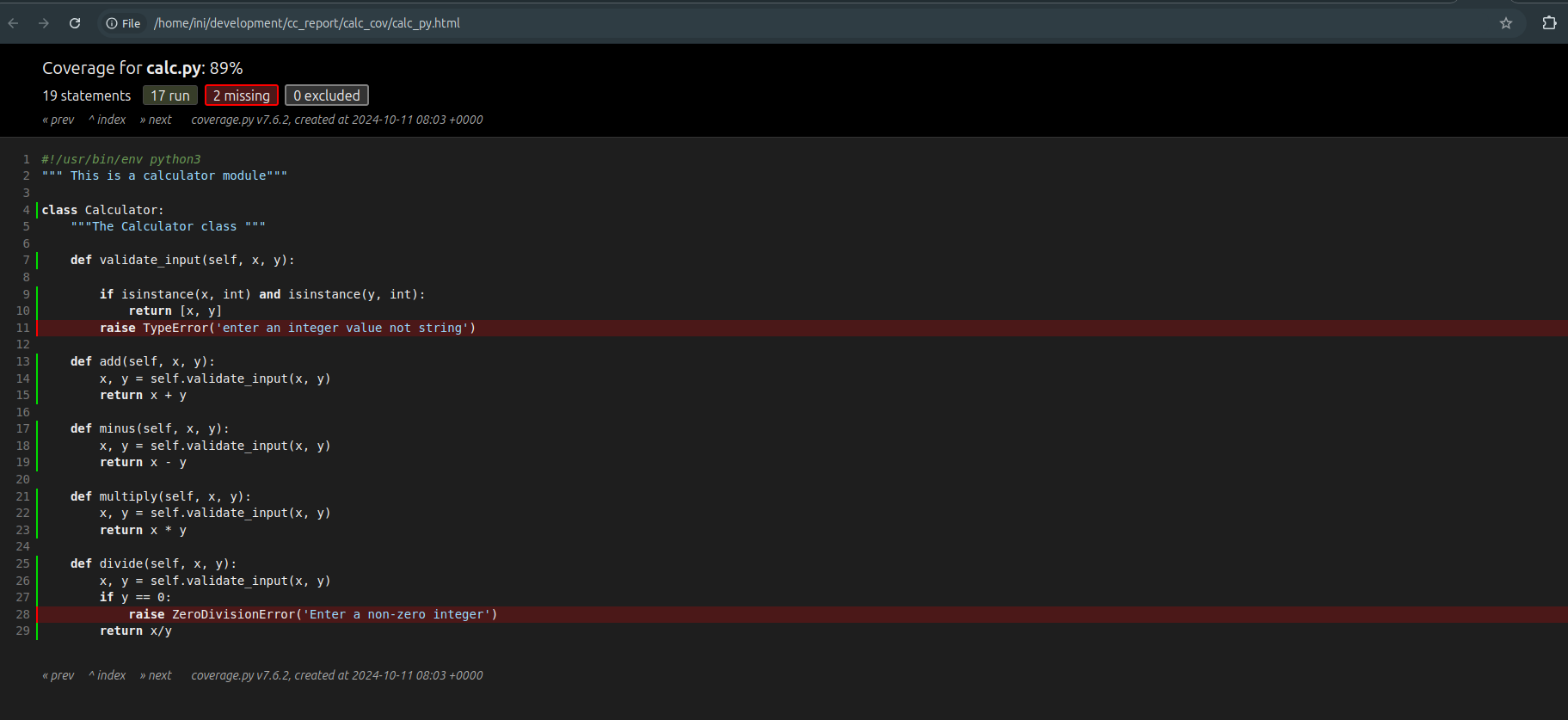

4. The report at calc_cov/index.html shows that all files except calc.py have 100% code coverage. Open the calc_cov/calc_py.html file for line-by-line details on the code coverage.

The result shows that two lines of code were skipped during the test run, hence the coverage score of 89%. Reaching 100% code coverage requires more test cases that exercise the code on lines 11 and 28.

None of the previously written test cases checks whether a TypeError or a ZeroDivisionError is raised by the Calculator class methods.

Challenges in Creating and Maintaining the Pytest Coverage Report

Although creating code coverage reports is a good software engineering practice, it also poses some challenges.

Take a look at some of these challenges.

- As a codebase grows with the addition of more features, it slowly becomes difficult to maintain high code coverage report scores.

- A high code coverage score shows that most of the code ran during testing. However, this does not rule out bugs in your application.

- Maintaining a high coverage threshold for a codebase across various teams can be very difficult with varying opinions on what a good threshold should be.

- Implementing code coverage for legacy code and optimizing for high scores can slow down overall development time.

- Miscellaneous or boilerplate code can affect the overall metrics of your code coverage report.

- Measuring coverage for every part of a large enterprise codebase can be time-consuming and impractical.
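For the boilerplate point in the list above, coverage.py by default excludes lines marked with a "# pragma: no cover" comment from the report; when the marked line introduces a block, the whole block is excluded. A minimal sketch, in which the function names are hypothetical:

```python
def add_operands(text: str) -> int:
    """Sum the whitespace-separated integers in text, e.g. '4 3' -> 7."""
    return sum(int(part) for part in text.split())


def main() -> None:  # pragma: no cover
    # Manual smoke check: this glue code is excluded from the coverage
    # figures, so it does not drag the score down.
    print(add_operands("4 3"))


if __name__ == "__main__":  # pragma: no cover
    main()
```

Only add_operands() counts toward the score here, so the metric reflects the logic that the tests are meant to exercise.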

Pytest Code Coverage Tools

Pytest code coverage tools make the generation of coverage reports easier. Mentioned below are some local tools for generating pytest code coverage reports:

1. Pytest-cov:

pytest-cov is a plugin for creating code coverage reports with pytest. It plugs into the pytest command line through options such as --cov.

It is built on coverage.py and adds extras such as subprocess support, xdist support, and consistent pytest behavior.

It can generate coverage reports on the terminal and other formats like HTML, XML, and JSON.
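These formats are selected with --cov-report options, which can be repeated in one run. As a sketch, the helper below assembles such an argument list and hands it to pytest.main(), which starts a pytest run from Python code. The helper names, the calc source target, and the threshold of 90 are illustrative assumptions; --cov, --cov-report, and --cov-fail-under are pytest-cov options.

```python
def build_cov_args(source: str, test_path: str, html_dir: str, fail_under: int) -> list[str]:
    """Assemble pytest-cov command-line options for one test run."""
    return [
        f"--cov={source}",                 # measure only this package or module
        "--cov-report=term-missing",       # terminal table with missed line numbers
        f"--cov-report=html:{html_dir}",   # HTML report written to html_dir
        f"--cov-fail-under={fail_under}",  # fail the run below this percentage
        test_path,
    ]


def run_with_coverage() -> int:
    """Run the calculator tests with coverage; needs pytest and pytest-cov installed."""
    import pytest  # imported lazily so build_cov_args() works without pytest

    args = build_cov_args("calc", "tests/test_calculator.py", "calc_cov", 90)
    return int(pytest.main(args))
```

Calling run_with_coverage() from the project root is equivalent to passing the same options to the pytest command.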

2. Coverage.py:

This is a popular Python library for outputting code coverage metrics for Python programs. It analyses the codebase to determine the lines of code that have been tested and those untested.

It is run from the command line and can print a report in the terminal or write one in HTML, XML, or JSON format when asked.
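To make the idea of tested versus untested lines concrete, the toy tracer below records which lines of a function actually run, using Python's standard sys.settrace hook. It is only a sketch of the general technique and not coverage.py's actual implementation; the helper name executed_offsets is hypothetical, and divide is a standalone copy of the calculator's division logic.

```python
import sys


def divide(x, y):
    if y == 0:
        raise ZeroDivisionError('Enter a non-zero integer')
    return x / y


def executed_offsets(func, *args):
    """Run func(*args) and return the executed line offsets from its def line."""
    code = func.__code__
    hits = set()

    def tracer(frame, event, arg):
        # Record 'line' events that belong to the function under test.
        if frame.f_code is code and event == 'line':
            hits.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)  # always switch tracing off again
    return hits


hits = executed_offsets(divide, 12, 2)
print(2 in hits)  # False: the raise line (offset 2) never ran
```

A test that only divides by non-zero values leaves the raise line out of the recorded set, which is the kind of gap a coverage report flags as a missed line.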

Use BrowserStack Test Reporting and Analytics for Pytest Coverage Report

So far, the pytest code coverage report has only been generated on our local machine. You can also run these tests with a cloud platform such as BrowserStack Test Reporting and Analytics for more efficiency.

Here’s how you can use the BrowserStack Test Reporting and Analytics feature to create a coverage report.

Step 1: Sign up on BrowserStack.

Step 2: Install the BrowserStack SDK. Execute the following command in your project’s root directory:

python3 -m pip install browserstack-sdk

browserstack-sdk setup --framework "pytest" --username "YOUR_USERNAME" --key "YOUR_ACCESS_KEY"

pip show browserstack-sdk

After executing the above commands, a browserstack.yml file will be created in the root of your project directory.

Step 3: Modify your browserstack.yml config file. Open your browserstack.yml file and modify the code below as necessary:

userName: YOUR_USERNAME
accessKey: YOUR_ACCESS_KEY
buildName: "Your static build/job name goes here"
projectName: "Your static project name goes here"
CUSTOM_TAG_1: "You can set a custom Build Tag here"
# Use CUSTOM_TAG_<N> and set more build tags as you need.
framework: pytest
testObservability: true
browserstackAutomation: false

Step 4: Run your test suite with Test Reporting and Analytics. Prepend browserstack-sdk to the command you use to run your tests. In this case, run:

browserstack-sdk pytest --cov --cov-report=html:calc_cov tests/test_calculator.py

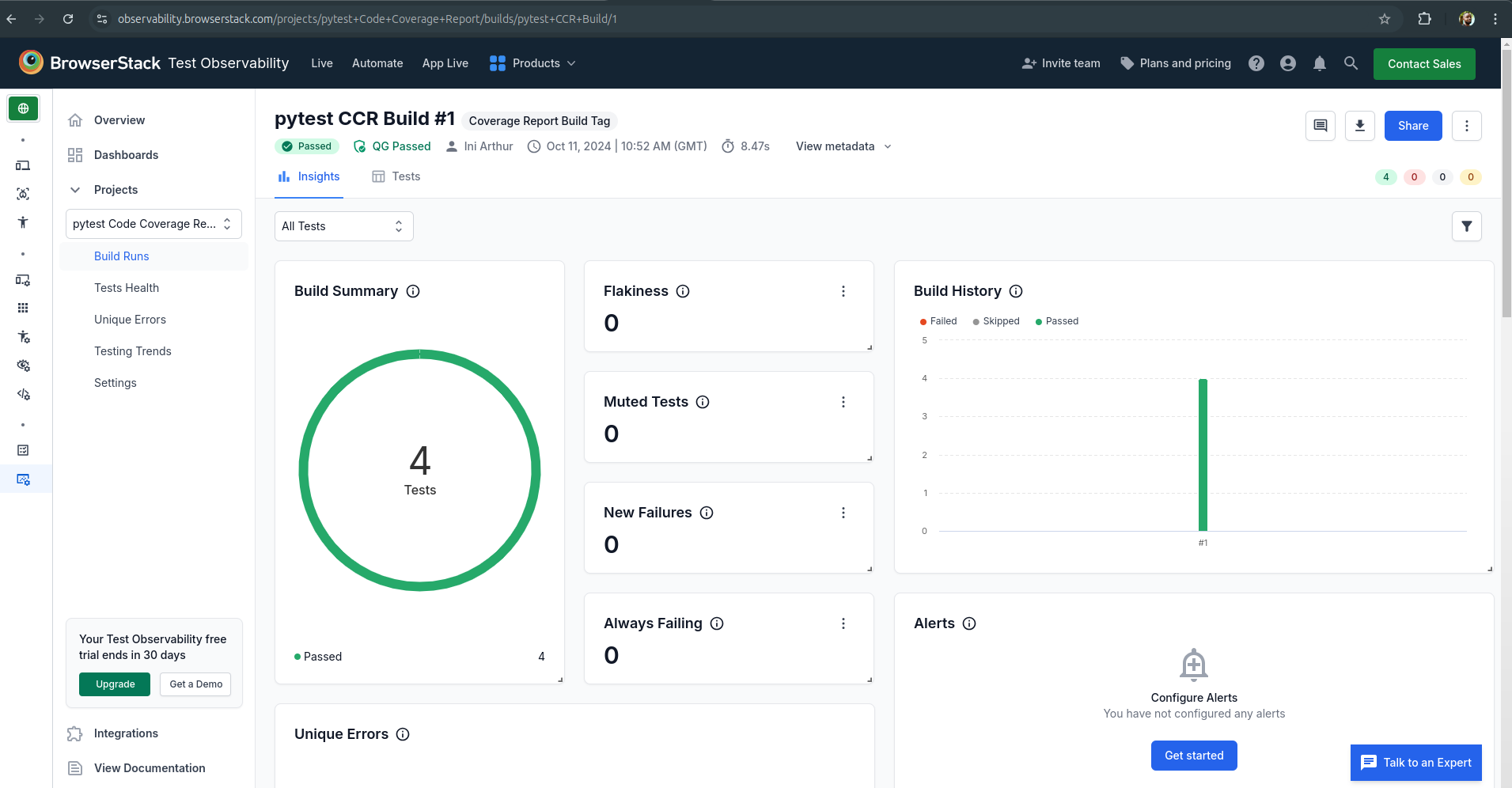

When you open your BrowserStack Test Reporting and Analytics dashboard, you should find a result similar to the one shown above.

Why Choose BrowserStack?

Using a cloud testing platform such as BrowserStack offers some of the following benefits:

- Test Scalability: It is easier to scale tests on a cloud testing platform than on your local machine.

- Ease of Collaboration: You can easily share test results and collaborate with other team members when testing on the cloud.

- Latest Browsers and Dependencies Versions: Every time you use BrowserStack, you have access to the latest infrastructure and you don’t have to manage it on your own.

- Comprehensive Test Results: Testing tools like BrowserStack offer comprehensive reporting and advanced analysis of test records.

- Better Debugging Tools: BrowserStack offers several debugging tools, such as text logs, console logs, visual logs, video recordings, session replays, Appium logs, and more, which saves time when debugging tests.

- Real-Device Testing: Test on a vast real-device cloud to maximize test coverage. BrowserStack offers 3500+ real device and browser combinations.

Conclusion

A code coverage report provides a vital metric for assessing how well a codebase is tested. A Pytest code coverage report highlights the extent to which a Python program has been exercised by a test suite. It is worth aiming for high coverage scores, even though a high score does not guarantee that your codebase or application is bug-free.

Use the Pytest code coverage tools coverage.py and pytest-cov to create coverage reports in the terminal or in richer formats like HTML. As your codebase grows, remove or refactor redundant code exposed by coverage reports.

However, if you prefer the scalability of cloud-based tools, use a tool like BrowserStack Test Reporting and Analytics to generate pytest coverage reports.