How to Avoid False Positives and False Negatives in Testing?

By Somosree Roy, Community Contributor - December 12, 2024

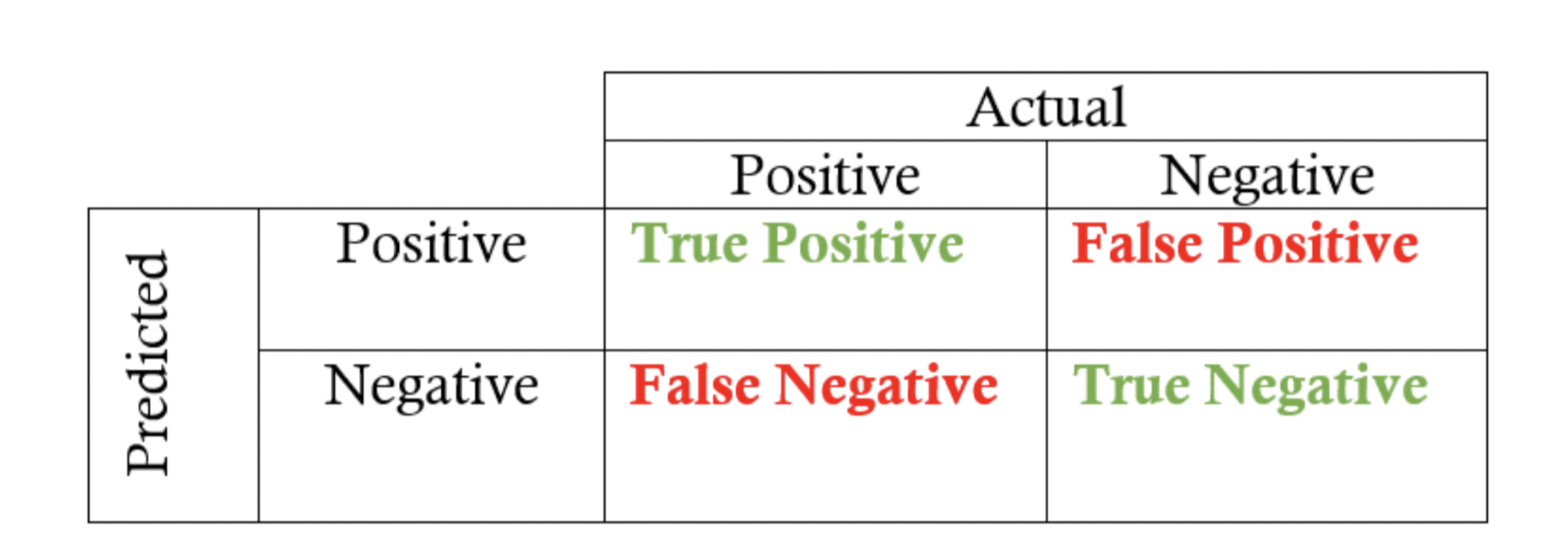

False positives and false negatives in software testing are incorrect test results.

A false positive takes place when a test identifies an issue that does not exist, while a false negative occurs when a test fails to detect an existing issue. Minimizing both false positives and false negatives is crucial when it comes to software testing. This guide discusses the ways to avoid false positives and false negatives in testing.

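- What is False Positive in Software Testing?

- What is False Negative in Software Testing?

- False Positive and False Negative Examples

- Finding False Positive and False Negative

  - How to Find False Positive

  - How to Find False Negative

- Best Practices For Avoiding False Positives & False Negatives

- Why Do False Positives and False Negatives Occur?

- Conclusion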

What is False Positive in Software Testing?

A false positive is a situation where the test incorrectly identifies an issue that does not actually exist.

False positives can cause project delays, missed deadlines, and increased expenses, because developers must spend extra effort tracking down issues that do not exist. A steady stream of false alarms can also lead them to ignore real issues.

What is False Negative in Software Testing?

A false negative is a situation where a test fails to detect a bug or issue that actually exists.

This can mislead developers into releasing flawed software that harms the user experience through operational failures, data breaches, and similar problems. Avoiding false negatives is therefore essential to software reliability and security.

False Positive and False Negative Examples

Here are examples of false positives and false negatives:

False Positive Example

A test case reports an error: the sign-in form appears to fail to submit the user’s details when the ‘Submit’ button is clicked.

In reality, the login works perfectly well. The server simply took longer to respond than the script expected, and the automation script misinterpreted the delay as an error.
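The timing problem behind this example can be sketched in a few lines. The sketch assumes a hypothetical `FakeLoginPage` whose success banner appears only after a simulated server delay. A check that looks exactly once after a fixed short pause reports a failure even though login works, while a check that polls until a timeout does not. Real UI frameworks ship their own explicit-wait utilities (for example, Selenium’s `WebDriverWait`); this is only an illustration of the idea.

```python
import time


class FakeLoginPage:
    """Hypothetical page: the success banner appears only after a server delay."""

    def __init__(self, server_delay_s: float) -> None:
        self.server_delay_s = server_delay_s
        self.submitted_at = None

    def click_submit(self) -> None:
        self.submitted_at = time.monotonic()

    def success_banner_visible(self) -> bool:
        if self.submitted_at is None:
            return False
        return time.monotonic() - self.submitted_at >= self.server_delay_s


def check_with_fixed_wait(page: FakeLoginPage, wait_s: float) -> bool:
    # Brittle: looks exactly once after a fixed pause.
    page.click_submit()
    time.sleep(wait_s)
    return page.success_banner_visible()


def check_with_polling(page: FakeLoginPage, timeout_s: float, poll_s: float = 0.05) -> bool:
    # More robust: keeps checking until the banner appears or the timeout expires.
    page.click_submit()
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if page.success_banner_visible():
            return True
        time.sleep(poll_s)
    return page.success_banner_visible()


if __name__ == "__main__":
    # Login works, but the simulated server takes 0.3 s to respond.
    print(check_with_fixed_wait(FakeLoginPage(0.3), wait_s=0.1))  # False: a false positive
    print(check_with_polling(FakeLoginPage(0.3), timeout_s=2.0))   # True
```

Polling with an upper bound keeps the test fast when the app responds quickly, while still tolerating normal latency.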

False Negative Example

An automation test case validates that a ‘Reset Password’ feature sends an email to reset the password successfully.

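However, the feature is actually broken, and no emails are being sent. The test fails to detect this because of wrong validation logic in the script, for example a check that confirms only the on-screen success message rather than whether an email was actually sent.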
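A sketch of how weak validation hides this defect, assuming a hypothetical `reset_password` function and an in-memory `FakeMailer` standing in for a real mail service. The weak check looks only at the confirmation message; the stronger check verifies that an email was actually sent.

```python
class FakeMailer:
    """Hypothetical in-memory mail service that records sent messages."""

    def __init__(self) -> None:
        self.outbox: list[tuple[str, str]] = []

    def send(self, to: str, subject: str) -> None:
        self.outbox.append((to, subject))


def reset_password(email: str, mailer: FakeMailer) -> str:
    # Hypothetical broken feature: shows a confirmation but never sends the email.
    # The missing call would be: mailer.send(email, "Reset your password")
    return "If an account exists, a reset link has been sent."


def weak_test(mailer: FakeMailer) -> bool:
    # Wrong validation logic: only checks the message shown to the user.
    message = reset_password("user@example.com", mailer)
    return "reset link has been sent" in message


def strong_test(mailer: FakeMailer) -> bool:
    # Checks the actual outcome: exactly one reset email went to the right address.
    reset_password("user@example.com", mailer)
    return mailer.outbox == [("user@example.com", "Reset your password")]


if __name__ == "__main__":
    print(weak_test(FakeMailer()))    # True: passes despite the bug (false negative)
    print(strong_test(FakeMailer()))  # False: the bug is caught
```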

Finding False Positive and False Negative

Here’s how you can identify false positives and false negatives:

How to Find False Positive

A false positive occurs when the functionality under test works correctly, yet the test result reports an error.

Reducing false positives is essential in any testing environment. For reliable automated testing, check the initial conditions just as thoroughly as the final ones. A test case carries out a specific set of operations on specific input data and checks for the expected output. For the test to report a failure only when something is genuinely wrong, it must start from the intended state: make sure the test begins where it should and that the input has not deviated from its predetermined state.

Left unchecked, false positives can add significant cost to enterprise testing, as teams hunt for bugs that don’t exist in the first place. Engineers may also lose faith in the test suite and even remove critical features, believing they contain errors when they don’t.

- The fault may lie either with the system or with the test data.

- The input fed to the test might already exist, for example a record left over from a previous run, which can cause the test suite to throw an error.

- Making sure that everything that could affect the outcome is in place and functioning correctly reduces the proportion of reported errors that aren’t real errors.
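The “input already exists” case can be sketched as follows. `UserStore`, `DuplicateUserError`, and both test functions are hypothetical stand-ins for a real system. A test that always registers the same hard-coded email fails on its second run because the account survives from the first run, even though registration works. Generating unique test data and asserting the precondition before acting removes this source of false alarms.

```python
import uuid


class DuplicateUserError(Exception):
    pass


class UserStore:
    """Hypothetical store whose state persists between test runs."""

    def __init__(self) -> None:
        self.emails: set[str] = set()

    def register(self, email: str) -> None:
        if email in self.emails:
            raise DuplicateUserError(email)
        self.emails.add(email)


def brittle_registration_test(store: UserStore) -> bool:
    # Hard-coded input: fails on every run after the first, though registration works.
    try:
        store.register("test@example.com")
        return True
    except DuplicateUserError:
        return False


def robust_registration_test(store: UserStore) -> bool:
    # Unique input per run, plus an explicit precondition check before acting,
    # so a dirty environment is reported as such rather than as a feature bug.
    email = f"test-{uuid.uuid4().hex}@example.com"
    assert email not in store.emails, "precondition failed: test user already exists"
    store.register(email)
    return email in store.emails


if __name__ == "__main__":
    shared = UserStore()  # state survives between "runs"
    print(brittle_registration_test(shared), brittle_registration_test(shared))  # True False
    print(robust_registration_test(shared), robust_registration_test(shared))    # True True
```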

How to Find False Negative

A false negative is a bug that passes a test with a green signal. Imagine how critical this can be: your system actually has an error, but the tests indicate it is safe to go ahead. A website that passed its tests this way could go live with many backend bugs, producing incorrect output as users browse it.

This is even more critical than a false positive, since you might release the software with a serious defect that nobody noticed.

One smart way to detect potential false negatives is to inject synthetic faults into the software and confirm that the test case identifies each problem. This is similar to mutation analysis. However, fault injection can be challenging without a developer’s support, and it is expensive to create, compile, and deploy each fault and confirm that the test catches it. In many instances, you can achieve the same effect by modifying the data or experimenting with other options instead.

- The aim is a robust test, so deliberately try to make it fail over multiple iterations.

- This process can’t be applied to every automated test case, because it is costly and time-consuming.

- If you adopt this measure to detect false negatives, apply it only to the most critical test cases.
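A minimal sketch of the fault-injection idea behind mutation analysis: make a small deliberate change (a “mutant”) to a copy of the code and check whether a test fails against it. A test that still passes against the mutant could produce a false negative for that kind of bug. The `is_adult` function and both tests are hypothetical; dedicated mutation-testing tools automate generating and running mutants at scale.

```python
from typing import Callable

Predicate = Callable[[int], bool]


def is_adult(age: int) -> bool:
    """Code under test: adults are 18 or older."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """Injected fault: '>=' mutated to '>'."""
    return age > 18


def weak_test(fn: Predicate) -> bool:
    # Only checks values far from the boundary.
    return fn(30) is True and fn(5) is False


def boundary_test(fn: Predicate) -> bool:
    # Also checks the boundary, where the mutant behaves differently.
    return fn(18) is True and fn(17) is False


def kills_mutant(test: Callable[[Predicate], bool]) -> bool:
    # A useful test passes on the original code and fails on the mutant.
    return test(is_adult) and not test(is_adult_mutant)


if __name__ == "__main__":
    print(kills_mutant(weak_test))      # False: the injected fault slips through
    print(kills_mutant(boundary_test))  # True: the injected fault is detected
```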

Best Practices For Avoiding False Positives & False Negatives

It is better to prevent false positives and false negatives than to hunt for them afterwards through alterations, because both disrupt smooth testing. Take a close look at the recommended techniques and practices for avoiding them.

Write Better Test Cases

To avoid both kinds of false results, write test cases with great care and establish a reliable test plan and testing environment.

Ask the following before writing a test case:

- What portion of the code are you going to test?

- How many ways may that code go wrong?

- How would you know if anything unexpected occurred?

When writing unit test scenarios, create both positive and negative test cases (commonly known as happy path and unhappy path cases). Your tests aren’t complete unless they cover both paths.
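A sketch of both paths using Python’s built-in `unittest` module, with a hypothetical `parse_age` function that should accept valid ages and reject invalid input.

```python
import unittest


def parse_age(text: str) -> int:
    """Hypothetical function under test: parse a non-negative integer age."""
    value = int(text)  # raises ValueError for non-numeric input
    if value < 0:
        raise ValueError("age cannot be negative")
    return value


class ParseAgeTests(unittest.TestCase):
    # Happy path: valid input produces the expected value.
    def test_valid_age(self) -> None:
        self.assertEqual(parse_age("42"), 42)

    # Unhappy paths: invalid input must be rejected, not silently accepted.
    def test_non_numeric_input_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            parse_age("forty-two")

    def test_negative_age_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            parse_age("-1")
```

Run it with `python -m unittest`. Without the two unhappy-path tests, a version of `parse_age` that accepted negative ages would still pass.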


Review Test Cases

Before sending test cases for automation, review them and keep track of every modification. Generic test cases serve no purpose: each test case should be tailored to the functionality being tested and should report appropriate errors and failures.

To write, organize, and manage test cases effectively, collaborate easily with your team, and execute tests seamlessly across various environments, you can use tools like BrowserStack’s Test Management.

With such a tool, you can create, manage, and track all your test cases and test runs painlessly. You can obtain insightful reports on the test run status and integrate this tool with your test automation suites.

Minimize Complex Logic

Keep automated tests as simple as possible and limit the logic (loops, conditionals, calculations) implemented in test code. Nothing validates the test code itself, so any logic it contains can hide bugs of its own and make the test susceptible to false results.
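The risk can be illustrated with a hypothetical `apply_discount` function that contains a bug. A check that recomputes the expected value using the same formula repeats the bug and passes; a check with a simple, hand-worked expected value catches it.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test, with a bug: divides by 10 instead of 100."""
    return price - price * percent / 10


def check_with_logic() -> bool:
    # Expected value recomputed with the same (wrong) formula: the bug is mirrored.
    price, percent = 200.0, 10.0
    expected = price - price * percent / 10
    return apply_discount(price, percent) == expected


def check_with_literal() -> bool:
    # Expected value worked out by hand: 10% off 200 is 180.
    return apply_discount(200.0, 10.0) == 180.0


if __name__ == "__main__":
    print(check_with_logic())    # True: a false negative
    print(check_with_literal())  # False: the bug is detected
```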

Randomization Of Test Cases

Randomizing your test cases is one strategy for detecting false negatives. Instead of hardcoding input values, generate sufficiently randomized input data when testing your code. As an example, suppose you have a function that computes square roots, and it contains a defect.

The function returns the right answer for the square root of 25, but incorrect output for other values. If your unit test checks the square root of 25 every time, it misses this error and the results are biased. A better method is to choose a number at random, square it, pass the squared value to your function, and check whether it returns the original number.
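The square-root scenario can be sketched as follows. `buggy_sqrt` is a hypothetical defective implementation that happens to be correct for 25; the fixed-input check misses the defect, while the randomized check (pick `n`, square it, expect `n` back) catches it.

```python
import math
import random
from typing import Callable, Optional


def buggy_sqrt(x: float) -> float:
    """Hypothetical defective implementation: correct only for 25 (and 0)."""
    return x / 5


def fixed_input_test(sqrt_fn: Callable[[float], float]) -> bool:
    # Always checks the same hard-coded value, so the defect goes unnoticed.
    return math.isclose(sqrt_fn(25), 5)


def randomized_test(sqrt_fn: Callable[[float], float],
                    trials: int = 100,
                    seed: Optional[int] = None) -> bool:
    # Pick a random n, square it, and check that sqrt_fn recovers n.
    rng = random.Random(seed)  # seeded so a failing run can be reproduced
    for _ in range(trials):
        n = rng.uniform(0.1, 1000.0)
        result = sqrt_fn(n * n)
        if not math.isclose(result, n, rel_tol=1e-9):
            print(f"seed={seed}: sqrt({n * n}) returned {result}, expected {n}")
            return False
    return True


if __name__ == "__main__":
    print(fixed_input_test(buggy_sqrt))         # True: defect missed
    print(randomized_test(buggy_sqrt, seed=1))  # False, after printing the failing input
    print(randomized_test(math.sqrt, seed=1))   # True
```

Recording the seed matters: a randomized failure that cannot be replayed is hard to debug. Property-based testing libraries such as Hypothesis automate this style of testing.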

- Randomization Of Unit Test Order: If all of your tests are meant to be completely independent of one another, run them in random order. When tests are state-driven, the state left behind by one test may affect another, and a random order helps expose these hidden dependencies.

- Change in Associated Code: Even a small modification to the source code should be accompanied by corresponding changes to the associated code, such as its tests. This ensures the tests still behave correctly when run against the entire codebase.
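A sketch of why random test order helps, using two hypothetical test cases that share module-level state. The second case passes only if it runs before the first, so a fixed order can hide the dependency; shuffling with a recorded seed exposes it. The runner below is a simplified stand-in; plugins such as pytest-randomly provide shuffling for real suites.

```python
import random
from typing import Callable

# Shared, mutable state: the hidden coupling between the two test cases below.
CART: list[str] = []


def case_add_item() -> None:
    CART.append("book")
    assert CART == ["book"]


def case_cart_starts_empty() -> None:
    # Implicitly assumes that no other test has touched CART yet.
    assert CART == []


def run_in_order(tests: list[Callable[[], None]]) -> dict[str, bool]:
    """Run tests in the given order; state is reset once per run, not per test."""
    CART.clear()
    results: dict[str, bool] = {}
    for test in tests:
        try:
            test()
            results[test.__name__] = True
        except AssertionError:
            results[test.__name__] = False
    return results


def run_shuffled(tests: list[Callable[[], None]], seed: int) -> dict[str, bool]:
    """Run tests in a random order; the seed makes the order reproducible."""
    order = list(tests)
    random.Random(seed).shuffle(order)
    return run_in_order(order)


if __name__ == "__main__":
    tests = [case_cart_starts_empty, case_add_item]
    print(run_in_order(tests))  # both pass in this fixed order
    for seed in range(20):
        results = run_shuffled(tests, seed)
        if not all(results.values()):
            print(f"Order-dependent failure with seed={seed}: {results}")
            break
    else:
        print("No order dependence found in 20 shuffled runs")
```

The fix is to give each test fresh state (for example, resetting `CART` before every test, as a `unittest` `setUp` method would), not to pin the order.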


Choose the Right Automation Environment

A testing environment for automation can make or break your tests. Choose trustworthy automation testing tools and be extremely careful when establishing the test environment.

A strong test plan and an effective automation tool can drastically reduce the number of false test results.

Why Do False Positives and False Negatives Occur?

Here are the reasons why false positives and false negatives occur:

Reasons for False Positives

- Test cases with incorrect logic can lead to the identification of issues that do not exist.

- Variations in test environments caused by slow servers, network latency, etc., can trigger false alarms.

- If APIs or any external dependencies fail to function during the test, incorrect error detection can occur.

Reasons for False Negatives

- When test scripts fail to validate all relevant conditions, false negatives can occur.

- The failure of testing tools to detect specific types of defects can also lead to false negatives.

- Due to improper configurations, some tests can be skipped or disabled, which can also leave issues undetected.
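One way to guard against silently skipped tests is to inspect the test result instead of relying on the overall pass/fail status alone. The sketch uses Python’s `unittest`, whose `TestResult.skipped` list records skipped tests; the `PAYMENT_SANDBOX_URL` variable, the test cases, and the “no skips allowed” gate are hypothetical, and such a gate may be too strict for some suites.

```python
import os
import unittest


def cart_total(prices: list) -> float:
    """Hypothetical function under test."""
    return sum(prices)


class CheckoutTests(unittest.TestCase):
    def test_cart_total(self) -> None:
        self.assertEqual(cart_total([2.5, 2.5]), 5.0)

    # Hypothetical: if PAYMENT_SANDBOX_URL is missing or misspelled in CI,
    # this test is silently skipped and the suite still reports success.
    @unittest.skipUnless(os.environ.get("PAYMENT_SANDBOX_URL"), "payment sandbox not configured")
    def test_payment(self) -> None:
        self.assertTrue(True)  # placeholder for a real payment check


def run_suite(case: type) -> unittest.TestResult:
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case)
    result = unittest.TestResult()
    suite.run(result)
    return result


def passes_gate(result: unittest.TestResult) -> bool:
    # Stricter than wasSuccessful(): any skipped test also fails the gate.
    return result.wasSuccessful() and not result.skipped


if __name__ == "__main__":
    result = run_suite(CheckoutTests)
    print("wasSuccessful:", result.wasSuccessful())
    for test, reason in result.skipped:
        print("SKIPPED:", test.id(), "-", reason)
    print("passes gate:", passes_gate(result))
```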

Conclusion

Automation testing saves time and money only when false positives and false negatives are kept under control. Both need attention in visual, software, and app testing: false positives waste effort and erode trust in the test suite, while false negatives let real defects reach users.

- Implement iterative testing, introduce trustworthy and well-planned QA methods, and adhere to test optimization techniques to prevent false positives and false negatives.

- Opt for automated mobile app testing on proven, trustworthy testing platforms: ones that keep test code stable and processes robust.

Also, note that it’s crucial to test on a real device cloud that facilitates manual, automated, visual, parallel, and regression testing on a robust infrastructure.

On the BrowserStack infrastructure, you can access:

- BrowserStack Automate: Run your UI test suite in minutes with parallelization on a real browser and device cloud.

- BrowserStack App Automate: Automated mobile app testing on real mobile devices.

- Percy Visual Testing: Integrate Percy visual engine into your SDLC and review changes with every commit.

- BrowserStack Enterprise: Ship quality releases at the speed of Agile and enable your teams to test continuously, at scale.