Data virtualization streamlines real-time access to diverse data sources, enabling faster insights and reducing the need for complex data movement processes.

Overview

What is Data Virtualization?

Data virtualization is a technology that allows users to access, integrate, and manipulate data from multiple sources in real time without the need for physical data movement or replication.

Why is Data Virtualization Important?

- Enables on-demand access to live data from multiple sources.

- Speeds up insights by eliminating the need for data replication.

- Reduces infrastructure costs by avoiding redundant storage.

- Adapts easily to changing business needs and data sources.

Difference between Data Virtualization and ETL

Data virtualization provides real-time access to data without replication, while ETL physically extracts, transforms, and loads data into a separate repository.

Data Virtualization Use Cases

- Data Integration

- Real-time analytics and reporting

- Self-Serve BI (Business Intelligence)

- Identifying Business or Production Issues

- Mask volatility in Data Sources

- Test Data Management with Data Virtualization

What is a Data Virtualization Tool?

A data virtualization tool creates a virtual layer that enables users to query, integrate, and analyze data from multiple sources, eliminating the need for physical replication or movement.

This comprehensive guide helps you understand the basics of data virtualization, its importance, the differences between ETL, data visualization, and data virtualization, benefits, challenges, and more.

What is Data Virtualization?

Data virtualization is a data management technique that enables real-time integration and access to data across multiple sources, providing a unified view without the need for physical data movement or replication.

It creates a unified access layer for enterprise data, seamlessly integrating information from diverse sources, including:

- Data warehouses

- Data lakes

- Databases

- Cloud and enterprise applications

- Data files

Data virtualization is one of the latest approaches to data integration. Earlier, ETL was used to address these integration needs. However, it struggled with unstructured or diverse data.

Data virtualization is an agile, economical, and flexible alternative to the ETL method. This approach allows real-time access to the source system while keeping the data in the same location.

It relies on data cloning: the platform snapshots the source data and creates a virtual copy that behaves like the physical one. In a nutshell, data virtualization uses these virtual clones to let users gather information from diverse systems, then transforms and delivers it in real time.
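The unified access layer described above can be sketched in a few lines. This is a minimal illustration rather than how any particular product works: two in-memory SQLite databases stand in for separate source systems, and the names `crm`, `sales`, and `customer_revenue` are hypothetical.

```python
import sqlite3

# One connection with two attached in-memory databases, each standing in
# for an independent source system (hypothetical names: crm, sales).
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")
conn.execute("ATTACH DATABASE ':memory:' AS sales")

conn.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE sales.orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])
conn.executemany(
    "INSERT INTO sales.orders VALUES (?, ?, ?)",
    [(10, 1, 250.0), (11, 1, 100.0), (12, 2, 75.0)],
)

# The view stores only a query definition. No rows are copied out of either
# source; the join runs against the live tables each time the view is read.
conn.execute("""
    CREATE TEMP VIEW customer_revenue AS
    SELECT c.name AS customer, SUM(o.amount) AS revenue
    FROM crm.customers AS c
    JOIN sales.orders AS o ON o.customer_id = c.id
    GROUP BY c.name
""")

def revenue_by_customer():
    """Read the unified view; callers never name the underlying sources."""
    return conn.execute(
        "SELECT customer, revenue FROM customer_revenue ORDER BY customer"
    ).fetchall()
```

Because the view holds no data of its own, a row written to `sales.orders` after the view was created shows up the next time `revenue_by_customer()` is called.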

Why is Data Virtualization Important?

Data Virtualization is essential because:

- It provides real-time access to diverse data sources without replication.

- It reduces infrastructure costs and simplifies data management.

- It speeds up decision-making by unifying data from multiple sources.

- It increases agility, allowing quick responses to changing data needs.

Common Data Sources Virtualized Through Data Virtualization Tools

Data virtualization tools connect and integrate various data sources, enabling seamless access and analysis. Common data sources include:

- Relational Databases: Traditional databases like Oracle, MySQL, Microsoft SQL Server, and PostgreSQL.

- Big Data Systems: Platforms such as Hadoop, Apache Spark, and NoSQL databases like MongoDB and Cassandra.

- Cloud Data Sources: Cloud-based storage and databases, including Amazon S3, Google BigQuery, Microsoft Azure SQL Database, and Snowflake.

- Enterprise Applications: Systems like SAP, Salesforce, Workday, and Oracle ERP.

- Data Warehouses: Centralized repositories like Amazon Redshift, Google Cloud BigQuery, and Teradata.

- Streaming Data Sources: Real-time data feeds from Apache Kafka, RabbitMQ, or IoT data streams.

- Flat Files: CSV, JSON, XML, or Excel files stored locally or on shared network drives.

- Web Services and APIs: REST and SOAP APIs providing real-time or on-demand data from external systems.

- IoT Data Sources: Data collected from Internet of Things (IoT) devices, sensors, and connected systems.

- Unstructured Data: Sources such as emails, logs, social media feeds, or documents stored in content management systems.

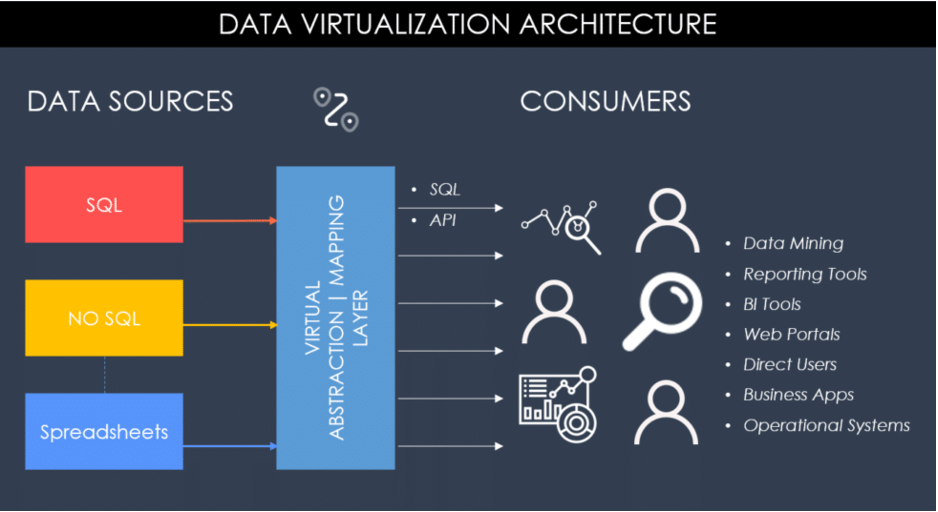

Layers in Data Virtualization Architecture

Data virtualization architecture typically includes the following key layers:

1. Data Source Layer

- Connects to diverse data sources (databases, cloud storage, applications).

- Manages data access without moving or replicating it.

2. Data Abstraction Layer

- Provides a unified, virtualized view of data across sources.

- Hides underlying complexity, making data easier to access.

3. Data Transformation and Integration Layer

- Transforms, cleans, and integrates data in real time.

- Applies business logic and rules for consistent data presentation.

4. Data Access and Delivery Layer

- Delivers data to users, applications, and analytics tools.

- Supports various access methods (e.g., SQL, APIs) for flexibility.

5. Metadata and Governance Layer

- Manages metadata, data lineage, and governance policies.

- Ensures data security, compliance, and quality control.

6. Security Layer

- Controls access, encryption, and authentication.

- Protects sensitive data across virtualized sources.
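To make the layering more concrete, here is a toy sketch that collapses several of the layers above into a few Python objects. Everything in it is an assumption made for illustration: the connector functions, the `VirtualLayer` class, and the role names are hypothetical and do not correspond to any vendor's API.

```python
import csv
import io
import sqlite3

# Data source layer: each connector returns a callable that reads its
# source on demand, so nothing is copied until a query actually runs.
def sqlite_connector(conn, table):
    def read():
        cursor = conn.execute(f"SELECT * FROM {table}")
        columns = [col[0] for col in cursor.description]
        return [dict(zip(columns, row)) for row in cursor]
    return read

def csv_connector(text):
    def read():
        return [dict(row) for row in csv.DictReader(io.StringIO(text))]
    return read

class VirtualLayer:
    """Abstraction, delivery, and security layers collapsed into one toy class."""

    def __init__(self):
        self._views = {}   # abstraction layer: logical name -> reader
        self._hidden = {}  # security layer: role -> columns to hide

    def register(self, name, reader):
        self._views[name] = reader

    def hide(self, role, column):
        self._hidden.setdefault(role, set()).add(column)

    def query(self, name, role):
        # Delivery layer: read live rows, then drop columns the role may not see.
        hidden = self._hidden.get(role, set())
        return [
            {key: value for key, value in row.items() if key not in hidden}
            for row in self._views[name]()
        ]

# Hypothetical sources: an HR database and a CSV export.
hr_db = sqlite3.connect(":memory:")
hr_db.execute("CREATE TABLE employees (id INTEGER, name TEXT, salary INTEGER)")
hr_db.execute("INSERT INTO employees VALUES (1, 'Ada', 120000)")

layer = VirtualLayer()
layer.register("employees", sqlite_connector(hr_db, "employees"))
layer.register("offices", csv_connector("city,desks\nLondon,40\n"))
layer.hide("analyst", "salary")
```

Calling `layer.query("employees", "analyst")` returns the employee rows without the `salary` column, while a role with no hidden columns sees every field. A real platform would also cover metadata, lineage, and query pushdown, which this sketch omits.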

How does Data Virtualization Work?

Here is an overview of how data virtualization works:

Step 1: Data Abstraction

Abstracts data from various sources into a unified virtual layer for seamless access.

Step 2: Data Integration

Integrates data from multiple systems (databases, cloud, APIs) without physical movement.

Step 3: Querying and Transformation

Allows real-time querying using SQL, with on-the-fly transformation and federation of data.

Step 4: Real-time Access

Provides real-time or near-real-time access to up-to-date data without needing batch processing.

Step 5: Data Governance and Security

Centralizes security and governance, managing access control and compliance across all data sources.

Step 6: Performance Optimization

Improves performance through caching, query optimization, and data indexing.

Step 7: User Access

Gives access to users by providing a unified view of data, regardless of its source.
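Steps 2, 3, and 6 above can be sketched together: a federated query combines two sources at read time, and a small time-based cache avoids hitting the sources on every call. The class `CachedView`, the fetch functions, and the 30-second lifetime used later are assumptions for illustration only; real platforms use far more elaborate caching and query optimization.

```python
import time

class CachedView:
    """Caches the result of a federated query for ttl_seconds."""

    def __init__(self, query, ttl_seconds, clock=time.monotonic):
        self._query = query
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the expiry can be tested
        self._result = None
        self._expires_at = None

    def rows(self):
        now = self._clock()
        if self._expires_at is None or now >= self._expires_at:
            # Cache miss or expired entry: go back to the live sources.
            self._result = self._query()
            self._expires_at = now + self._ttl
        return self._result

# Counters show how often each (hypothetical) source is actually contacted.
source_calls = {"inventory": 0, "prices": 0}

def fetch_inventory():
    source_calls["inventory"] += 1
    return {"sku-1": 4, "sku-2": 0}

def fetch_prices():
    source_calls["prices"] += 1
    return {"sku-1": 9.5, "sku-2": 20.0}

def stock_value():
    """Federated query: join both sources on SKU and transform on the fly."""
    inventory = fetch_inventory()
    prices = fetch_prices()
    return {sku: qty * prices[sku] for sku, qty in inventory.items()}
```

Wrapping the query as `CachedView(stock_value, ttl_seconds=30)` means repeated calls to `rows()` within 30 seconds reuse one result. The trade-off is freshness: a longer lifetime reduces load on the sources but moves the view further from real time.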

Benefits of Data Virtualization

Here are some of the notable benefits of Data Virtualization:

- The virtual clones use minimal storage in comparison to the source data.

- Snapshotting the source data is a very fast process that can be done in seconds.

- Connectivity with various types of data sources is possible with data virtualization. Data virtualization can be used with structured sources such as relational database management systems, semi-structured sources such as web services, and unstructured data sources such as file documents.

- Simplifies data management and reduces system workload by creating virtual clones.

- Usually requires only a single-pass data cleansing operation. External data cleaning and data quality solutions can also be integrated through APIs.

- Data Virtualization is highly adept at reading and transforming unstructured or semi-structured data.

- Depending on the source and type of data, data virtualization solutions have built-in mechanisms for accessing data. This provides transparency for users who are generating analytics or reports from these data sources.

- Since users only have access to virtual clones, the source data is secure, and no unintentional changes can be made to it.

- Data virtualization gives users access to near real-time data, so downstream analytics reflect the state of the data at the time they are generated.

Challenges in Data Virtualization

Here are some of the challenges of Data Virtualization:

- Badly designed data virtualization platforms cannot cope with very large or unanticipated queries.

- Setting up a data virtualization platform can be very difficult, and the initial investment costs can be high.

- Searching for appropriate data sources for analytics purposes, for example, can be very time-consuming.

- Data virtualization isn’t suitable for keeping track of historical data; for such purposes, a Data Warehouse is more suitable.

- Direct access to systems for reporting and analytics via a data virtualization platform may cause too much disruption and lead to performance deterioration.

Difference between ETL and Data Virtualization

ETL (Extract, Transform, Load) and Data Virtualization are both data integration approaches, but they differ significantly in how they access, transform, and deliver data. Here’s a comparison:

| Feature | ETL | Data Virtualization |
|---|---|---|
| Data Movement | Physically moves and stores data in a target location | Accesses data in real-time without movement |
| Latency | High latency due to batch processing | Low latency, providing real-time access |
| Data Storage Requirements | Requires additional storage for transformed data | No additional storage required |
| Flexibility | Limited flexibility; data structure changes require ETL rework | Highly flexible, adapts to source changes |
| Use Cases | Historical analysis, data warehousing | Real-time reporting, on-demand data access |
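The data movement and latency rows of the comparison can be illustrated with a small sketch. It assumes two in-memory SQLite databases, one playing the source system and one playing an ETL target; the table and function names are hypothetical.

```python
import sqlite3

# The operational source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER)")
source.execute("INSERT INTO stock VALUES ('sku-1', 5)")

# ETL style: extract the rows and load a physical copy into a separate store.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE stock_copy (sku TEXT PRIMARY KEY, qty INTEGER)")
warehouse.executemany(
    "INSERT INTO stock_copy VALUES (?, ?)",
    source.execute("SELECT sku, qty FROM stock").fetchall(),
)

def warehouse_qty(sku):
    """Reads the copy, which only changes when the next batch load runs."""
    return warehouse.execute(
        "SELECT qty FROM stock_copy WHERE sku = ?", (sku,)
    ).fetchone()[0]

def virtual_qty(sku):
    """Virtualization style: resolve the request against the source at query time."""
    return source.execute(
        "SELECT qty FROM stock WHERE sku = ?", (sku,)
    ).fetchone()[0]

# The source changes after the batch load has already finished.
source.execute("UPDATE stock SET qty = 2 WHERE sku = 'sku-1'")
```

After the update, `virtual_qty("sku-1")` reflects the new quantity, while `warehouse_qty("sku-1")` still returns the value captured by the load. The copy does have one advantage the table also notes: it preserves a point-in-time state, which is why ETL remains the usual choice for historical analysis.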

Difference between Data Virtualization and Data Visualization

Data Virtualization and Data Visualization are often confused, but they serve very different purposes. Data Virtualization integrates and provides access to data across sources, while Data Visualization focuses on presenting data insights in a visual format.

| Feature | Data Virtualization | Data Visualization |
|---|---|---|
| Purpose | Provides unified access to multiple data sources | Presents data insights visually |
| Functionality | Data integration and real-time access | Graphs, charts, and dashboards |
| User Base | Primarily data scientists, data engineers, analysts | Business users, data analysts, executives |
| Data Interaction | Data is accessed and queried from source systems | Data is displayed and interpreted visually |
| Tools Examples | Denodo, TIBCO, Cisco Data Virtualization | Tableau, Power BI, Looker |

Data Virtualization Use Cases

Here are some of the notable use cases of data virtualization:

- Data Integration

- Real-time analytics and reporting

- Self-Serve BI (Business Intelligence)

- Identifying Business or Production Issues

- Mask volatility in Data Sources

- Test Data Management with Data Virtualization

Data Integration

Data virtualization enables seamless integration across various data sources without the need for physical data movement. This is especially useful for organizations that manage data from multiple databases, cloud platforms, and applications.

By creating a unified virtual layer, data virtualization allows users to access and query data as if it’s in a single location. It makes it easier to consolidate information for enterprise-wide analytics, real-time decision-making, and reporting. It simplifies complex integrations, reduces latency, and supports agile data environments where quick access to diverse data is crucial.

Real-time analytics and reporting

Data virtualization can be used to gain real-time access to systems and gather data from various sources to create sophisticated dashboards and analytics for purposes such as sales reports. These analytics improve business insight since they access real-time data, integrate it, and are able to generate intuitive infographics.

Self-Serve BI (Business Intelligence)

With data virtualization, business users and analysts can directly access and explore data without relying on IT teams to provide it.

Through a virtual data layer, users can run queries, create reports, and derive insights from multiple sources in real time. This self-serve BI capability empowers users to make data-driven decisions faster and provides data independence.

Identifying Business or Production Issues

Virtual data clones can be used to carry out Root Cause Analysis (RCA) for issues. Changes can also be implemented in these virtual copies in order to validate and ensure that they don’t have any adverse effects prior to implementing changes in the data source.
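One way to picture validating a change on a virtual copy is a copy-on-write overlay. The sketch below uses Python's `collections.ChainMap`, where writes land in an empty overlay and reads fall through to the source. It is only an analogy for how a virtual clone behaves, not a description of any product's cloning mechanism, and the pricing values are invented.

```python
from collections import ChainMap

# Stand-in for production data (hypothetical values).
production_rules = {"discount_rate": 0.10, "tax_rate": 0.20}

# Virtual clone: an empty overlay in front of the source. Writes go to the
# overlay only; unchanged keys are still read from production_rules.
# Note: values are flat here; nested mutable values would still be shared.
clone = ChainMap({}, production_rules)
clone["discount_rate"] = 0.25  # the candidate change under investigation

def final_price(rules, base_price):
    """Apply the discount, then tax, using whichever rule set is passed in."""
    discounted = base_price * (1 - rules["discount_rate"])
    return round(discounted * (1 + rules["tax_rate"]), 2)
```

Comparing `final_price(clone, 100)` with `final_price(production_rules, 100)` shows the effect of the proposed change, and `production_rules` itself is never modified. The overlay stores only the changed key, which mirrors the idea that a virtual clone needs far less storage than a full copy.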

Mask volatility in Data Sources

During volatile periods such as mergers, acquisitions, or the start of outsourcing initiatives, a business can use data virtualization as an abstraction layer to mask the changes being made to data sources and applications.
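The abstraction-layer idea can be sketched with a plain SQL view. Consumers query a stable logical name while the table behind it is replaced. The table and column names (`legacy_clients`, `crm_accounts`, `customers`) are hypothetical, and SQLite stands in for whatever systems are actually being migrated.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Before the migration: the view maps a stable logical schema onto the legacy table.
conn.execute("CREATE TABLE legacy_clients (client_id INTEGER, full_name TEXT)")
conn.execute("INSERT INTO legacy_clients VALUES (1, 'Acme')")
conn.execute(
    "CREATE VIEW customers AS "
    "SELECT client_id AS id, full_name AS name FROM legacy_clients"
)

def customer_names():
    """Consumer code: depends only on the view's name and columns."""
    return [row[0] for row in conn.execute("SELECT name FROM customers ORDER BY id")]

before = customer_names()

# During the migration: data moves to a new system with different column names,
# and only the view definition is updated to point at it.
conn.execute("CREATE TABLE crm_accounts (account_no INTEGER, display_name TEXT)")
conn.execute("INSERT INTO crm_accounts SELECT client_id, full_name FROM legacy_clients")
conn.execute("DROP VIEW customers")
conn.execute(
    "CREATE VIEW customers AS "
    "SELECT account_no AS id, display_name AS name FROM crm_accounts"
)
conn.execute("DROP TABLE legacy_clients")

after = customer_names()
```

`customer_names()` is unchanged across the migration and returns the same rows before and after, even though the legacy table no longer exists.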

Test Data Management with Data Virtualization

Data virtualization is an effective approach to Test Data Management (TDM), which involves generating and managing data for testing, training, and development purposes. In shared database environments, direct access to data can lead to conflicts, security risks, and outdated or irrelevant data usage.

With data virtualization, virtual copies of data can be created for different teams, minimizing conflicts and preventing data pollution. Teams can securely access test data via a virtual layer, apply data masking for sensitive information, generate new test datasets, and conduct analytics or automated tests—without needing direct access to the primary data source.

Moreover, test analytics and test reporting tools such as BrowserStack Observability can further enhance the value of data virtualization by providing deep insights into test data quality and performance metrics.

These tools help identify trends, pinpoint issues, optimize testing processes, and ensure higher-quality outcomes in software development and deployment.
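The data masking mentioned in this section can be sketched as a view that exposes only masked values to test teams. This is a minimal illustration using SQLite's built-in string functions. The table, the view name `customers_for_testing`, and the masking rules are assumptions, and production-grade masking usually needs stronger guarantees than simple truncation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Stand-in for the primary data source, holding sensitive fields.
conn.execute(
    "CREATE TABLE customers "
    "(id INTEGER PRIMARY KEY, name TEXT, email TEXT, card_number TEXT)"
)
conn.execute(
    "INSERT INTO customers VALUES "
    "(1, 'Ada Lovelace', 'ada@example.com', '4111111111111111')"
)

# Test teams query this view instead of the table. Masking happens at read
# time, so no unmasked copy of the data is ever handed to them.
conn.execute("""
    CREATE VIEW customers_for_testing AS
    SELECT
        id,
        'Customer ' || id AS name,
        substr(email, 1, 1) || '***' || substr(email, instr(email, '@')) AS email,
        '************' || substr(card_number, -4) AS card_number
    FROM customers
""")

def test_rows():
    """Rows as a test suite would see them through the virtual layer."""
    return conn.execute(
        "SELECT id, name, email, card_number FROM customers_for_testing ORDER BY id"
    ).fetchall()
```

The masked rows keep the shape of the real data (an email-like string, a sixteen-character card field with the real last four digits), which is usually what automated tests need, while names and full card numbers stay behind the view.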

Common Data Sources used for Data Virtualization

Common data sources used for data virtualization include:

| Data Source Type | Examples | Description |
|---|---|---|
| Relational Databases | Oracle, MySQL, SQL Server, PostgreSQL | Structured, transactional data crucial for business applications. |
| NoSQL Databases | MongoDB, Cassandra, Couchbase | Unstructured or semi-structured data used in big data and real-time applications. |
| Data Warehouses | Snowflake, Amazon Redshift, BigQuery | Historical data storage for analytics and reporting. |
| Cloud Storage | AWS S3, Google Cloud Storage, Azure Blob Storage | Large volumes of semi-structured or unstructured data. |
| Big Data Platforms | Hadoop, Apache Spark, HDFS | High-volume, high-velocity data management for advanced analytics. |
| Enterprise Applications | Salesforce, SAP, Oracle ERP | Business data across CRM, HR, finance, and operations. |
| APIs and Web Services | REST APIs, SOAP, GraphQL | Real-time integration of data from external sources or applications. |
| Spreadsheets and Flat Files | Excel, CSV, JSON, XML | Ad-hoc or smaller datasets in various formats. |
| Streaming Data Sources | Apache Kafka, Amazon Kinesis | Real-time data streams for low-latency insights. |

Features of a Data Virtualization System

Data virtualization systems provide a powerful framework for organizations to access, manage, and analyze data from multiple sources without the need for complex data movement.

Here is a look at the key features that make data virtualization an essential tool for modern data management:

- Data Integration Across Sources: Combines data from multiple sources, such as databases, cloud storage, and applications, without needing to physically replicate data.

- Real-Time Data Access: Enables on-demand, real-time data retrieval, providing faster insights and supporting agile decision-making.

- Unified Data View: Offers a single, virtualized view of data, abstracting away the complexity of multiple data sources for users.

- Data Transformation and Aggregation: Allows data to be transformed, joined, and aggregated as needed, ensuring consistent data presentation for analytics and reporting.

- Metadata Management: Manages metadata, data lineage, and schema mapping, making it easy to understand data’s origin, context, and structure.

- Security and Access Control: Protects data through robust authentication, authorization, and encryption capabilities, ensuring only authorized access.

- Data Caching and Performance Optimization: Improves query speed and reduces latency by using caching and other optimization techniques.

- Data Governance and Compliance: Supports regulatory compliance by managing data usage, quality, and access policies, crucial for data-sensitive industries.

- Scalability and Flexibility: Adapts to growing data volumes and diverse data types, enabling the system to scale as data needs increase.

- Support for Multiple Access Methods: Offers flexibility by supporting SQL, REST APIs, GraphQL, and other query interfaces, meeting the needs of various users and applications.

Best Tools for Data Virtualization

Here is an overview of some of the top tools in the industry, along with their key features:

1. Denodo

Denodo is a comprehensive data virtualization platform widely used in large enterprises for real-time data access and analytics, known for its robust performance and security features.

Key Features:

- Advanced data caching for high-speed access

- Real-time data integration across multiple sources

- Detailed metadata management and data lineage tracking

- User-friendly data catalog for easy data discovery and exploration

2. TIBCO Data Virtualization

TIBCO Data Virtualization integrates and provides access to diverse data sources, focusing on scalability and flexibility for complex enterprise needs.

Key Features:

- Supports integration with various data sources and formats

- Self-service data access for end-users

- Built-in security and data governance for controlled access

- Data caching and query optimization for faster insights

3. IBM Cloud Pak for Data (Data Virtualization Module)

This module of IBM’s Cloud Pak for Data platform integrates data across hybrid cloud environments, supporting AI-driven insights for enterprise data access.

Key Features:

- Unified data access across on-premises and cloud sources

- AI-powered insights for data-driven decision-making

- Robust security and compliance features for enterprise use

- High scalability and seamless integration with IBM’s AI tools

4. Cisco Data Virtualization (formerly Composite Software)

Cisco Data Virtualization focuses on simplifying data access across complex enterprise environments, excelling in data consolidation and transformation.

Key Features:

- Real-time data integration for immediate access

- Extensive support for diverse data sources

- Optimized queries to enhance performance

- Metadata management and data governance tools

5. Microsoft SQL Server PolyBase

PolyBase, a feature within Microsoft SQL Server, allows seamless integration with external data sources, ideal for organizations in the Microsoft ecosystem.

Key Features:

- Access to big data sources and cloud integration

- Compatibility with Azure services for cloud scalability

- Efficient management of large datasets

- Support for multiple data formats and external sources

6. Red Hat Data Virtualization

Red Hat Data Virtualization is an open-source solution tailored for hybrid cloud environments, providing scalable data integration capabilities.

Key Features:

- Real-time data integration and transformation

- Support for diverse data sources, including cloud and on-premises

- Containerized deployments for scalability in cloud environments

- Tools for metadata management and data lineage tracking

Conclusion

Data virtualization offers substantial advantages over the conventional ETL method in terms of cost reduction and productivity gains. Data virtualization platforms provide faster data preparation, use data snapshots that take up less disk space, and enable the use of data from various sources for analytics or other purposes.

Moreover, data virtualization systems can be highly sophisticated, with features such as integrated governance and security, data cleaning solutions that help the system self-manage, and the ability to roll back any changes made. Data virtualization has many practical applications, and businesses should explore the solutions it could provide for their needs.

To further enhance your data-driven efforts, BrowserStack Test Observability offers robust test data management and analytics, giving your team end-to-end visibility into testing quality and performance.