Introduction

Carousell, the largest consumer marketplace in APAC, faced a significant challenge: releasing new features weekly while maintaining quality in the region’s fast-evolving mobile market. Release days were stressful, with unpredictable issues, manual testing bottlenecks, and long hours for the QA team. To streamline its release process, Carousell needed stable automation and continuous testing within its CI/CD pipeline. By adopting BrowserStack’s Real Device Cloud, the team scaled test automation 13x, reduced regression testing time from a day to an hour, and eliminated the chaos of last-minute fixes. Today, Carousell delivers high-quality releases with confidence, ensuring a seamless experience for its users.

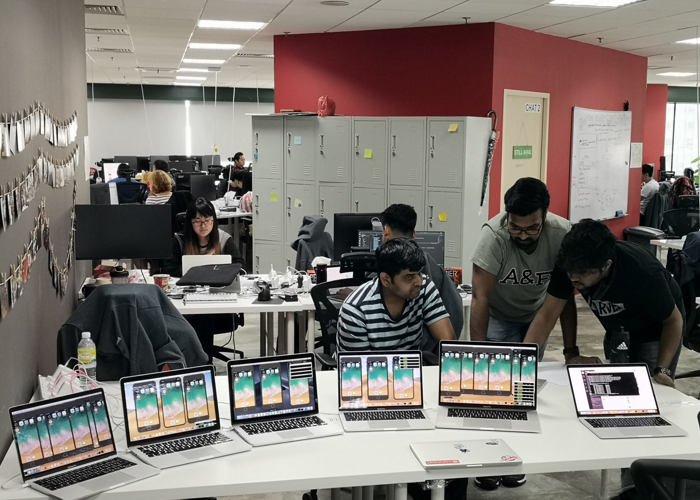

Stressful release days

Make release days stress-free.

To meet consumer expectations in the fastest-growing mobile market in the world, brands in APAC have to be more than “mobile-first”. They need to keep up with a highly dynamic and fragmented mobile landscape.

Carousell, the largest consumer marketplace for APAC users, was releasing new features and updates every week. But release days were stressful for the whole team.

“Everyone dreaded Fridays because it meant unpredictability—about issues we’d find, fixes we’d need to make, and how late it’d be before everyone got home,” says Martin Schneider, Delivery Manager and Senior Test Engineer at Carousell.

To find and fix issues well before release days, Carousell needed visibility into quality throughout their release cycles. They recognized the need for stable automation and continuous testing in their CI/CD pipeline. But getting there was a twofold challenge.

“When we first began automation, it couldn’t really stick,” says Schneider. “With our setup, each team had a dedicated test engineer to test their builds and features.” To address this, Carousell changed its ‘dedicated QA’ setup so that a group of testers could work exclusively on building a stable, high-coverage test automation framework.

However, given the rapid pace of engineering, test engineers were kept busy just keeping up with manual testing, and infrastructure upkeep consumed whatever time was left.

“We ran regression tests once a day on a device lab that was difficult to maintain,” says Schneider. “Making the decision about adding new devices, updating the existing ones, removing the ones that weren’t needed—the small issues became cumbersome once they added up,” he says.

To give their testers time to focus on scaling the automation framework, Carousell tried outsourcing the infrastructure to a device farm offered by their existing cloud services provider. But “there were restrictions with this solution,” says Schneider. “To test a build, we had to re-upload the app and the tests. For a single test run, the setup and teardown of the test device would take more than five minutes. It added to our turnaround time,” he says.

Carousell needed quick, deterministic test feedback throughout the release cycle. To get there, they needed to scale their automation framework and find a stable, high-performing test infrastructure to run it on.