Test authoring is a crucial step in software testing. It defines how QA resources are utilized and influences the testing tech stack. Effective test authoring requires a structured approach and disciplined effort to ensure comprehensive test coverage.

Overview

What is Test Authoring?

Test Authoring is the process of designing and creating test cases that validate the functionality, performance, and reliability of a software application.

Key Aspects of Test Authoring:

- Clarity and Precision: Tests should be clearly defined, with concise steps and expected outcomes to avoid ambiguity.

- Reusability: Create modular test cases that can be reused across different scenarios, enhancing efficiency and maintainability.

- Traceability: Ensure tests are linked to specific requirements or user stories to verify they are covering the right features.

- Automation Compatibility: Write tests in a way that they can be easily automated, enabling quicker feedback loops.

- Comprehensiveness: Cover positive, negative, edge, and boundary cases to ensure thorough test coverage.

- Maintainability: Structure tests to be easily updated as the software evolves, minimizing technical debt.

Evolution of Test Authoring:

- Manual Testing Era: Test cases were created manually, often resulting in long, tedious documentation and inconsistent coverage.

- Advent of Automation: The rise of automation tools like Selenium transformed test authoring, allowing for faster execution and repeated test runs.

- Test-Driven Development (TDD): TDD promoted the practice of writing tests before code, ensuring that tests are integrated from the start of the development process.

- Behavior-Driven Development (BDD): Building on TDD, BDD practices led to more collaboration between developers, testers, and stakeholders, resulting in tests written in structured natural language (e.g., Gherkin syntax).

- AI and Machine Learning: AI-powered testing tools now assist in test authoring, automatically generating test cases and optimizing test coverage.

This guide explores the significance of test authoring, its key components, and best practices.

What is Test Authoring?

Test case authoring is the process of designing and documenting individual test cases. It’s a crucial step in the software testing life cycle, bridging the gap between requirements/design specifications and actual testing.

It involves clearly defining the steps a tester will take to verify a specific feature, function, or requirement of a software application. A well-authored test case ensures consistent, repeatable, and effective testing.

Importance of Test Authoring

Test case authoring is crucial in software development and quality assurance. It’s a fundamental activity that directly impacts a software project’s quality, reliability, and success.

Below are the key reasons why Test Authoring is important:

- Ensures Software Quality and Reliability: Provides a structured approach to validating functionality, detecting defects early, and reducing critical flaws before release.

- Improves Test Coverage and Effectiveness: Uses proven techniques to cover all functionalities, edge cases, and failure points, enhancing defect detection.

- Facilitates Consistent and Repeatable Testing: Standardized test cases ensure uniform execution, reproducibility, and reliable defect identification.

- Enables Efficient Test Management and Execution: Provides clear instructions, facilitates test progress tracking, and simplifies automation for long-term efficiency.

- Enhances Communication and Collaboration: Serves as a shared reference for testers, developers, and stakeholders, ensuring clear expectations and traceability.

- Reduces Development Costs and Time: Identifies defects early, minimizing rework, streamlining testing, and accelerating development cycles.

- Improves Maintainability and Reusability: Acts as software documentation, ensuring easy updates, long-term relevance, and reusability across projects.

Key Components of Test Authoring

The following are key components of test case authoring:

- Test Case Identification and Title: A clear, concise identification method (e.g., a unique test case ID or reference number). Simple, meaningful titles indicate what the test case validates.

- Test Scenario: Provide a high-level description or explanation of the functionality or aspect of the application the test case will verify.

- Preconditions/Prerequisites: Conditions or configurations that must be in place before running the test. Examples: specific test data, a particular software version, environment setup, or a logged-in user account.

- Test Steps: The detailed, step-by-step actions the tester executes during testing. Steps must be precise, easy to follow, and understandable to all stakeholders, including entry-level testers.

- Test Data: Input values or data required to execute each step in the test case, including the conditions or ranges under which inputs should be tested.

- Expected Results/Outcomes: The explicit outcomes that must occur if the system or feature works as intended. They clarify precisely what constitutes a pass or a fail.

- Actual Results (captured during execution): Outcomes recorded during test execution and compared against the expected results so that discrepancies are captured clearly.

- Postconditions: Conditions or states the software/system/application should ideally be in after test execution. Helps determine readiness for subsequent tests or recovery for failures.

- Priority and Severity: Priority reflects the importance or urgency of running the test (high, medium, low). Severity indicates the potential impact a failed test case may have on end users or business functions.

- Traceability: Associating test cases with corresponding business requirements, user stories, or functional specifications. Provides clarity, maintains proper test coverage, and simplifies troubleshooting and risk management.

- Test Environment: Detailed description and setup instructions of environments (development, staging, production) in which tests should be executed, including hardware/software configuration requirements.

- Test Tools and Techniques: Identification of automated and manual tools or techniques to execute tests to improve accuracy, efficiency, or repeatability.
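The components above can be captured in a simple data structure. The following is a minimal sketch, not a standard schema: the class name `AuthoredTestCase`, its field names, the `Level` enum, and the sample login case are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Level(Enum):
    """Shared scale used here for both priority and severity (an assumption)."""
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass
class AuthoredTestCase:
    """One authored test case; field names are illustrative only."""
    case_id: str
    title: str
    scenario: str
    steps: List[str]
    expected_result: str
    preconditions: List[str] = field(default_factory=list)
    test_data: Dict[str, str] = field(default_factory=dict)
    postconditions: List[str] = field(default_factory=list)
    priority: Level = Level.MEDIUM
    severity: Level = Level.MEDIUM
    requirement_ids: List[str] = field(default_factory=list)  # traceability links
    environment: str = "staging"
    actual_result: Optional[str] = None  # filled in during execution

    def status(self) -> str:
        """Return 'not run', 'pass', or 'fail' by comparing actual to expected."""
        if self.actual_result is None:
            return "not run"
        return "pass" if self.actual_result == self.expected_result else "fail"


# Hypothetical example case for a login feature.
login_case = AuthoredTestCase(
    case_id="TC-001",
    title="Login with valid credentials",
    scenario="Registered user signs in from the login page",
    steps=["Open the login page", "Enter username and password", "Click Sign in"],
    expected_result="Dashboard is displayed",
    preconditions=["User account exists"],
    test_data={"username": "demo_user", "password": "demo_pass"},
    postconditions=["User session is active"],
    priority=Level.HIGH,
    severity=Level.HIGH,
    requirement_ids=["REQ-1"],
)
```

Keeping the actual result separate from the expected result, as in `status()`, mirrors the distinction between authoring time and execution time described in the list.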


Roles and Responsibilities of a Test Case Author

A Test Case Author is responsible for defining, writing, validating, and maintaining detailed test cases to ensure that software meets specified requirements and performs reliably.

Below are the key roles and responsibilities of a test author:

- Understanding and Analyzing Requirements: Reviewing requirement documents, user stories, and acceptance criteria while clarifying doubts with stakeholders.

- Creating Test Plans and Scenarios: Documenting high-level test scenarios and ensuring structured and comprehensive test coverage.

- Test Case Authoring and Documentation: Writing clear and detailed test cases with preconditions, execution steps, test data, expected results, and severity classification.

- Reviewing and Revising Test Cases: Conducting peer reviews for accuracy and updating test cases based on feedback and requirement changes.

- Traceability Management: Linking test cases to requirements and maintaining traceability metrics for tracking and reporting.

- Test Execution and Reporting: Running test cases, documenting actual results, and identifying and reporting defects promptly.

- Collaboration and Communication: Working closely with developers, product owners, and stakeholders for requirement clarification and issue resolution.

- Contributing to Automation Efforts: Identifying test cases for automation and collaborating with automation teams for script development.

- Maintaining Test Artifacts and Documentation: Keeping test cases organized, accessible, and reusable for future reference.

- Continuous Improvement: Enhancing test processes, adopting new methodologies, and staying updated with industry best practices.

Different Types of Test Authoring

Test authoring can be classified based on the level of testing, techniques used, objectives, and how test data is managed. These approaches ensure comprehensive test coverage and efficiency in software testing.

1. Test Authoring Based on Testing Level

Test authoring varies depending on the scope of testing, from individual components to full system validation.

- Unit Test Authoring: Focuses on testing individual components, such as functions, methods, or classes. Typically automated and executed by developers using unit testing frameworks.

- Integration Test Authoring: Verifies interactions between different modules or components, ensuring seamless communication and data flow. Often automated and executed by developers or integration testers.

- System Test Authoring: Evaluates the entire application, verifying end-to-end functionality and compliance with system requirements. Usually performed by QA testers using black-box testing techniques.

- Acceptance Test Authoring: This process ensures that the application meets business requirements and is ready for release. It often involves business analysts, product owners, and end-users in User Acceptance Testing (UAT).
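As an illustration of the unit level, here is a minimal sketch using Python's built-in `unittest` framework. The function `apply_discount` and its rules are hypothetical and exist only to give the tests something to verify.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        # Positive case: a common, valid input.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        # Boundary case: the lowest valid percentage.
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        # Negative case: input outside the allowed range.
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


def run_discount_suite() -> unittest.TestResult:
    """Load and run the tests above, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Each test method checks one behavior and names it in the method title, which keeps failures easy to interpret.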


2. Test Authoring Based on Testing Technique

Different testing techniques define how test cases are written and executed based on internal knowledge of the system.

- Black-Box Test Authoring: This method tests software functionality without internal code knowledge, relying on requirements and specifications. It uses techniques such as boundary value analysis and decision table testing.

- White-Box Test Authoring: Examines the internal structure and logic of the code, aiming for high coverage of conditions, branches, and paths. Uses techniques like statement coverage and condition coverage.

- Grey-Box Test Authoring: Combines black-box and white-box approaches, using partial knowledge of the internal code to improve test design.
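Boundary value analysis, mentioned above as a black-box technique, can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the helper `boundary_values` and the rule `is_valid_quantity` (order quantity between 1 and 100 inclusive) are hypothetical names invented for this example.

```python
from typing import List, Tuple


def boundary_values(minimum: int, maximum: int) -> List[int]:
    """Return values just below, on, and just above each edge of an inclusive range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    # Remove duplicates (possible for very narrow ranges) while keeping order.
    return list(dict.fromkeys(candidates))


def boundary_cases(minimum: int, maximum: int) -> List[Tuple[int, bool]]:
    """Pair each boundary value with the result the specification expects."""
    return [(v, minimum <= v <= maximum) for v in boundary_values(minimum, maximum)]


def is_valid_quantity(quantity: int) -> bool:
    """Hypothetical rule under test: quantity must be 1 to 100 inclusive."""
    return 1 <= quantity <= 100


# Check the implementation against every derived boundary case.
failures = [
    (value, expected)
    for value, expected in boundary_cases(1, 100)
    if is_valid_quantity(value) != expected
]
```

The test inputs are derived from the specification (the range 1 to 100) rather than from the code, which is what makes this a black-box approach.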

3. Test Authoring Based on Test Objective

Test cases are designed with specific goals, such as functionality validation, performance assessment, or regression testing.

- Functional Test Authoring: Validates that features and functionalities work as expected based on requirements.

- Non-Functional Test Authoring: Assesses performance, security, usability, and scalability aspects of the application.

- Regression Test Authoring: Ensures new code changes do not break existing functionality by re-executing test cases. Often automated.

- Exploratory Test Authoring: Focuses on uncovering defects through unscripted, experience-based testing to identify unexpected behaviors.

4. Test Authoring Based on Test Data

Test data plays a crucial role in verifying system behavior under different conditions.

- Data-Driven Test Authoring: Uses a single test logic with multiple input data sets to validate different scenarios efficiently.
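A data-driven test keeps one piece of test logic and feeds it a table of inputs and expected results. The sketch below uses `unittest.TestCase.subTest` so that each data row is reported separately; the function `classify_password` and its rules are hypothetical.

```python
import unittest


def classify_password(password: str) -> str:
    """Hypothetical function under test."""
    if len(password) < 8:
        return "too short"
    if password.isalpha() or password.isdigit():
        return "weak"
    return "ok"


# Each row is (input, expected result). Adding a scenario means adding a row.
PASSWORD_CASES = [
    ("abc", "too short"),
    ("", "too short"),
    ("abcdefgh", "weak"),
    ("12345678", "weak"),
    ("abc12345", "ok"),
]


class PasswordDataDrivenTests(unittest.TestCase):
    def test_classification(self):
        for password, expected in PASSWORD_CASES:
            # subTest reports each failing row individually instead of
            # stopping at the first failure.
            with self.subTest(password=password):
                self.assertEqual(classify_password(password), expected)


def run_password_suite() -> unittest.TestResult:
    """Load and run the data-driven test, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PasswordDataDrivenTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

In practice the data table could be loaded from a CSV file or spreadsheet; an in-memory list is used here to keep the sketch self-contained.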

Process of Test Case Authoring

Test case authoring is a structured, iterative process focused on designing, documenting, and validating test cases based on project requirements. The process ensures thorough coverage of functionality, reliability, and usability, which helps teams deliver high-quality products.

Here’s a detailed description of the typical steps and process involved:

1. Requirement Analysis: Analyze functional requirements, user stories, and acceptance criteria to understand testing needs. Clarify any ambiguities with stakeholders to define a clear testing scope.

Output: Well-defined test objectives and a clear understanding of testing requirements.

2. Defining Test Scenarios: Identify high-level test scenarios that cover key functionalities and business processes. Ensure scenarios represent real-world user interactions.

Output: A structured list of test scenarios ensuring comprehensive coverage.

3. Test Case Design & Authoring: Develop detailed test cases, including test steps, expected outcomes, preconditions, and test data. Ensure each test case is precise, concise, and easy to execute.

Output: Well-documented and structured test cases ready for execution.

4. Test Case Review & Validation: Conduct peer reviews to validate test cases for accuracy, completeness, and alignment with requirements. Gather feedback from stakeholders to refine test cases.

Output: Reviewed and approved test cases with minimal defects.

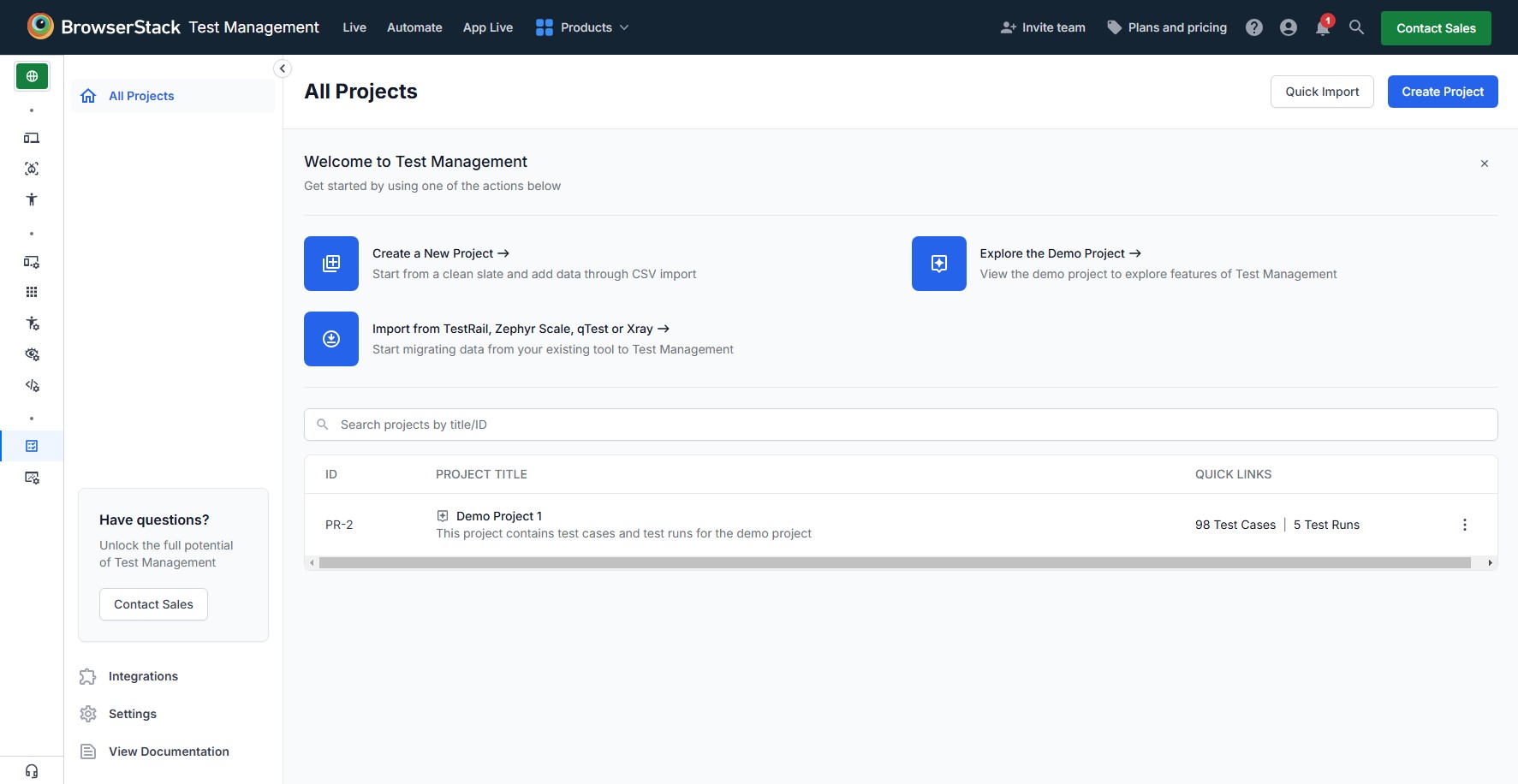

5. Organizing & Managing Test Cases: Categorize test cases based on priority, functionality, and modules. Store them in a centralized test management system for easy accessibility and tracking.

Output: An organized test case repository that streamlines execution and maintenance.

6. Traceability & Coverage Assurance: To ensure complete coverage, map test cases to requirements using a traceability matrix. Identify any missing test cases to fill gaps.

Output: A validated traceability matrix confirming that all requirements are covered.

7. Test Execution & Defect Management: Execute test cases manually or using automation tools. Capture actual results, log discrepancies as defects, and document severity, priority, and reproduction steps.

Output: Detailed test execution reports and defect logs for issue resolution.


8. Test Case Maintenance & Updates: Regularly review and update test cases to align with evolving requirements and product changes. Modify test data, scripts, and scenarios as needed.

Output: An updated and relevant test suite that remains effective over time.

9. Continuous Improvement & Optimization: Analyze test execution results, defect trends, and process efficiency. Implement improvements, refine test strategies, and incorporate best practices.

Output: Optimized test cases and improved testing efficiency for better software quality.

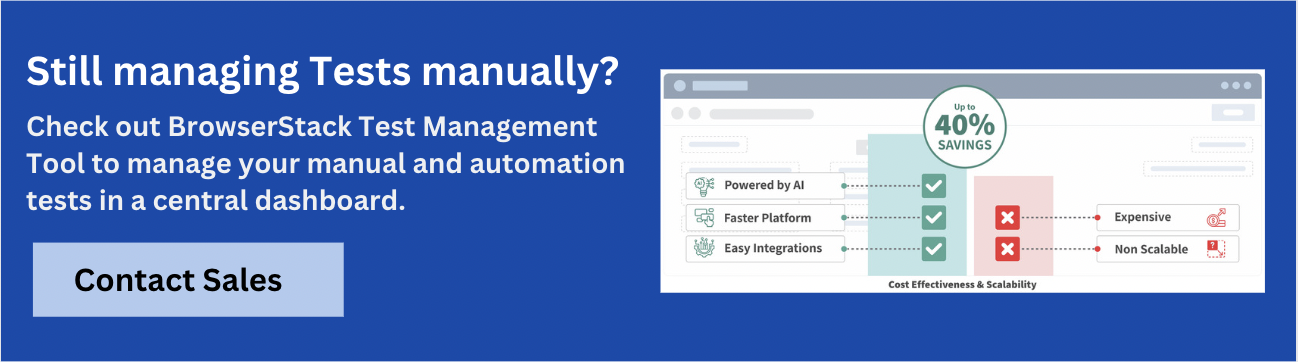
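The traceability step in the process above (step 6) can be sketched as a mapping from requirements to the test cases that cover them. This is a minimal illustration, assuming requirement and test case IDs are plain strings; the function names and sample IDs are hypothetical.

```python
from typing import Dict, List


def build_traceability_matrix(
    requirements: List[str],
    test_cases: Dict[str, List[str]],
) -> Dict[str, List[str]]:
    """Map each requirement ID to the IDs of the test cases that cover it.

    `test_cases` maps a test case ID to the requirement IDs it claims to cover.
    """
    matrix: Dict[str, List[str]] = {req: [] for req in requirements}
    for case_id, covered in sorted(test_cases.items()):
        for req in covered:
            if req in matrix:  # ignore links to unknown requirements
                matrix[req].append(case_id)
    return matrix


def uncovered_requirements(matrix: Dict[str, List[str]]) -> List[str]:
    """Return requirements that no test case covers (the coverage gaps)."""
    return [req for req, cases in matrix.items() if not cases]


# Hypothetical sample data.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC-001": ["REQ-1"],
    "TC-002": ["REQ-1", "REQ-2"],
}

matrix = build_traceability_matrix(requirements, test_cases)
gaps = uncovered_requirements(matrix)
```

Here `gaps` lists the requirements that still need test cases, which is the input for the "identify any missing test cases" part of the step.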

Enhance Test Authoring with AI-Powered Test Management

Efficient test authoring is crucial for maintaining software quality, but manual test creation can be time-consuming and prone to human error.

BrowserStack Test Management revolutionizes this process with AI-driven test authoring, enabling teams to create, manage, and execute test cases faster and more accurately.

Why Choose BrowserStack Test Management for Test Authoring?

- AI-Powered Test Case Creation: Generate test cases based on existing test suites, Jira stories, and historical test runs, ensuring comprehensive coverage.

- Smart Recommendations: Auto-populate test fields, including descriptions, expected results, and priority, reducing manual effort.

- Faster Test Case Authoring: Identify missing test cases and fill gaps effortlessly with AI assistance.

- Seamless Automation Integration: Link automated test executions to relevant test cases and track real-time results within the same platform.

- Customizable Insights & Reporting: With intuitive dashboards, you can gain visibility into test coverage, automation trends, and overall test health.

Additionally, teams can accelerate testing further with BrowserStack Low Code Automation. It allows teams to create and maintain AI-driven automated tests without coding, making automation accessible to every team member.

Its Low-Code Authoring Agent transforms natural language prompts into low-code test steps with context-aware actions and validations. This makes test creation faster and more accessible for manual QA, with no code to write.

Why Choose BrowserStack’s Low-Code Authoring Agent?

With BrowserStack’s Low-Code Authoring Agent, you can significantly reduce the complexity of test writing. This tool empowers teams to create and maintain automated tests faster and more efficiently, without requiring deep coding expertise.

- Faster Test Creation: Test scripts are generated quickly through intuitive, low-code features, speeding up the test authoring process.

- Better Test Coverage: The AI-powered suggestions help identify areas of the application that may need more attention, improving overall test coverage.

- Collaboration Across Teams: Non-technical team members can also participate in the test creation process, enhancing collaboration and ensuring broader test coverage.

- Easy Maintenance: Low-code scripts are easier to maintain and modify as application features change, helping to keep test suites up-to-date with minimal effort.

Challenges of Test Authoring

Test authoring comes with several challenges that impact efficiency and test coverage. Addressing these obstacles is crucial for maintaining high-quality testing processes:

- Inadequate or Ambiguous Requirements: Unclear or evolving requirements make it difficult to define accurate test cases, leading to gaps in test coverage.

- Ineffective Test Coverage: Missing critical scenarios, edge cases, or non-functional tests due to lack of documentation or oversight.

- Time Constraints & Pressure: Tight deadlines and agile sprints often limit the time available for thorough test case creation and updates.

- Test Data Management Challenges: Difficulty in generating, maintaining, and managing realistic test data for comprehensive validation.

- Test Environment Limitations: Inability to replicate production-like conditions, leading to unrealistic or unreliable test cases.

- Lack of Standardization & Documentation: Inconsistent formats, unclear steps, and poor documentation reduce readability and maintainability.

- Maintenance Overhead: Frequent software updates increase the complexity of maintaining and revising test cases.

- Poorly Structured Test Cases: Unclear test steps, missing prerequisites, and lack of prioritization cause confusion and inefficiency.

- High Dependency on Manual Effort: Excessive reliance on manual test authoring limits scalability and introduces inconsistencies.

- Limited Collaboration & Communication: Poor coordination between developers, testers, and stakeholders leads to misunderstandings and inefficiencies.


Best Practices for Effective Test Authoring

Effective test case writing ensures streamlined execution and high-quality software. Follow these best practices to enhance accuracy and efficiency:

- Define Requirements Clearly: Involve the QA team early in requirement discussions and continuously collaborate with stakeholders.

- Use Standardized Templates & Formats: Structure test cases using clear templates for easy readability and consistency.

- Prioritize Test Cases Effectively: Categorize test cases based on risk, business impact, and release timelines.

- Keep Test Cases Short & Specific: Focus each test on a single functionality with unambiguous instructions.

- Ensure Comprehensive Test Coverage: Use multiple testing techniques like boundary-value analysis, equivalence partitioning, and cross-browser testing.

- Regular Peer Reviews: Conduct systematic reviews to validate clarity, correctness, and alignment with requirements.

- Manage Test Data Efficiently: Create reusable, realistic test datasets for accurate and repeatable testing.

- Leverage Test Automation: Automate repetitive test cases using tools like Selenium or Cypress for faster execution.

- Maintain Version Control: Keep test cases updated and use test management tools for tracking changes.

- Encourage Continuous Collaboration: Work closely with developers and stakeholders to resolve ambiguities quickly.

- Adopt Continuous Improvement: Analyze test metrics and refine processes based on insights.

- Define a Stable Test Environment: Ensure test environments replicate production settings for accurate validation.
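The prioritization practice above can be sketched as a simple ordering rule. This is one possible scheme, not a prescribed method: the 1 to 5 scales, the score (likelihood multiplied by impact), and the rule that tests for the current release run first are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    """A test case with the attributes used for ordering (illustrative fields)."""
    case_id: str
    failure_likelihood: int  # 1 (unlikely) to 5 (very likely)
    business_impact: int     # 1 (minor) to 5 (critical)
    in_current_release: bool


def risk_score(candidate: Candidate) -> int:
    """Assumed risk model: likelihood multiplied by impact."""
    return candidate.failure_likelihood * candidate.business_impact


def prioritize(candidates: List[Candidate]) -> List[Candidate]:
    """Order by release relevance first, then by descending risk, then by ID."""
    return sorted(
        candidates,
        key=lambda c: (not c.in_current_release, -risk_score(c), c.case_id),
    )


# Hypothetical sample data.
backlog = [
    Candidate("TC-A", failure_likelihood=2, business_impact=5, in_current_release=True),
    Candidate("TC-B", failure_likelihood=4, business_impact=4, in_current_release=False),
    Candidate("TC-C", failure_likelihood=3, business_impact=5, in_current_release=True),
]

ordered_ids = [c.case_id for c in prioritize(backlog)]
```

With this sample data, the two cases tied to the current release come first, ordered by risk score, and the higher-scoring but out-of-release case comes last.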

Conclusion

Effective test authoring enhances accuracy, efficiency, and software reliability. A well-structured test management system ensures seamless organization, execution, and tracking of test cases, enabling teams to maintain software quality at scale.

By following best practices and continuously refining test strategies, teams can deliver stable, high-performing products. With BrowserStack Test Management, teams can streamline test case creation, execution, and reporting, while leveraging the Real Device Cloud to maximize coverage, accelerate bug detection, and drive faster, more reliable software releases.

Further enhance efficiency by converting test cases into low-code automated tests with the Low-Code Authoring Agent, allowing teams to create context-aware actions and validations effortlessly, without the need for coding.